From computers and smartphones to smart appliances and the internet itself, the technology we use every day exists only thanks to decades of improvements in the semiconductor industry, which have allowed engineers to keep miniaturizing transistors and fit more and more of them onto integrated circuits, or microchips. This is the famous Moore’s law: an observation, rather than an actual physical law, that the number of transistors on an integrated circuit tends to double roughly every two years.

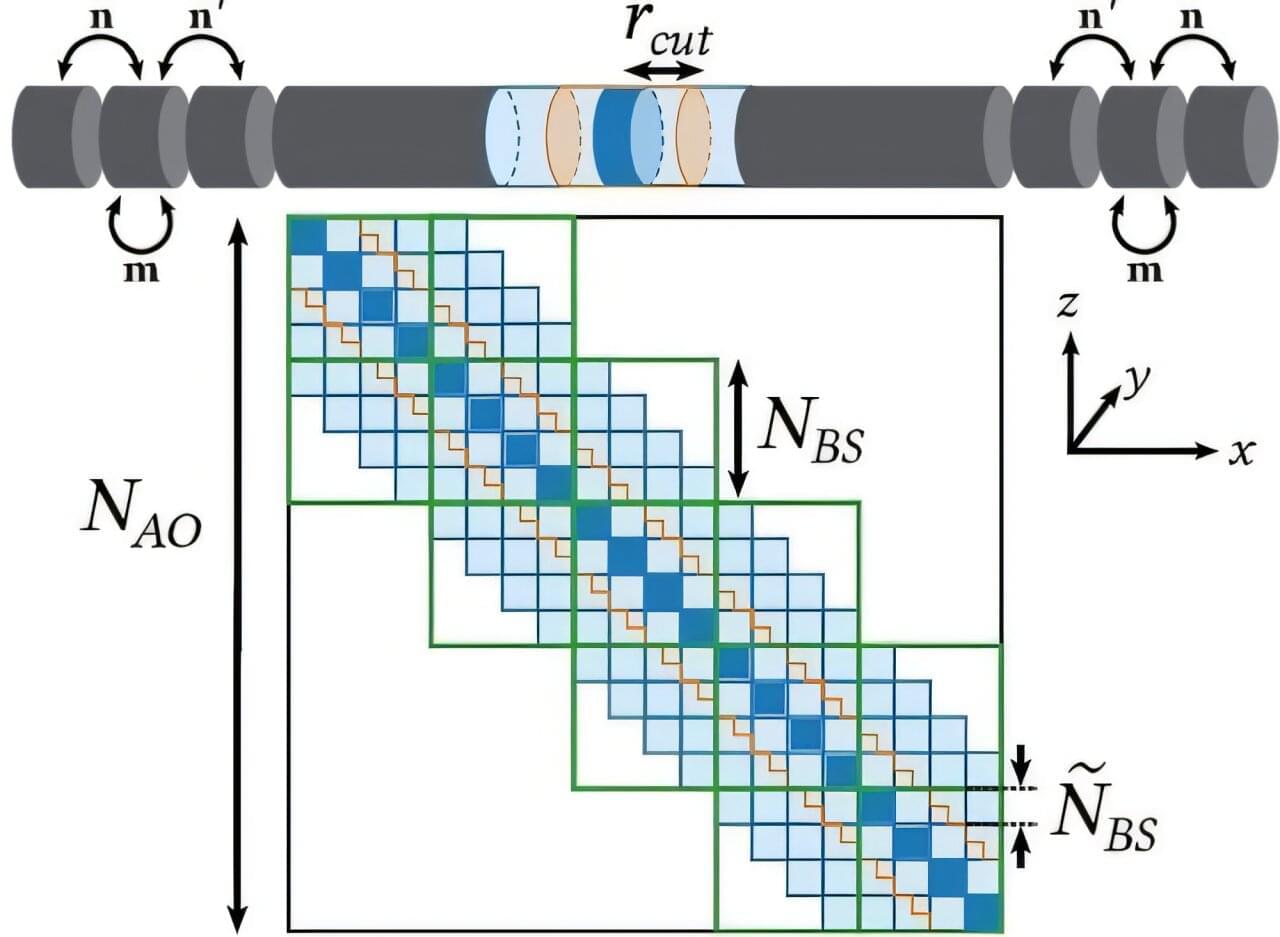

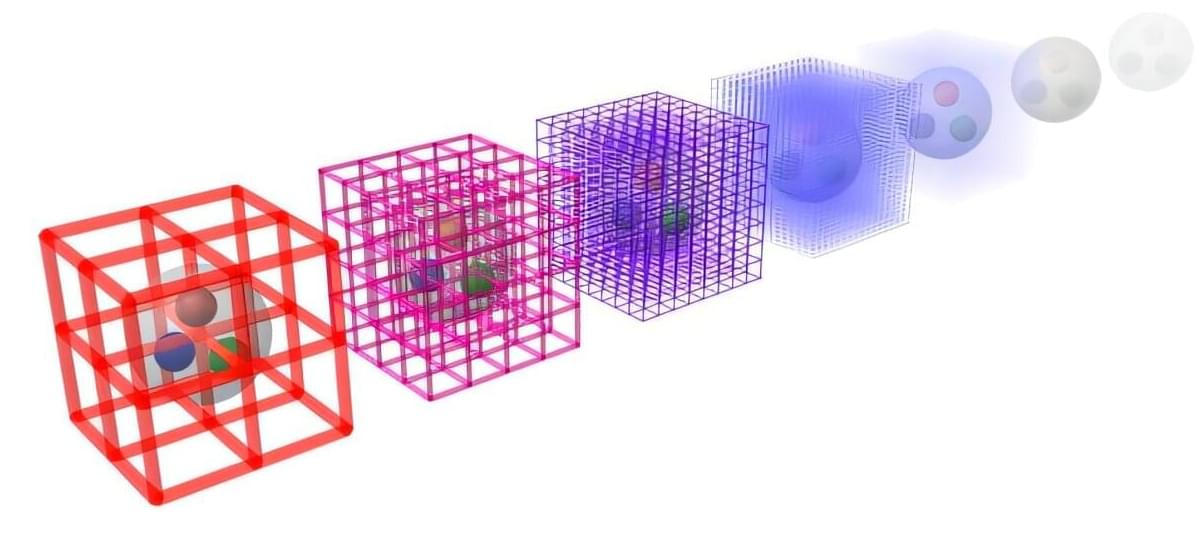

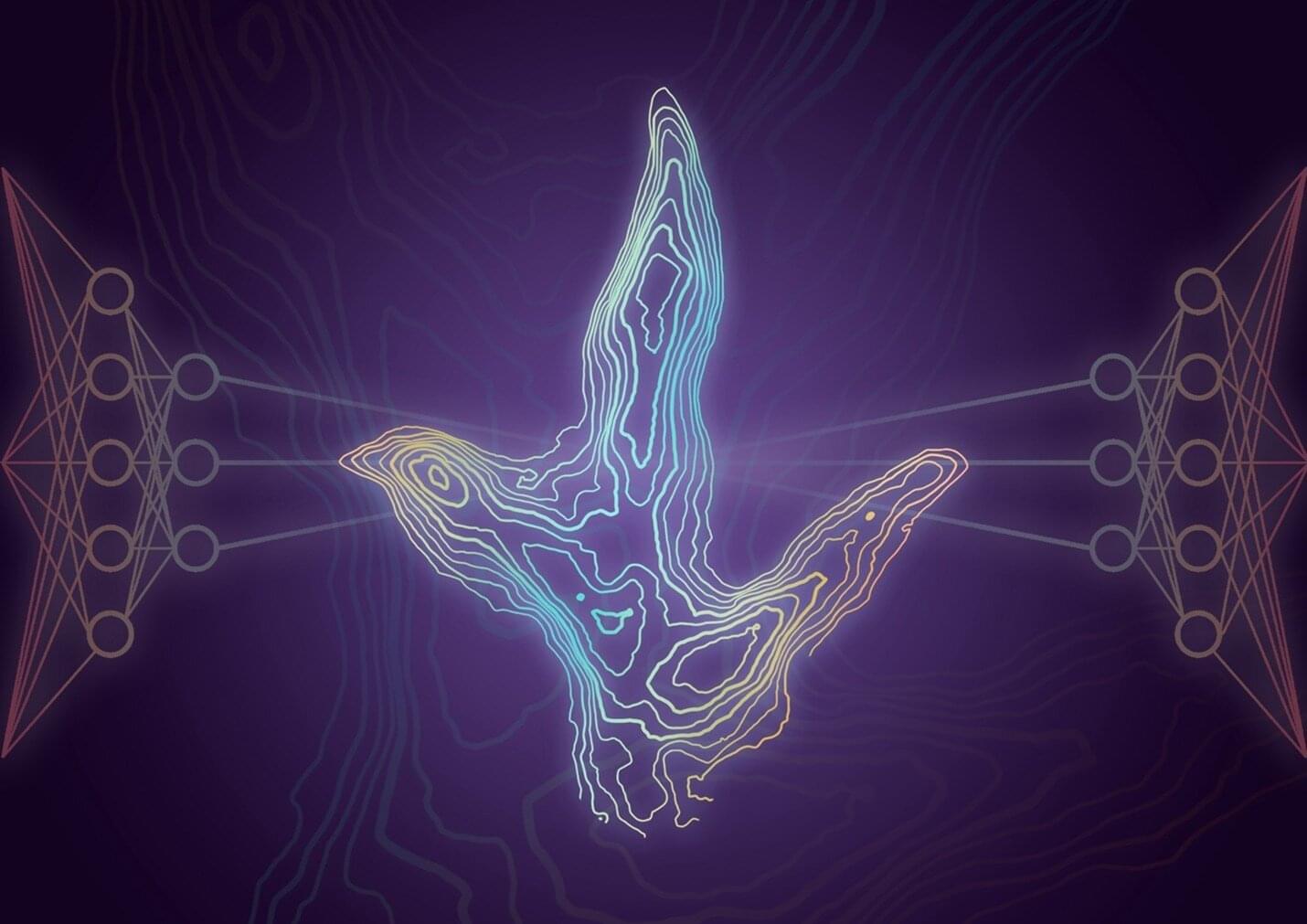

The current growth of artificial intelligence, robotics and cloud computing calls for more powerful chips made with even smaller transistors, which at this point means creating components only a few nanometers (millionths of a millimeter) in size. At that scale, classical physics is no longer enough to predict how a device will function because, among other effects, electrons get so close to one another that quantum interactions between them can strongly affect the device's performance.