AI-driven social engineering campaigns are evolving, targeting your employees, customers, and partners with impersonation tactics.

A cheetah’s powerful sprint, a snake’s lithe slither, or a human’s deft grasp: Each is made possible by the seamless interplay between soft and rigid tissues. Muscles, tendons, ligaments, and bones work together to provide the energy, precision, and range of motion needed to perform the complex movements seen throughout the animal kingdom.

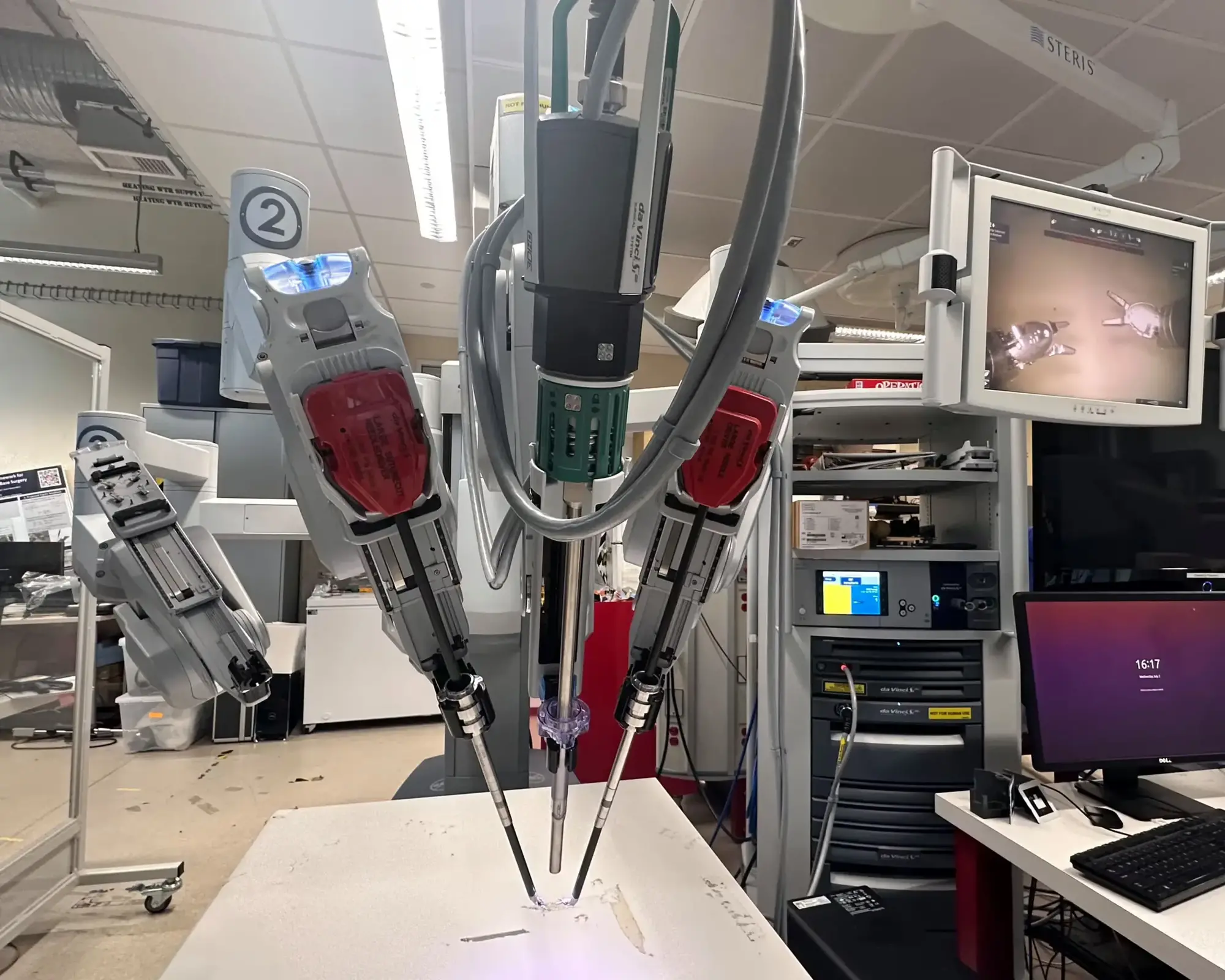

IN A NUTSHELL

🤖 The SRT-H robot, developed at Johns Hopkins University, performed a successful gallbladder surgery with human-like precision.

📚 Trained on surgical videos, the robot can learn and adapt in real time, enhancing its ability to handle complex procedures.

🛠️ Equipped with machine learning technology similar to ChatGPT, SRT-H responds to voice commands and

Dear Friends & Colleagues, Like artificial intelligence, quantum technologies will transform the world as we know it. This issue focuses on what quantum technology is and on the promises and challenges of this emerging technology.

My new AI-assisted short film is here. Kira explores human cloning and the search for identity in today’s world.

It took nearly 600 prompts, 12 days (during my free time), and a $500 budget to bring this project to life. The entire film was created by one person using a range of AI tools, all listed at the end.

Enjoy.

~ Hashem.

Instagram: hashem.alghaili

Facebook: sciencenaturepage

Other channels: https://muse.io/hashemalghaili

Wimbledon’s new automated line-calling system glitched during a tennis match Sunday, just days after it replaced the tournament’s human line judges for the first time.

The system, called Hawk-Eye, uses a network of cameras equipped with computer vision to track tennis balls in real time. If the ball lands out, a pre-recorded voice loudly says, “Out.” If the ball is in, there’s no call and play continues.

However, the software temporarily went dark during a women’s singles match between Brit Sonay Kartal and Russian Anastasia Pavlyuchenkova on Centre Court.