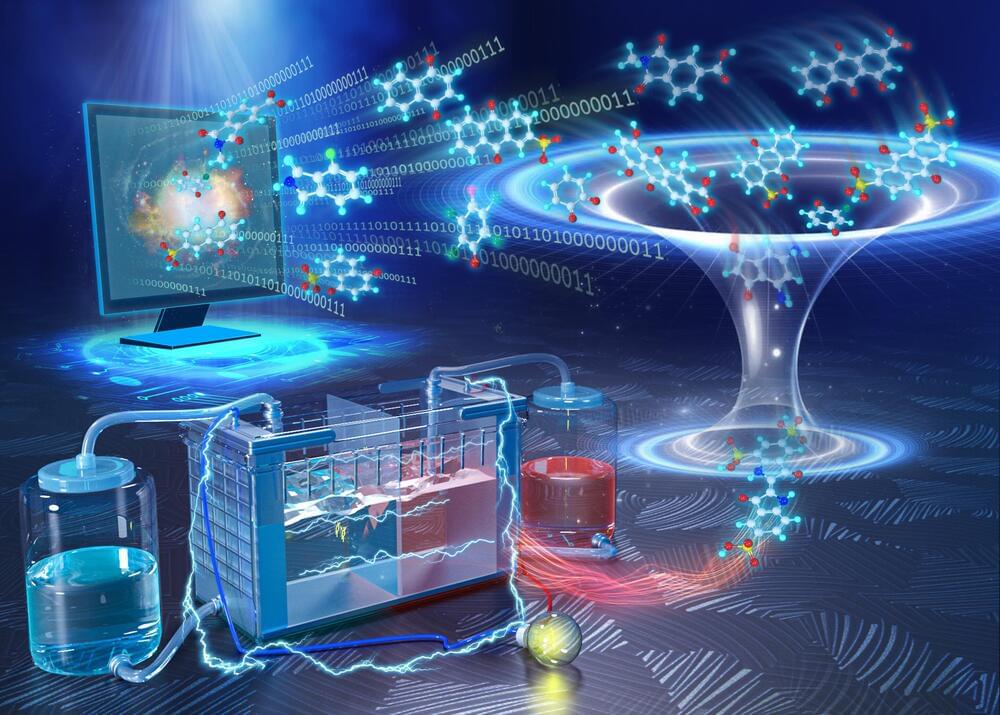

Scientists from the Dutch Institute for Fundamental Energy Research (DIFFER) have created a database of 31,618 molecules that could potentially be used in future redox flow batteries. These batteries hold great promise for energy storage. Among other things, the researchers used artificial intelligence and supercomputers to identify the molecules’ properties. Today, they publish their findings in the journal Scientific Data.

In recent years, chemists have designed hundreds of molecules that could be useful in flow batteries for energy storage. Researchers from DIFFER in Eindhoven (the Netherlands) imagined how valuable it would be if the properties of these molecules were quickly and easily accessible in a database. The problem, however, is that the properties of many of these molecules are not known. Two examples of such properties are redox potential and water solubility, which matter because they are related to the power generation capability and energy density of redox flow batteries.

To determine the still-unknown properties of the molecules, the researchers followed four steps. First, they used a desktop computer and smart algorithms to create thousands of virtual variants of two types of molecules. These molecule families, the quinones and the aza-aromatics, are good at reversibly accepting and donating electrons, which is important for batteries. The researchers supplied the computer with the backbone structures of 24 quinones and 28 aza-aromatics, plus five different chemically relevant side groups. From these, the computer generated 31,618 different molecules.
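To give a feel for how a handful of backbones and side groups can grow into thousands of virtual molecules, here is a minimal Python sketch of combinatorial enumeration. It is not the researchers' actual algorithm. The backbone (1,4-benzoquinone with four substitutable positions), the five side groups, and the function name `enumerate_variants` are all assumptions chosen for illustration, since the article does not specify them.

```python
from itertools import product

# Hypothetical side groups written as SMILES fragments.
# The article does not say which five groups were used.
SIDE_GROUPS = {
    "hydroxyl": "O",
    "amino": "N",
    "methyl": "C",
    "fluoro": "F",
    "carboxyl": "C(=O)O",
}

# Hypothetical backbone: 1,4-benzoquinone, with four ring positions
# marked as placeholders where a side group may be attached.
BACKBONE = "O=C1C{0}=C{1}C(=O)C{2}=C1{3}"


def enumerate_variants(template, n_sites, groups):
    """Return one SMILES string per way of decorating the backbone.

    Each site is either left unsubstituted (empty string) or receives
    one side group as a SMILES branch, e.g. "(O)".
    """
    options = [""] + [f"({fragment})" for fragment in groups.values()]
    return [template.format(*choice) for choice in product(options, repeat=n_sites)]


variants = enumerate_variants(BACKBONE, 4, SIDE_GROUPS)
print(len(variants))  # 6 options at each of 4 sites: 6**4 = 1296 strings
print(variants[0])    # the undecorated backbone, O=C1C=CC(=O)C=C1
```

This sketch counts symmetry-equivalent substitution patterns as separate strings. A real workflow would typically canonicalize the structures with a cheminformatics toolkit and discard duplicates, so the counts here are not expected to reproduce the 31,618 molecules reported.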