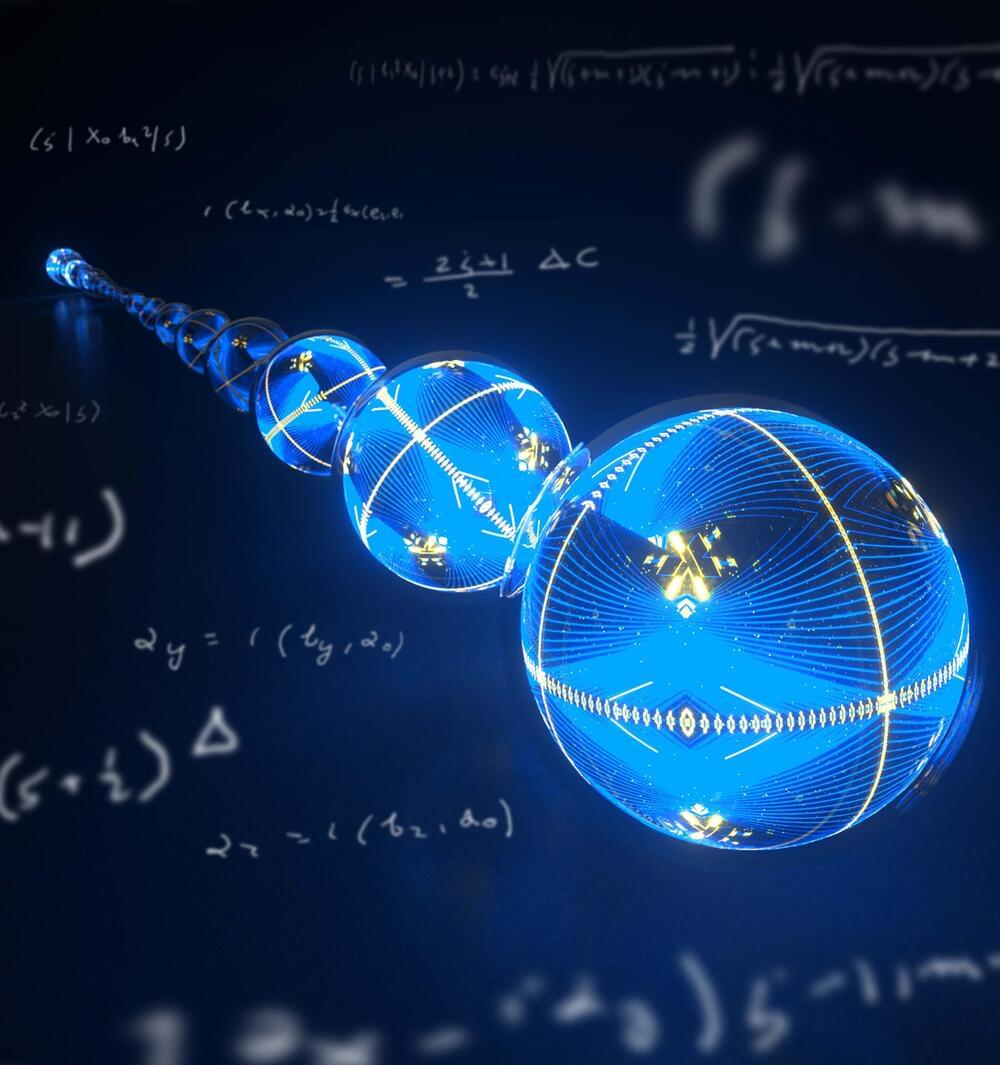

Physicists at Google Quantum AI have used their quantum computer to study a type of effective particle that is more resilient to the environmental disturbances that can degrade quantum calculations. These effective particles, known as Majorana edge modes, arise from the collective excitation of many individual particles, much as ocean waves arise from the collective motion of water molecules. Majorana edge modes are of particular interest for quantum computing because they exhibit special symmetries that can protect otherwise fragile quantum states from noise in the environment.

The condensed matter physicist Philip Anderson once wrote, “It is only slightly overstating the case to say that physics is the study of symmetry.” Indeed, studying physical phenomena and their relationship to underlying symmetries has been the main thrust of physics for centuries. Symmetries are simply statements about which transformations a system can undergo (such as a translation, a rotation, or a reflection in a mirror) while remaining unchanged. They can simplify problems and elucidate underlying physical laws. And, as the new research shows, symmetries can even prevent the seemingly inexorable quantum process of decoherence.

When running a calculation on a quantum computer, we typically want the quantum bits, or “qubits,” in the computer to be in a single, pure quantum state. Decoherence occurs when external electric fields or other environmental noise disturb that state, mixing it with other states to produce unwanted ones. If a state has a certain symmetry, however, it may be possible to isolate it, effectively creating an island of stability that cannot mix with states lacking that symmetry. Because the noise can then no longer connect the symmetric state to the others, the coherence of the state could be preserved.
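
The "island of stability" argument can be made concrete with a toy calculation. The sketch below is an illustration only and is not the model used in the experiment: it assumes two qubits, takes the parity operator Z⊗Z as the symmetry, and uses hypothetical noise terms (X⊗X and I⊗X) chosen purely for demonstration. A noise term that commutes with the symmetry has zero matrix element between states of different parity, so it cannot mix them; a noise term that breaks the symmetry can.

```python
# Toy illustration (assumed setup, not the experiment's model).
# Two qubits, basis order |00>, |01>, |10>, |11>.

def kron(a, b):
    """Kronecker product of two square matrices given as nested lists."""
    return [[a[i][j] * b[k][l] for j in range(len(a)) for l in range(len(b))]
            for i in range(len(a)) for k in range(len(b))]

def matmul(a, b):
    """Plain matrix product of nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def commutes(a, b):
    """True if a and b commute (exact integer arithmetic)."""
    return matmul(a, b) == matmul(b, a)

def matrix_element(bra, op, ket):
    """<bra| op |ket> for real vectors given as lists."""
    n = len(ket)
    return sum(bra[i] * op[i][j] * ket[j] for i in range(n) for j in range(n))

I = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]   # bit flip
Z = [[1, 0], [0, -1]]  # phase flip

parity = kron(Z, Z)    # symmetry: +1 on |00>, |11>; -1 on |01>, |10>

even_state = [1, 0, 0, 0]  # |00>, parity +1
odd_state = [0, 1, 0, 0]   # |01>, parity -1

symmetric_noise = kron(X, X)  # hypothetical noise that respects parity
breaking_noise = kron(I, X)   # hypothetical noise that violates parity

# Symmetry-respecting noise cannot connect the two parity sectors.
print(commutes(parity, symmetric_noise))                        # True
print(matrix_element(odd_state, symmetric_noise, even_state))   # 0

# Symmetry-breaking noise can.
print(commutes(parity, breaking_noise))                         # False
print(matrix_element(odd_state, breaking_noise, even_state))    # 1
```

The vanishing matrix element in the first case is the formal version of the statement that the noise "can no longer connect" the symmetric state to the others.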