You can’t move a pharmaceutical scientist from a lab to a kitchen and expect the same research output. Enzymes are similar: they depend on a specific environment. Now, in a study recently published in ACS Synthetic Biology, researchers from Osaka University have given enzymes the kind of adaptability that the scientist in the kitchen lacks, a goal that has remained elusive for over 30 years.

Enzymes perform impressive functions, enabled by the unique arrangement of their constituent amino acids, but usually only within a specific cellular environment. When that environment changes, the enzyme rarely functions well, if at all. A long-standing research goal has therefore been to retain or even improve enzyme function in different environments, such as conditions favorable for biofuel production. Traditionally, such work has involved extensive experimental trial and error, with little assurance of reaching an optimal result.

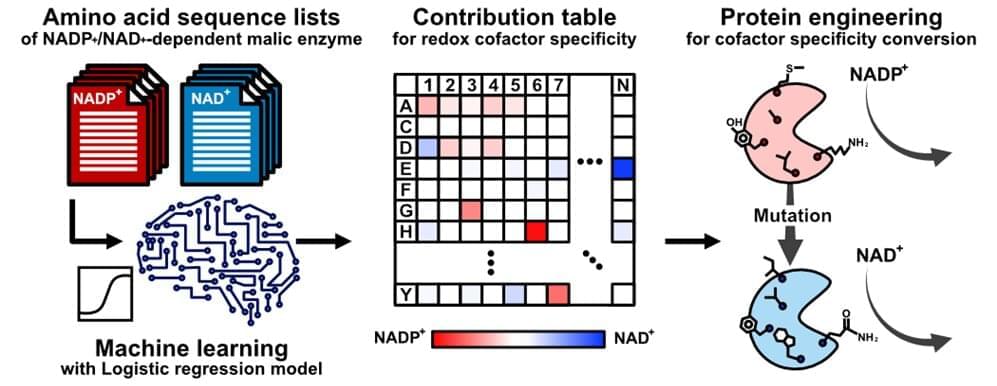

Artificial intelligence can reduce this trial and error, but it still relies on experimentally determined crystal structures of enzymes, which may be unavailable or of limited use. As a result, “the pertinent amino acids one should mutate in the enzyme might be only best-guesses,” says Teppei Niide, co-senior author. “To solve this problem, we devised a methodology of ranking amino acids that depends only on the widely available amino acid sequence of analogous enzymes from other living species.”