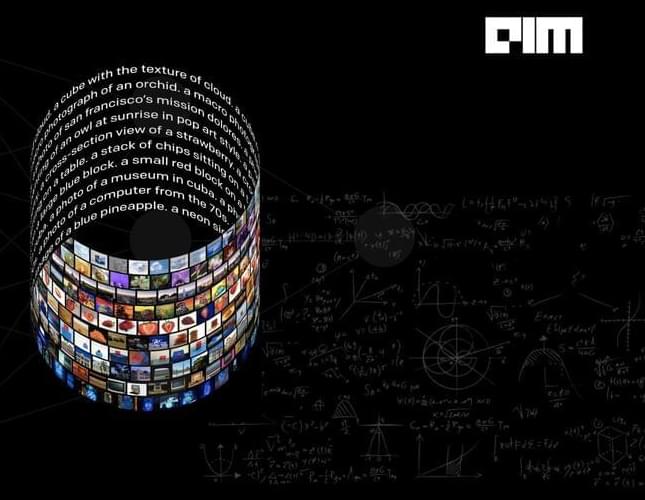

In 2020, OpenAI introduced GPT-3 and, a year later, DALL·E, a 12-billion-parameter version of GPT-3. DALL·E was trained to generate images from text descriptions, and the latest release, DALL·E 2, generates more realistic and accurate images at 4x the resolution. The model takes a natural-language caption and, having been trained on a dataset of text-image pairs, creates a realistic image that matches it. It can also take an existing image and create variations inspired by the original.

DALL·E 2 has learned the relationship between images and the text used to describe them, and it generates images with a process called ‘diffusion’. Diffusion starts with a pattern of random dots and gradually alters that pattern towards an image as it recognises specific aspects of that image. Diffusion models have emerged as a promising generative modelling framework and have pushed the state of the art in image and video generation. A technique called guidance is used with diffusion models to improve sample fidelity and photorealism.

The original DALL·E, by contrast, is made up of two major parts: a discrete autoencoder that represents images compactly in a compressed latent space, and a transformer that learns the correlations between language and this discrete image representation. In OpenAI's comparison, evaluators were asked to compare 1,000 image generations from each model, and DALL·E 2 was preferred over DALL·E 1 for both caption matching and photorealism.
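To make the "random dots towards an image" idea and guidance more concrete, here is a toy sketch in Python with NumPy. It is not DALL·E 2's implementation. It assumes a linear noise schedule, a deterministic DDIM-style update, and classifier-free guidance as the guidance technique. The noise predictor `toy_eps_predictor` is a hypothetical stand-in for a trained network: it returns the exact noise estimate when the "data" is a single fixed point, which keeps the example small and checkable.

```python
import numpy as np

def make_schedule(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Linear noise schedule (an assumption); returns the cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def toy_eps_predictor(x_t: np.ndarray, alpha_bar: float, target: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a trained denoiser: the exact noise estimate
    when the data distribution is the single point `target`."""
    return (x_t - np.sqrt(alpha_bar) * target) / np.sqrt(1.0 - alpha_bar)

def guided_eps(x_t, alpha_bar, cond_target, uncond_target, guidance_scale):
    """Classifier-free guidance: move the unconditional estimate towards
    (and, for scales above 1, beyond) the text-conditioned estimate."""
    eps_uncond = toy_eps_predictor(x_t, alpha_bar, uncond_target)
    eps_cond = toy_eps_predictor(x_t, alpha_bar, cond_target)
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

def sample(cond_target, uncond_target, guidance_scale=1.0, num_steps=1000, seed=0):
    """Deterministic DDIM-style sampling: start from random noise and denoise step by step."""
    rng = np.random.default_rng(seed)
    alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal(cond_target.shape)  # the initial "pattern of random dots"
    for t in reversed(range(num_steps)):
        a_t = alpha_bars[t]
        a_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = guided_eps(x, a_t, cond_target, uncond_target, guidance_scale)
        # Estimate the clean image implied by the current noise estimate.
        x0_pred = (x - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)
        # Step to the slightly less noisy sample at t-1 (at t=0 this returns x0_pred).
        x = np.sqrt(a_prev) * x0_pred + np.sqrt(1.0 - a_prev) * eps
    return x

target = np.array([0.5, -1.0, 2.0])  # hypothetical "image" the caption describes
null = np.zeros(3)                   # hypothetical unconditional target
print(sample(target, null, guidance_scale=1.0))  # lands on `target`
print(sample(target, null, guidance_scale=3.0))  # pushed past it, to 3 * target
```

With a guidance scale of 1 the sampler simply follows the conditional prediction; larger scales extrapolate away from the unconditional prediction, which in real models tends to trade sample diversity for fidelity to the caption.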

DALL·E 2 is currently only a research project and is not available in OpenAI’s API.