Frustrated by the AI industry’s claims of proving math results without offering transparency, a team of leading academics has proposed a better way

One of the biggest challenges in climate science and weather forecasting is predicting the effects of turbulence at spatial scales smaller than the resolution of atmospheric and oceanic models. Simplified sets of equations known as closure models can predict the statistics of this “subgrid” turbulence, but existing closure models are prone to dynamic instabilities or fail to account for rare, high-energy events. Now Karan Jakhar at the University of Chicago and his colleagues have applied an artificial-intelligence (AI) tool to data generated by numerical simulations to uncover an improved closure model [1]. The finding, which the researchers subsequently verified with a mathematical derivation, offers insights into the multiscale dynamics of atmospheric and oceanic turbulence. It also illustrates that AI-generated prediction models need not be “black boxes,” but can be transparent and understandable.

The team trained their AI—a so-called equation-discovery tool—on “ground-truth” data that they generated by performing computationally costly, high-resolution numerical simulations of several 2D turbulent flows. The AI selected the smallest number of mathematical functions (from a library of 930 possibilities) that, in combination, could reproduce the statistical properties of the dataset. Previously, researchers have used this approach to reproduce only the spatial structure of small-scale turbulent flows. The tool used by Jakhar and collaborators filtered for functions that correctly represented not only the structure but also energy transfer between spatial scales.
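The selection step described above is essentially sparse regression. You fit the target data with a library of candidate terms, then keep as few terms as possible. The sketch below uses sequentially thresholded least squares (STLSQ), a common technique in SINDy-style equation discovery. It is an assumed stand-in, not necessarily the method Jakhar and colleagues used. The three-term library, the threshold value, and the synthetic data are all hypothetical.

```python
import numpy as np

def stlsq(theta, target, threshold=0.1, iterations=10):
    """Sequentially thresholded least squares (generic sparse-regression sketch).

    theta:  (n_samples, n_terms) matrix of candidate functions evaluated on data
    target: (n_samples,) quantity the model must reproduce (e.g. a subgrid term)
    Returns a coefficient vector in which weak terms are pruned to exactly zero.
    """
    xi, *_ = np.linalg.lstsq(theta, target, rcond=None)   # dense initial fit
    for _ in range(iterations):
        small = np.abs(xi) < threshold                   # terms too weak to keep
        xi[small] = 0.0
        big = ~small
        if not big.any():
            break
        # Refit using only the surviving terms.
        xi[big], *_ = np.linalg.lstsq(theta[:, big], target, rcond=None)
    return xi

# Hypothetical toy data: the target depends on only two of three candidate terms.
rng = np.random.default_rng(0)
u = rng.normal(size=200)
library = np.column_stack([u, u**2, u**3])   # hypothetical candidate functions
target = 2.0 * u - 0.5 * u**3 + 0.01 * rng.normal(size=200)
coeffs = stlsq(library, target)
print(np.round(coeffs, 2))
```

Raising `threshold` prunes more aggressively and yields a shorter, more interpretable model at some cost in accuracy. A real closure-discovery pipeline would use a far larger library (930 candidates in the study) and extra criteria, such as correct energy transfer between scales, rather than a plain least-squares fit.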

They tested the performance of the resulting closure model by applying it to a computationally practical, low-resolution version of the dataset. The model accurately captured the detailed flow structures and energy transfers that appeared in the high-resolution ground-truth data. It also predicted statistically rare conditions corresponding to extreme-weather events, which have challenged previous models.

In a new study published in Physical Review Letters, researchers used machine learning to discover multiple new classes of two-dimensional memories, systems that can reliably store information despite constant environmental noise. The findings indicate that robust information storage is considerably richer than previously understood.

For decades, scientists believed there was essentially one way to achieve robust memory in such systems—a mechanism discovered in the 1980s known as Toom’s rule. All previously known two-dimensional memories with local order parameters were variations on this single scheme.
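For readers unfamiliar with Toom's rule, here is a minimal sketch of its classic North-East-Center form. In each step, every cell takes the majority value of itself, its northern neighbor, and its eastern neighbor. The sketch assumes binary cells on a periodic grid, with every cell then flipped independently by symmetric noise. The grid size, noise level, and step count are arbitrary illustrative choices, not parameters from the study.

```python
import numpy as np

def toom_step(grid, noise, rng):
    """One synchronous update of Toom's North-East-Center majority rule on a torus.

    `grid` holds 0/1 values (int8). Each cell takes the majority of itself, the
    cell above it, and the cell to its right; then each cell is flipped
    independently with probability `noise`.
    """
    north = np.roll(grid, 1, axis=0)    # value of the cell in the row above
    east = np.roll(grid, -1, axis=1)    # value of the cell in the column to the right
    majority = (grid + north + east >= 2).astype(np.int8)
    flips = rng.random(grid.shape) < noise
    return np.where(flips, 1 - majority, majority).astype(np.int8)

# Store the bit "1" everywhere, then let noise act for a while.
rng = np.random.default_rng(1)
grid = np.ones((64, 64), dtype=np.int8)
for _ in range(200):
    grid = toom_step(grid, noise=0.02, rng=rng)
fraction_ones = grid.mean()
print(f"fraction of cells still 1: {fraction_ones:.3f}")
```

Despite the steady noise, the vast majority of cells still hold the stored bit. The asymmetric north/east neighborhood is what lets Toom's rule erode islands of errors instead of letting them grow.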

The challenge lies in the sheer scale of possibilities. The number of potential local update rules for a simple two-dimensional cellular automaton is astronomically large, far greater than the estimated number of atoms in the observable universe. Traditional methods of discovery through exhaustive search or hand-design are therefore impractical at this scale.
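As a rough illustration of that scale, here is a short count. It assumes binary cells and a 3×3 neighborhood. The source does not say which neighborhood or number of states it had in mind, so these are assumptions. A deterministic local rule is a lookup table from every neighborhood configuration to an output state, which gives 2^(2^9) = 2^512 possible rules.

```python
import math

def count_local_rules(states: int, neighborhood_size: int) -> int:
    """Number of distinct deterministic local update rules.

    A rule maps each of the states ** neighborhood_size possible neighborhood
    configurations to one of `states` output values.
    """
    configurations = states ** neighborhood_size
    return states ** configurations

# Assumed setting: binary cells, 3x3 neighborhood (9 cells).
rules = count_local_rules(states=2, neighborhood_size=9)
print(f"about 10^{math.log10(rules):.1f} rules")  # prints: about 10^154.1 rules
```

That is roughly 10^154 rules, compared with the commonly cited estimate of about 10^80 atoms in the observable universe.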

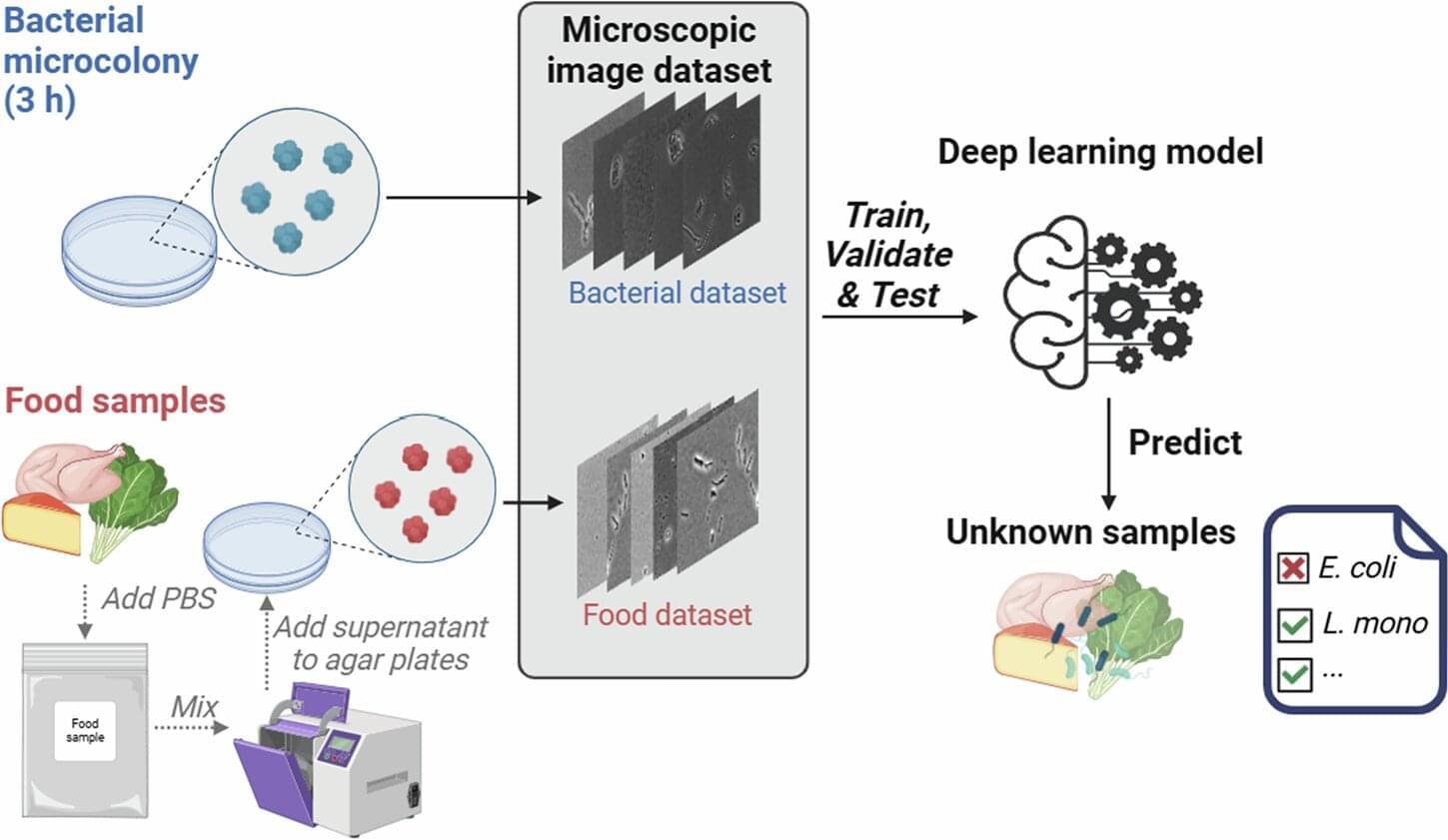

Researchers have significantly enhanced an artificial intelligence tool used to rapidly detect bacterial contamination in food by eliminating misclassifications of food debris that looks like bacteria. Current methods to detect contamination of foods such as leafy greens, meat and cheese, which typically involve cultivating bacteria, often require specialized expertise and are time-consuming—taking several days to a week.

Luyao Ma, an assistant professor at Oregon State University, and her collaborators from the University of California, Davis, Korea University and Florida State University have developed a deep learning-based model for rapid detection and classification of live bacteria using digital images of bacterial microcolonies. The method enables reliable detection within three hours. The findings are published in the journal npj Science of Food.

Their latest breakthrough involves training the model to distinguish bacteria from microscopic food debris to improve its accuracy. A model trained only on bacteria misclassified debris as bacteria more than 24% of the time. The enhanced model, trained on both bacteria and debris, eliminated misclassifications.

In December, the artificial intelligence company Anthropic unveiled its newest tool, Interviewer, used in its initial implementation “to help understand people’s perspectives on AI,” according to a press release. As part of Interviewer’s launch, Anthropic publicly released 1,250 anonymized interviews conducted on the platform.

A proof-of-concept demonstration by Tianshi Li of the Khoury College of Computer Sciences at Northeastern University, however, presents a method that uses widely available large language models (LLMs) to de-anonymize these interviews, linking responses to the real people who participated. The paper is published on the arXiv preprint server.

“Old systems of the past are collapsing, and new systems of the future are still to be born. I call this moment the great progression.”

We are at a tipping point. In the next 25 years, technologies like AI, clean energy, and bioengineering are poised to reshape society on a scale few can imagine.

Peter Leyden draws on decades of observing technological revolutions and historical patterns to show how old systems collapse, new ones rise, and humanity faces both extraordinary risk and unprecedented opportunity.

0:00 We’re on the cusp of an era of progress.

Want to understand how artificial intelligence could change your job? Look to radiology as a clue.

Radiology has come up multiple times as an example of a field that’s been impacted by AI without replacing the need for human workers.

Lex Fridman Podcast full episode: https://www.youtube.com/watch?v=ykY69lSpDdo.

GUEST BIO:

Ray Kurzweil is an author, inventor, and futurist.
