“Cancer and other complex diseases arise from the interplay of various biological factors, for example, at the DNA, RNA, and protein levels,” explains the author. Characteristic changes at these levels — such as the amount of HER2 protein produced in breast or stomach cancer — are often recorded, but typically not yet analyzed in conjunction with all other therapy-relevant factors.

This is where Flexynesis comes in. “Comparable tools so far have often been either difficult to use, or only useful for answering certain questions,” says the author. “Flexynesis, by contrast, can answer various medical questions at the same time: for example, what type of cancer is involved, what drugs are particularly effective in this case, and how these will affect the patient’s chances of survival.” The tool also helps identify suitable biomarkers for diagnosis and prognosis and, when metastases of unknown origin are discovered, helps locate the primary tumor. “This makes it easier to develop comprehensive and personalized treatment strategies for all kinds of cancer patients,” says the author.

Nearly 50 new cancer therapies are approved every year. This is good news. “But for patients and their treating physicians, it is becoming increasingly difficult to keep track and to select the treatment methods from which the people affected — each with their very individual tumor characteristics — will benefit the most,” says the senior author. The researcher has long been developing tools that use artificial intelligence to make diagnoses more precise and to identify the therapy best suited to each individual patient.

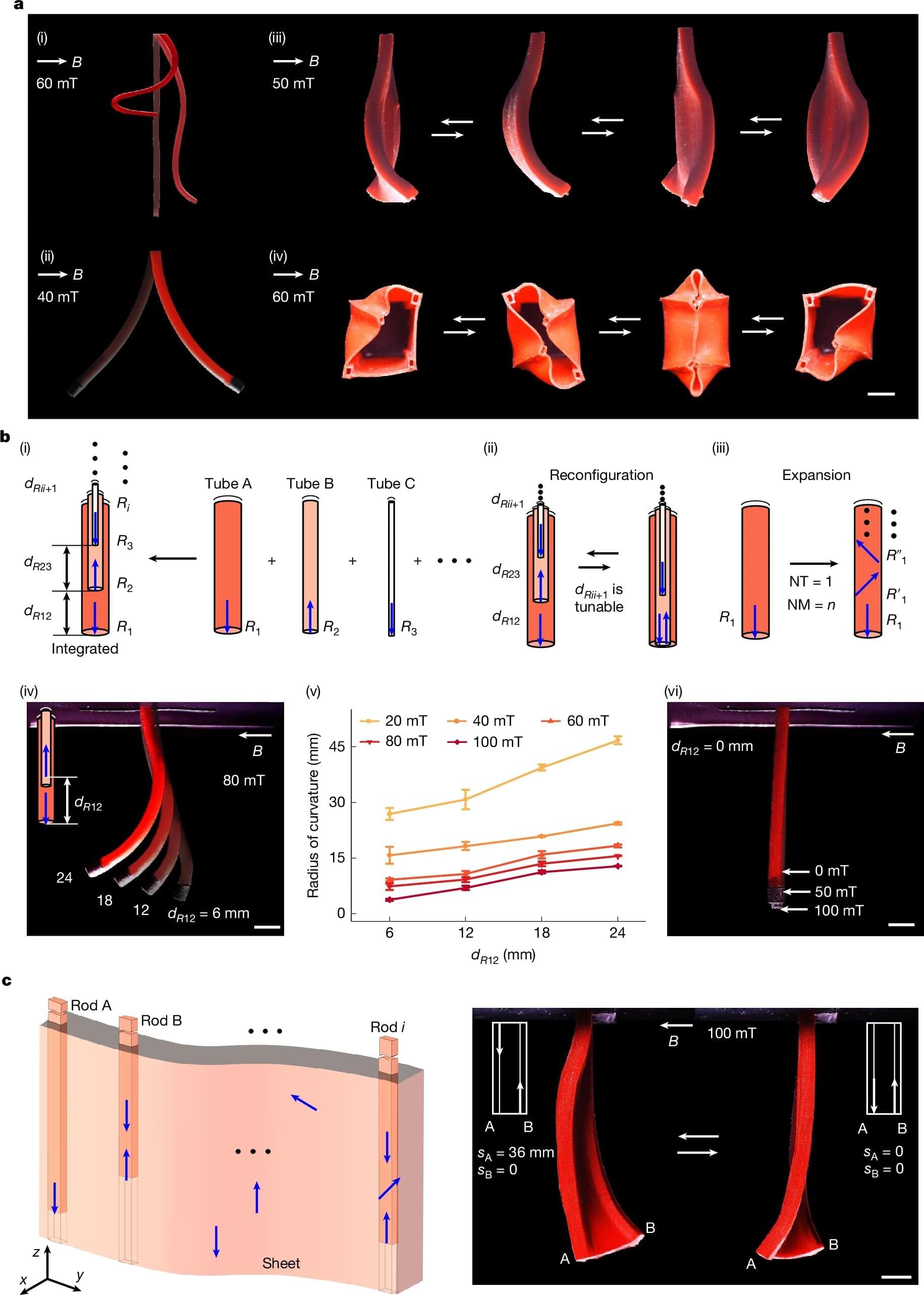

The team has now developed a toolkit called Flexynesis, which does not rely solely on classical machine learning but also uses deep learning to evaluate very different types of data simultaneously, for example multi-omics data as well as specially processed texts and images such as CT or MRI scans. “In this way, it enables doctors to make better diagnoses and prognoses and to develop more precise treatment strategies for their patients,” says the author. Flexynesis is described in detail in a paper published in “Nature Communications.”
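For readers who want a concrete picture of how one model can evaluate several data types at once and answer more than one question, the following is a minimal sketch of that general pattern. It is not Flexynesis code and does not use its API: the data-type names, feature counts, layer sizes, and task heads are all hypothetical, and the weights are random and untrained, so the outputs carry no medical meaning.

```python
import math
import random

random.seed(0)

def linear(n_in, n_out):
    """Return a randomly initialised weight matrix (n_out rows, n_in columns)."""
    return [[random.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]

def matvec(weights, x):
    """Multiply a weight matrix by a feature vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def relu(x):
    return [max(0.0, v) for v in x]

def softmax(x):
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical feature counts per data type (illustrative only).
MODALITY_DIMS = {"rna": 8, "mutation": 5, "protein": 3}
LATENT_DIM = 4
N_CANCER_TYPES = 3

# One encoder per data type, each mapping into a latent space of the same size.
encoders = {name: linear(dim, LATENT_DIM) for name, dim in MODALITY_DIMS.items()}
fused_dim = LATENT_DIM * len(MODALITY_DIMS)

# Several task heads share the same fused representation.
heads = {
    "cancer_type": linear(fused_dim, N_CANCER_TYPES),  # classification
    "drug_response": linear(fused_dim, 1),             # regression
    "survival_risk": linear(fused_dim, 1),             # risk score
}

def predict(sample):
    """Encode each data type, concatenate the embeddings, then run every head."""
    fused = []
    for name in MODALITY_DIMS:
        fused.extend(relu(matvec(encoders[name], sample[name])))
    return {
        "cancer_type": softmax(matvec(heads["cancer_type"], fused)),
        "drug_response": matvec(heads["drug_response"], fused)[0],
        "survival_risk": matvec(heads["survival_risk"], fused)[0],
    }

# A made-up patient sample with one feature vector per data type.
sample = {
    name: [random.random() for _ in range(dim)]
    for name, dim in MODALITY_DIMS.items()
}
result = predict(sample)
print(result)
```

In a real setting the encoders and heads would be trained jointly, so that the shared representation has to be useful for every task at the same time.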

“We are running multiple translational projects with medical doctors who want to identify biomarkers from multi-omics data that align with disease outcomes,” says the first and co-corresponding author of the publication. “Although many deep-learning-based methods have been published for this purpose, most have turned out to be inflexible, tied to specific modeling tasks, or difficult to install and reuse. That gap motivated us to build Flexynesis as a proper toolkit, which is flexible for different modeling tasks and packaged on PyPI, Guix, Docker, Bioconda, and Galaxy, so others can readily apply it in their own pipelines.”