Google has patched flaws in Gemini AI: prompt-injection and search-injection bugs that put user privacy at risk and could have enabled theft of cloud data.

AI scientists are emerging computational systems that serve as collaborative partners in discovery. These systems remain difficult to build because they are bespoke, tied to rigid workflows, and lack shared environments that unify tools, data, and analyses into a common ecosystem. In omics, unified ecosystems have transformed research by enabling interoperability, reuse, and community-driven development; AI scientists require comparable infrastructure. We present ToolUniverse, an ecosystem for building AI scientists from any language or reasoning model, whether open or closed. ToolUniverse standardizes how AI scientists identify and call tools, integrating more than 600 machine learning models, datasets, APIs, and scientific packages for data analysis, knowledge retrieval, and experimental design. It automatically refines tool interfaces for correct use by AI scientists, creates new tools from natural language descriptions, iteratively optimizes tool specifications, and composes tools into agentic workflows. In a case study of hypercholesterolemia, ToolUniverse was used to create an AI scientist that identified a potent analog of a drug with favorable predicted properties. The open-source ToolUniverse is available at https://aiscientist.tools.
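The abstract does not spell out ToolUniverse's actual interface, so the following is only a minimal sketch, under stated assumptions, of what "standardizing how AI scientists identify and call tools" can look like in general. Each tool carries a declarative spec (name, description, typed parameters), a model finds tools by searching their descriptions, and every call is a structured `{"name": ..., "arguments": {...}}` request that is validated against the spec before it runs. All names here (`ToolSpec`, `ToolRegistry`, the `molar_mass` tool) are hypothetical and are not ToolUniverse's API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


# Hypothetical sketch: names and structure are illustrative, not ToolUniverse's API.
@dataclass
class ToolSpec:
    name: str
    description: str
    parameters: dict[str, type]  # argument name -> expected Python type
    func: Callable[..., Any]     # the function that does the actual work
    required: list[str] = field(default_factory=list)


class ToolRegistry:
    """Keeps every tool behind one lookup-and-call interface."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def find(self, keyword: str) -> list[str]:
        """Names of tools whose description mentions `keyword` (case-insensitive)."""
        kw = keyword.lower()
        return sorted(n for n, s in self._tools.items() if kw in s.description.lower())

    def call(self, request: dict[str, Any]) -> Any:
        """Validate a {"name": ..., "arguments": {...}} request against the spec, then run it."""
        name = request.get("name")
        spec = self._tools.get(name)
        if spec is None:
            raise KeyError(f"unknown tool: {name!r}")
        args = request.get("arguments", {})
        missing = [p for p in spec.required if p not in args]
        if missing:
            raise ValueError(f"missing required arguments: {missing}")
        for key, value in args.items():
            expected = spec.parameters.get(key)
            if expected is None:
                raise ValueError(f"unexpected argument: {key!r}")
            if not isinstance(value, expected):
                raise TypeError(f"{key!r} must be {expected.__name__}")
        return spec.func(**args)


# Usage with a hypothetical tool: molar mass (g/mol) from element counts.
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "O": 15.999}

registry = ToolRegistry()
registry.register(ToolSpec(
    name="molar_mass",
    description="Compute the molar mass (g/mol) of a molecule from element counts",
    parameters={"counts": dict},
    func=lambda counts: sum(ATOMIC_MASS[el] * n for el, n in counts.items()),
    required=["counts"],
))

print(registry.find("molar"))  # ['molar_mass']
ethanol = registry.call({"name": "molar_mass", "arguments": {"counts": {"C": 2, "H": 6, "O": 1}}})
print(round(ethanol, 3))  # 46.069
```

The design choice worth noting is that validation lives in the registry rather than in each tool, so a model that emits a malformed call gets a clear error before any tool code runs.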

More users will be able to use advanced, personalized AI in their daily lives, a further step toward AI democratization.

Artificial people may put a lot of actors out of work.

Tilly Norwood looks and sounds real, but she’s not real at all.

Created by Eline Van Der Velden, CEO of the AI production company Particle6, the “actress” has drawn interest from studios, and talent agents are looking to sign her.

According to Variety, Van Der Velden said at the Zurich Summit that studio interest has spiked since Tilly’s launch and that agency representation is expected soon. If signed, Tilly would be one of the first AI-generated actresses to have talent representation.

Whether an asteroid spins neatly on its axis or tumbles chaotically, and how fast it rotates, has been shown to depend on how often it has experienced collisions. The findings, presented at the recent EPSC-DPS2025 Joint Meeting in Helsinki, are based on data from the European Space Agency’s Gaia mission. They offer a way to determine an asteroid’s physical properties, information that is vital for successfully deflecting asteroids on a collision course with Earth.

“By leveraging Gaia’s unique dataset, advanced modeling and A.I. tools, we’ve revealed the hidden physics shaping asteroid rotation, and opened a new window into the interiors of these ancient worlds,” said Dr. Wen-Han Zhou of the University of Tokyo, who presented the results at EPSC-DPS2025.

During its survey of the entire sky, the Gaia mission produced a huge dataset of asteroid rotations derived from their light curves, which record how the light reflected by an asteroid changes over time as it rotates. When the data are plotted as rotation period versus diameter, something startling stands out: a gap, or dividing line, that appears to split the asteroids into two distinct populations.

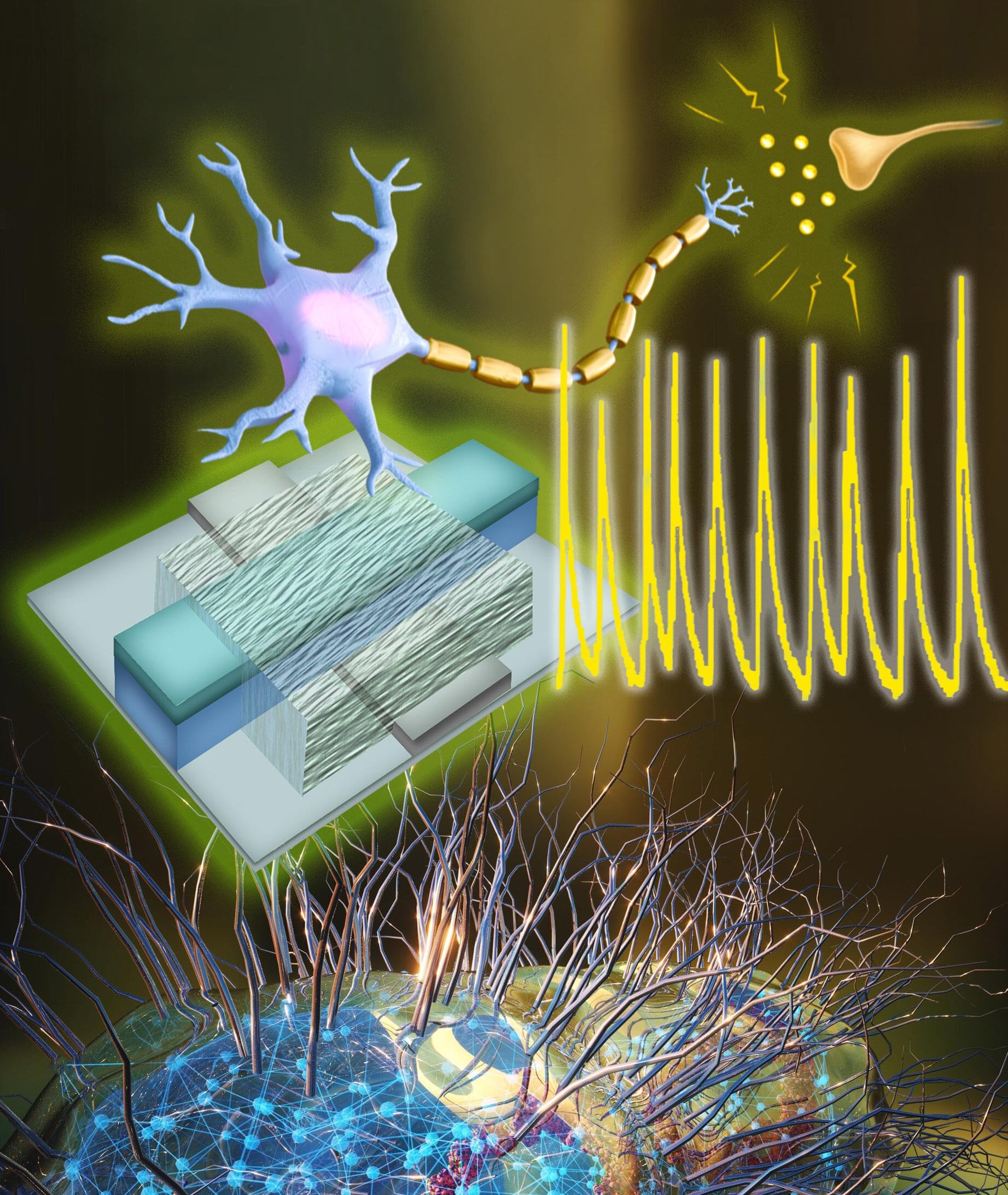

A team of engineers at the University of Massachusetts Amherst has created an artificial neuron whose electrical behavior closely mirrors that of biological neurons. The work builds on the team’s earlier research with protein nanowires produced by electricity-generating bacteria, and it points toward highly energy-efficient computers built on biological principles that could interface directly with living cells.

“Our brain processes an enormous amount of data,” says Shuai Fu, a graduate student in electrical and computer engineering at UMass Amherst and lead author of the study published in Nature Communications. “But its power usage is very, very low, especially compared to the amount of electricity it takes to run a Large Language Model, like ChatGPT.”

The human body is over 100 times more electrically efficient than a computer’s electrical circuit. The human brain is made up of billions of neurons, specialized cells that send and receive electrical impulses throughout the body. While your brain runs on only about 20 watts to, say, write a story, an LLM might draw well over a megawatt of power to do the same task.
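Taking the two figures in the previous paragraph at face value (about 20 W for the brain, well over 1 MW for an LLM), the ratio of their power draws works out as follows:

```latex
\frac{P_{\text{LLM}}}{P_{\text{brain}}} > \frac{1\,\text{MW}}{20\,\text{W}} = \frac{10^{6}\,\text{W}}{20\,\text{W}} = 5 \times 10^{4}
```

Because both numbers are power (energy per unit time), the same factor applies to total energy only if the brain and the LLM spend the same amount of time on the task.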