Category: robotics/AI – Page 120

Elon Musk on DOGE, Optimus, Starlink Smartphones, Evolving with AI, Why the West is Imploding

Questions to inspire discussion.

🧠 Q: What improvements does Tesla’s AI5 chip offer over AI4? A: AI5 provides a 40x improvement in silicon, addressing core limitations of AI4, with 8x more compute, 9x more memory, 5x more memory bandwidth, and the ability to easily handle mixed-precision models.

📱 Q: How will Starlink-enabled smartphones revolutionize connectivity? A: Starlink-enabled smartphones will allow direct, high-bandwidth connectivity from satellites to phones. This requires hardware changes in phones and collaboration between satellite providers and handset makers.

🌐 Q: What is Elon Musk’s vision for Starlink as a global carrier? A: Musk envisions Starlink as a global carrier that works worldwide, offering users a comprehensive solution for high-bandwidth service at home and direct-to-cell service through one direct deal.

🚀 Q: What are the expected capabilities of SpaceX’s Starship? A: Starship is projected to demonstrate full reusability next year, with both the booster and the ship being caught. It is expected to carry over 100 tons to orbit and is five times bigger than Falcon Heavy.

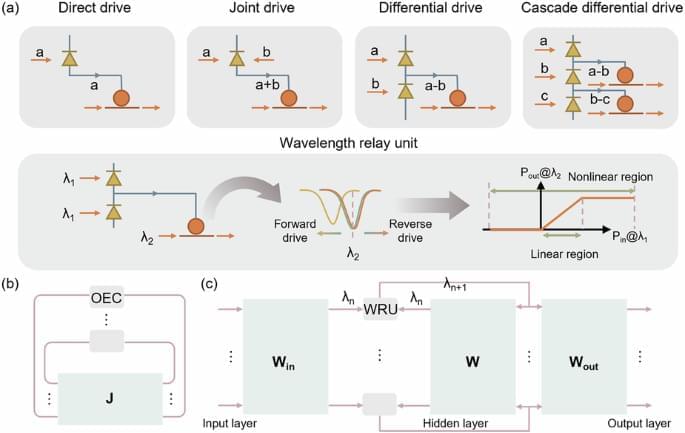

AI and Compute.

Elon Spills The Beans On Tesla AI, Optimus & Autonomy

Solving The Money Problem

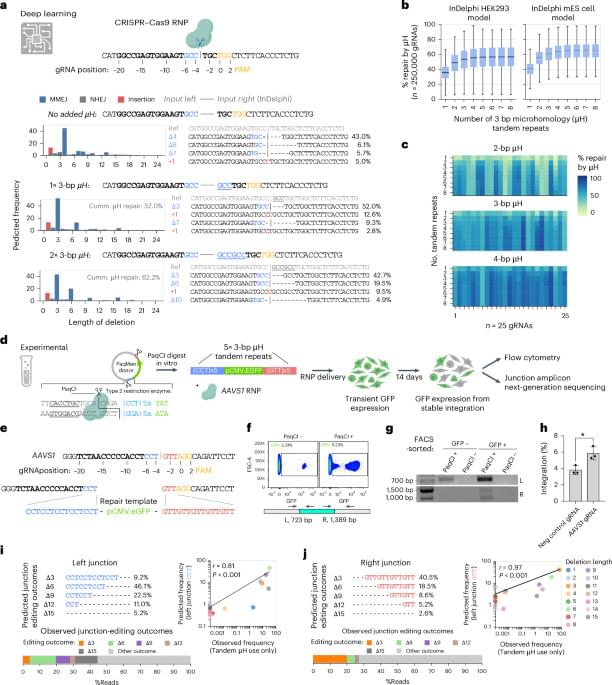

Rethinking how our brains build the neural networks underlying motor memories

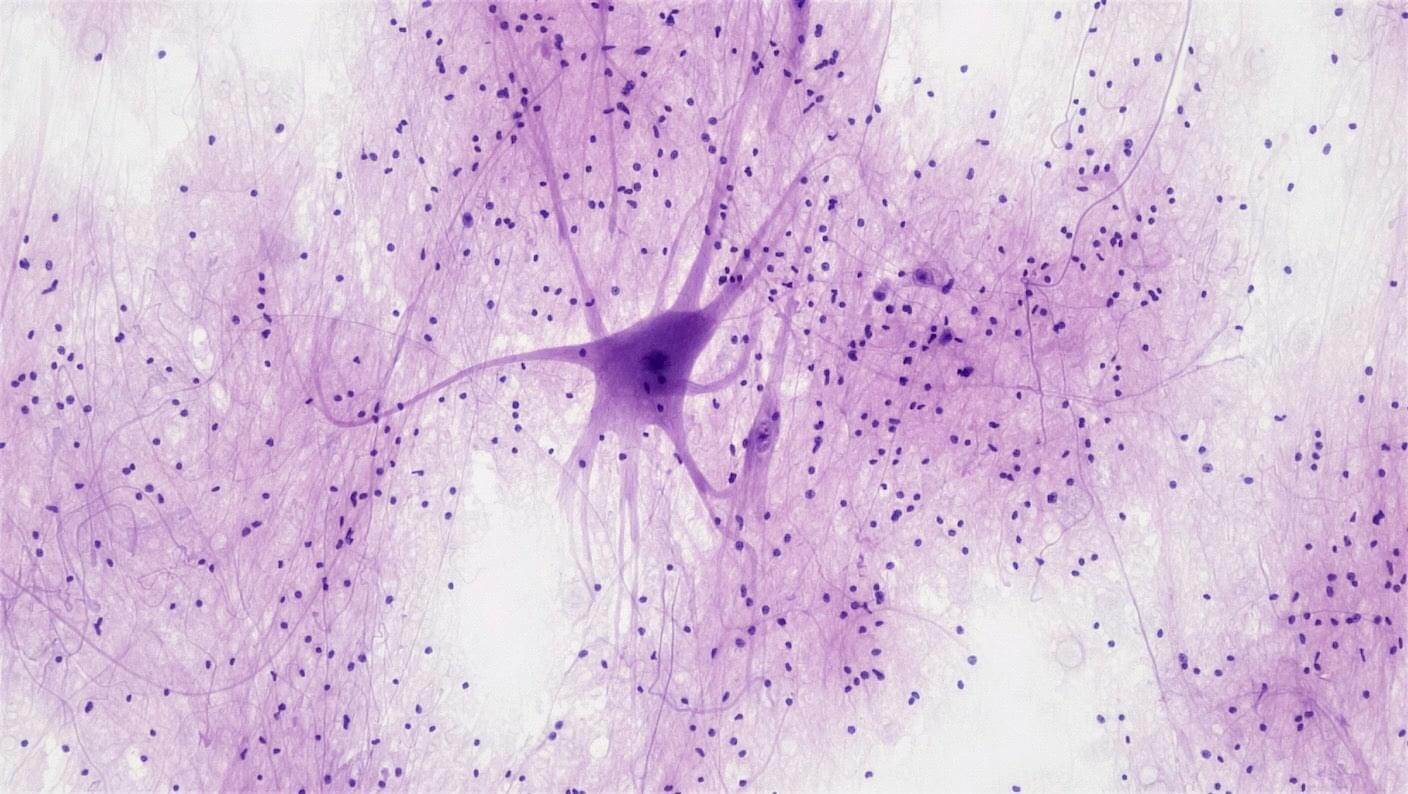

For every motor skill you’ve ever learned, whether it’s walking or watchmaking, there is a small ensemble of neurons in your brain that makes that movement happen. Our brains trigger these ensembles (what we sometimes call “muscle memories”) to get our bodies cooking, showering, typing, and doing every other voluntary thing we do.

This Crawling Robot Is Made With Living Brain and Muscle Cells

Watching the robot crawl around is amusing, but the study’s main goal is to see if a biohybrid robot can form a sort of long-lasting biological “mind” that directs movement. Neurons are especially sensitive cells that rapidly stop working or even die outside of a carefully controlled environment. Using blob-like amalgamations of different types of neurons to direct muscles, the sponge-bots retained their crawling ability for over two weeks.

Scientists have built biohybrid bots that use electricity or light to control muscle cells. Some mimic swimming, walking, and grabbing motions. Adding neurons could further fine-tune their activity and flexibility and even bestow a sort of memory for repeated tasks.

These biohybrid bots offer a unique way to study motion, movement disorders, and drug development without lab animals. Because their components are often compatible with living bodies, they could be used for diagnostics, drug delivery, and other medical scenarios.