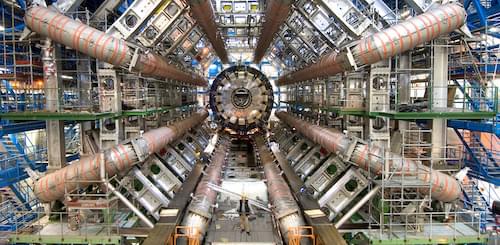

Europe now has an exascale supercomputer that runs entirely on renewable energy. Of particular interest: one of the 30 inaugural projects for the machine focuses on realistic simulations of biological neurons (see https://www.fz-juelich.de/en/news/effzett/2024/brain-research).

[https://www.nature.com/articles/d41586-025-02981-1](https://www.nature.com/articles/d41586-025-02981-1)

Large language models (LLMs) are built on artificial neural networks inspired by the way the brain works. Dr. Thorsten Hater (JSC) focuses on the natural system that inspired those networks: the neurons that communicate with each other in the human brain. He wants to use the exascale computer JUPITER to perform even more realistic simulations of the behaviour of individual neurons.

Many models treat a neuron merely as a point that is connected to other points. The spikes, or electrical signals, travel along these connections. “Of course, this is overly simplified,” says Hater. “In our model, the neurons have a spatial extension, as they do in reality. This allows us to describe many processes in detail on the molecular level. We can calculate the electric field across the entire cell. And we can thus show how signal transmission varies right down to the individual neuron. This gives us a much more realistic picture of these processes.”
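To make the point-versus-extended distinction concrete, here is a minimal sketch in plain Python. It is not Arbor code and not Hater's model: it only contrasts a single-voltage "point" neuron with a passive cable split into compartments. The function names, the arbitrary units, and all parameter values (`g_leak`, `g_axial`, `n_comp`, and so on) are assumptions chosen for illustration, and active ion channels and spikes are left out entirely.

```python
def simulate_point_neuron(current, steps=2000, dt=0.1,
                          c_m=1.0, g_leak=0.1, e_leak=-65.0):
    """Point model: the whole cell is described by one membrane voltage."""
    v = e_leak
    for _ in range(steps):
        # Leak pulls v back to rest; the injected current pushes it away.
        dv = (-g_leak * (v - e_leak) + current) / c_m
        v += dt * dv  # explicit Euler step
    return v


def simulate_cable(current, n_comp=20, steps=2000, dt=0.1,
                   c_m=1.0, g_leak=0.1, g_axial=1.0, e_leak=-65.0):
    """Spatially extended model: a chain of compartments with sealed ends.

    Current is injected into compartment 0 only. Neighbouring compartments
    exchange charge through an axial conductance (hypothetical value).
    """
    v = [e_leak] * n_comp
    for _ in range(steps):
        new_v = v[:]
        for i in range(n_comp):
            axial = 0.0
            if i > 0:
                axial += g_axial * (v[i - 1] - v[i])
            if i < n_comp - 1:
                axial += g_axial * (v[i + 1] - v[i])
            injected = current if i == 0 else 0.0
            dv = (-g_leak * (v[i] - e_leak) + axial + injected) / c_m
            new_v[i] = v[i] + dt * dv  # explicit Euler step
        v = new_v
    return v


if __name__ == "__main__":
    v_point = simulate_point_neuron(current=1.0)
    v_cable = simulate_cable(current=1.0)
    print("point neuron voltage:", round(v_point, 3))
    print("cable, injection site:", round(v_cable[0], 3))
    print("cable, far end:", round(v_cable[-1], 3))
```

The point model returns a single number, while the cable returns one voltage per compartment, and the depolarisation fades with distance from the injection site. That position dependence is the kind of detail a point model cannot express.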

For the simulations, Hater uses a program called Arbor, which allows more than two million individual cells to be interconnected computationally. Such models of natural neural networks are useful, for example, in the development of drugs to combat neurodegenerative diseases like Alzheimer’s. The physicist and software developer would like to use the exascale computer to simulate and study the changes that take place in the brain's neurons.