A trio of scientists who developed the combination drug Trikafta are among the winners of five major awards in life sciences, physics and mathematics.

What would happen if, instead of buying the newest iPhone every time Apple launches one, you bought that same amount of Apple stock? There is a tweet floating around saying that if you had bought Apple shares instead of an iPhone every time they came out, you’d have hundreds of millions of dollars. The math is off (if you’d spent $20k on Apple stock when the rumors of the iPhone first started, you’d have $1.5 million today, at best) but in any case – it’d only make sense if you were clairvoyant in 2007, and knew when Apple would be launching phones, and at which price.

I figured a fairer way of calculating it would be to compare two options: buying a top-of-the-line iPhone every time Apple releases a new one, or spending the same amount on Apple stock each time. If you had bought the phones, by my calculations, you’d have spent around $16,000 on iPhones over the years (that’s around $20,000 in today’s dollars). If you’d bought Apple shares instead, you’d have $147,000 or so today, a profit of around $131,000.
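As a quick check of the arithmetic, here is a minimal sketch that uses only the two rounded figures quoted above. The function name `stock_vs_phones` is made up for illustration, and the return multiple is a derived number, not one stated in the original calculation.

```python
def stock_vs_phones(spent, value_today):
    """Return (profit, multiple) for money spent versus its value today."""
    profit = value_today - spent
    multiple = value_today / spent
    return profit, multiple


# Rounded figures from the text: about $16,000 spent, about $147,000 in stock today.
profit, multiple = stock_vs_phones(16_000, 147_000)
print(f"Profit: ${profit:,}")
print(f"Value today is roughly {multiple:.1f}x the amount spent")
```

The sketch compares nominal dollars; using the inflation-adjusted $20,000 as the amount spent would give a smaller multiple.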

When Isaac Newton inscribed onto parchment his now-famed laws of motion in 1687, he could only have hoped we’d be discussing them three centuries later.

Writing in Latin, Newton outlined three universal principles describing how the motion of objects is governed in our Universe, which have been translated, transcribed, discussed and debated at length.

But according to a philosopher of language and mathematics, we might have been interpreting Newton’s precise wording of his first law of motion slightly wrong all along.

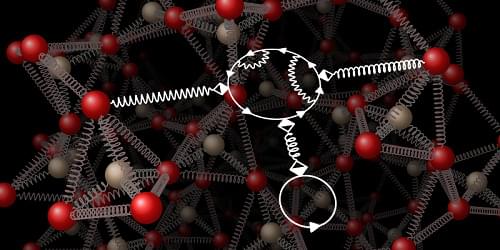

A new set of equations captures the dynamical interplay of electrons and vibrations in crystals and forms a basis for computational studies.

Although a crystal is a highly ordered structure, it is never at rest: its atoms are constantly vibrating about their equilibrium positions—even down to zero temperature. Such vibrations are called phonons, and their interaction with the electrons that hold the crystal together is partly responsible for the crystal’s optical properties, its ability to conduct heat or electricity, and even its vanishing electrical resistance if it is superconducting. Predicting, or at least understanding, such properties requires an accurate description of the interplay of electrons and phonons. This task is formidable given that the electronic problem alone—assuming that the atomic nuclei stand still—is already challenging and lacks an exact solution. Now, based on a long series of earlier milestones, Gianluca Stefanucci of the Tor Vergata University of Rome and colleagues have made an important step toward a complete theory of electrons and phonons [1].

At a low level of theory, the electron–phonon problem is easily formulated. First, one considers an arrangement of massive point charges representing electrons and atomic nuclei. Second, one lets these charges evolve under Coulomb’s law and the Schrödinger equation, possibly introducing some perturbation from time to time. The mathematical representation of the energy of such a system, consisting of kinetic and interaction terms, is the system’s Hamiltonian. However, knowing the exact theory is not enough because the corresponding equations are only formally simple. In practice, they are far too complex—not least owing to the huge number of particles involved—so that approximations are needed. Hence, at a high level, a workable theory should provide the means to make reasonable approximations yielding equations that can be solved on today’s computers.
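For orientation, the Hamiltonian described in words above can be written out in its standard textbook form. This is a sketch under assumed notation, not necessarily the formulation used in the work discussed: lowercase $\mathbf{r}_i, \mathbf{p}_i$ denote electron positions and momenta, uppercase $\mathbf{R}_I, \mathbf{P}_I$ those of the nuclei, $m$ is the electron mass, and $M_I$ and $Z_I$ are the mass and atomic number of nucleus $I$ (SI units).

```latex
\begin{aligned}
H ={}& \sum_i \frac{\mathbf{p}_i^2}{2m}
     + \sum_I \frac{\mathbf{P}_I^2}{2M_I}
     + \frac{1}{2}\sum_{i \neq j}
       \frac{e^2}{4\pi\varepsilon_0\,|\mathbf{r}_i - \mathbf{r}_j|} \\
     &- \sum_{i,I}
       \frac{Z_I e^2}{4\pi\varepsilon_0\,|\mathbf{r}_i - \mathbf{R}_I|}
     + \frac{1}{2}\sum_{I \neq J}
       \frac{Z_I Z_J e^2}{4\pi\varepsilon_0\,|\mathbf{R}_I - \mathbf{R}_J|}
\end{aligned}
```

The first two terms are the kinetic energies of electrons and nuclei; the remaining three are the electron–electron, electron–nucleus, and nucleus–nucleus Coulomb interactions. Any time-dependent perturbation would be added on top of this.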

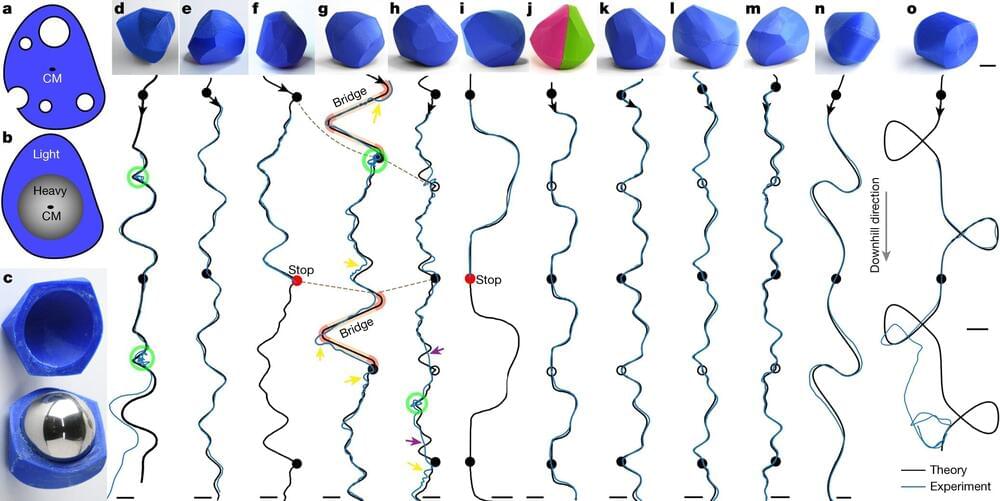

Normally, when we think of a rolling object, we tend to imagine a torus (like a bicycle wheel) or a sphere (like a tennis ball) that will always follow a straight path when rolling. However, the world of mathematics and science is always open to exploring new ideas and concepts. This is why researchers have been studying shapes, like oloids, sphericons and more, which do not roll in straight lines.

All these funky shapes are really interesting to researchers as they can show us new ways to move objects around smoothly and efficiently. For example, imagine reducing the energy required to make a toy robot move, or mixing ingredients more thoroughly with a unique-looking spoon. While these peculiar shapes have been studied before, scientists have now taken it a step further.

Consider a game where you draw a path on a tilted table—similar to tilting a pinball table to make the ball go in a particular direction. Now, try to come up with a 3D object that, when placed at the top of the table, will roll down and exactly follow that path, instead of just going straight down. There are a few other rules of this game: the table needs to be inclined slightly (and not too much), there should be no slipping during rolling, and the initial orientation of the object can be chosen at launch. Plus, the path you draw must never go uphill and must be periodic. It must also consist of identical repeating segments—somewhat like in music rhythm patterns.

Summary: Researchers delved deep into the mysteries of synchronization in complex systems, uncovering how certain elements effortlessly fall into or out of sync. This dance of coordination can be observed from humans clapping in rhythm to the synchronicity of heart cells.

By studying “walks” through networks, the team discovered the role of convergent walks in diminishing the quality of synchronization. These findings could revolutionize our understanding of everything from power grid stability to brain functions and social media dynamics.

Diagonal arguments are a powerful tool in maths and appear in several fundamental results, such as Cantor’s original diagonal argument (there exist uncountable sets, or “some infinities are bigger than other infinities”), Turing’s Halting Problem, Gödel’s incompleteness theorems, Russell’s Paradox, the Liar Paradox, and even the Y Combinator.

In this video, I try and motivate what a general diagonal argument looks like, from first principles. It should be accessible to anyone who’s comfortable with functions and sets.
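To give a flavour of the pattern in terms of functions and sets, here is a small sketch of Cantor’s diagonal construction on a finite set. It is an illustration written for this description, not code from the video, and the helper names are made up. For any function f from a set S to its subsets, the “diagonal” set D = {x in S : x not in f(x)} is never one of the values of f, and the brute-force check confirms this for every possible f on a three-element set.

```python
from itertools import combinations, product


def diagonal_set(domain, f):
    """Return D = {x in domain : x not in f(x)}."""
    return frozenset(x for x in domain if x not in f(x))


def powerset(domain):
    """Return all subsets of domain as frozensets."""
    items = list(domain)
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]


def diagonal_always_missed(domain):
    """Check every f: domain -> P(domain); True if D is never in f's image."""
    items = list(domain)
    subsets = powerset(items)
    for images in product(subsets, repeat=len(items)):
        f = dict(zip(items, images))
        if diagonal_set(items, f.__getitem__) in images:
            return False
    return True


# 8 subsets of {0, 1, 2}, so 8**3 = 512 candidate functions are checked.
print(diagonal_always_missed({0, 1, 2}))
```

The check cannot fail: if D were equal to f(x) for some x, then x would be in D exactly when it is not in D. That contradiction is the core of the argument.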

The main result that I’m secretly building up towards is Lawvere’s theorem in category theory [https://link.springer.com/chapter/10.1007/BFb0080769], with inspiration from this motivating paper by Yanofsky [https://www.jstor.org/stable/3109884].

This video will be followed by a more detailed video on just Gödel’s incompleteness theorems, building on the idea from this video.

====Timestamps====

00:00 Introduction.

00:59 A first look at uncountability.

05:04 Why generalise?

06:53 Mathematical patterns.

07:40 Working with functions and sets.

11:40 Second version of Cantor’s Proof.

13:40 Powersets and Cantor’s theorem in its generality.

15:38 Proof template of Diagonal Argument.

16:40 The world of Computers.

21:05 Gödel numbering.

23:05 An amazing program (setup of the Halting Problem).

25:05 Solution to the Halting Problem.

29:49 Comparing two diagonal arguments.

31:13 Lawvere’s theorem.

32:49 Diagonal function as a way for encoding self-reference.

35:11 Summary of video.

35:44 Bonus treat — Russell’s Paradox.

CORRECTIONS