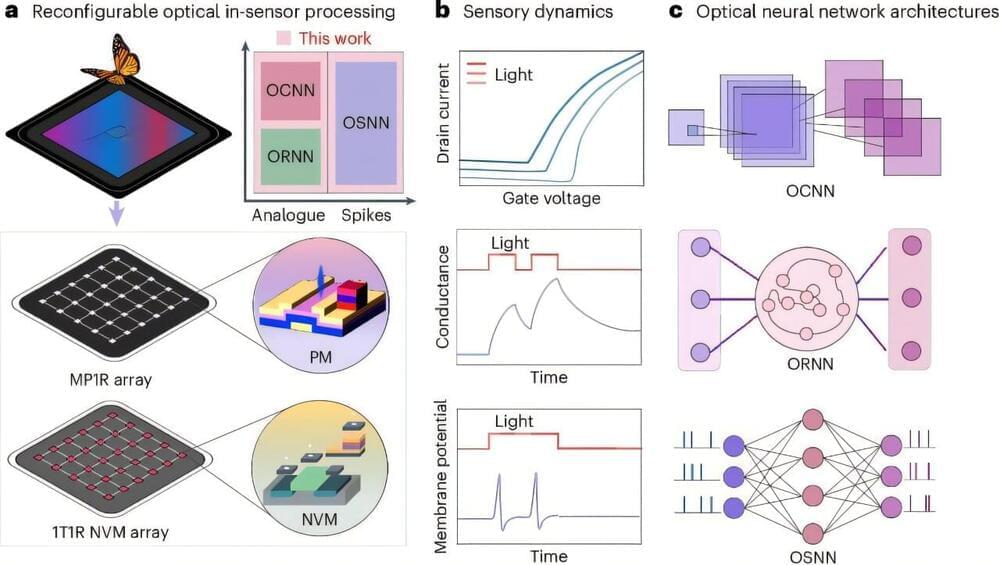

In recent years, engineers have been trying to create hardware systems that better support the high computational demands of machine learning algorithms. These include systems that can perform multiple functions, acting as sensors, memories and computer processors all at once.

Researchers at Peking University recently developed a new reconfigurable neuromorphic computing platform that integrates sensing and computing functions in a single device. This system, outlined in a paper published in Nature Electronics, consists of an array of multiple phototransistors with one memristor (MP1R).

“The inspiration for this research stemmed from the limitations of traditional vision computing systems based on the CMOS von Neumann architecture,” Yuchao Yang, senior author of the paper, told Tech Xplore.