Over the past decade, digital cameras have been widely adopted across society and are now used at massive scale in mobile phones, security surveillance, autonomous vehicles, and facial recognition. These cameras generate enormous amounts of image data, which raises growing concerns about privacy protection.

Some existing methods address these concerns by applying algorithms, such as image blurring or encryption, to conceal sensitive information in the acquired images. However, such methods still risk exposing sensitive data, because the raw images have already been captured before they undergo digital processing to hide or encrypt that information. Running these algorithms also consumes additional power. Other efforts have used customized cameras that degrade image quality so that identifiable information is concealed. However, these approaches sacrifice image quality for all objects of interest, which is undesirable, and they remain vulnerable to adversarial attacks that retrieve the recorded sensitive information.

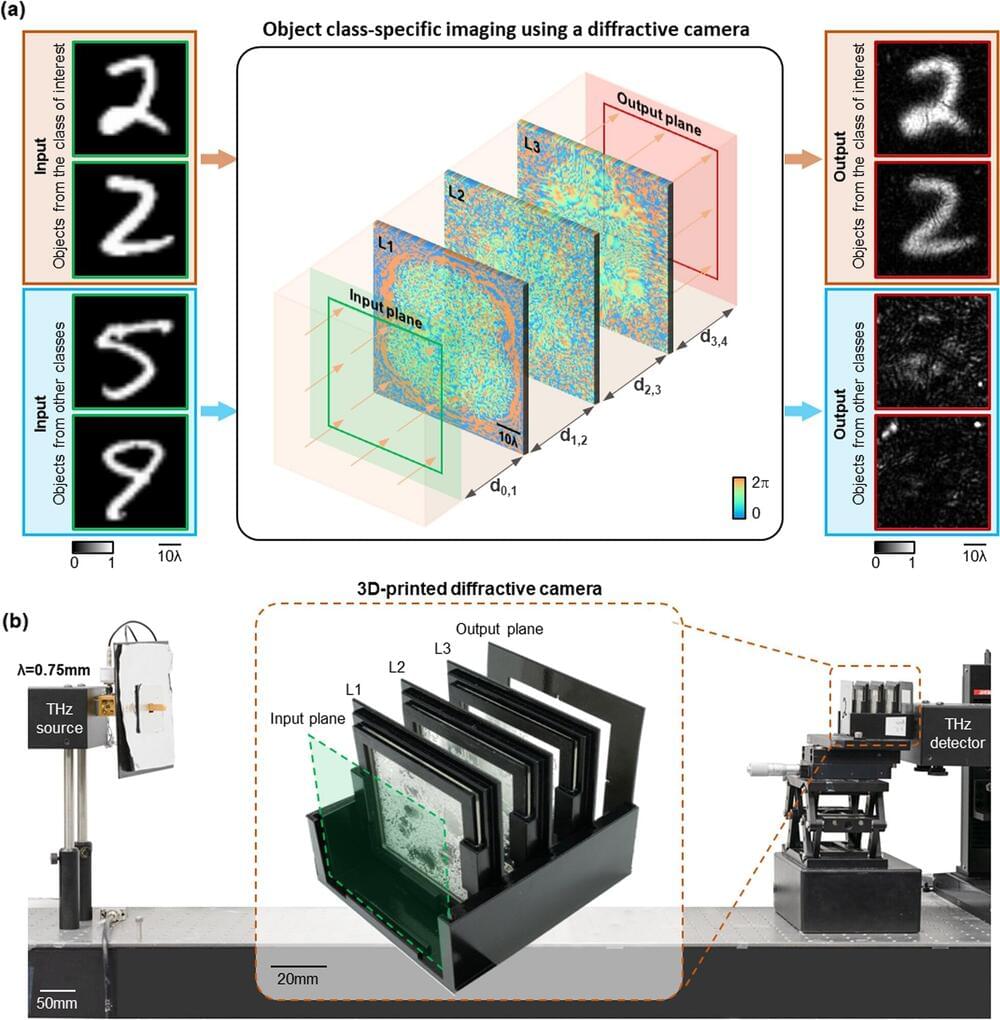

A new research paper published in eLight demonstrates a new paradigm for privacy-preserving imaging: a fundamentally new type of imager designed by AI. In the paper, UCLA researchers led by Professor Aydogan Ozcan present a smart camera design that images only certain desired types of objects, while instantaneously erasing other types of objects from its images without requiring any digital processing.