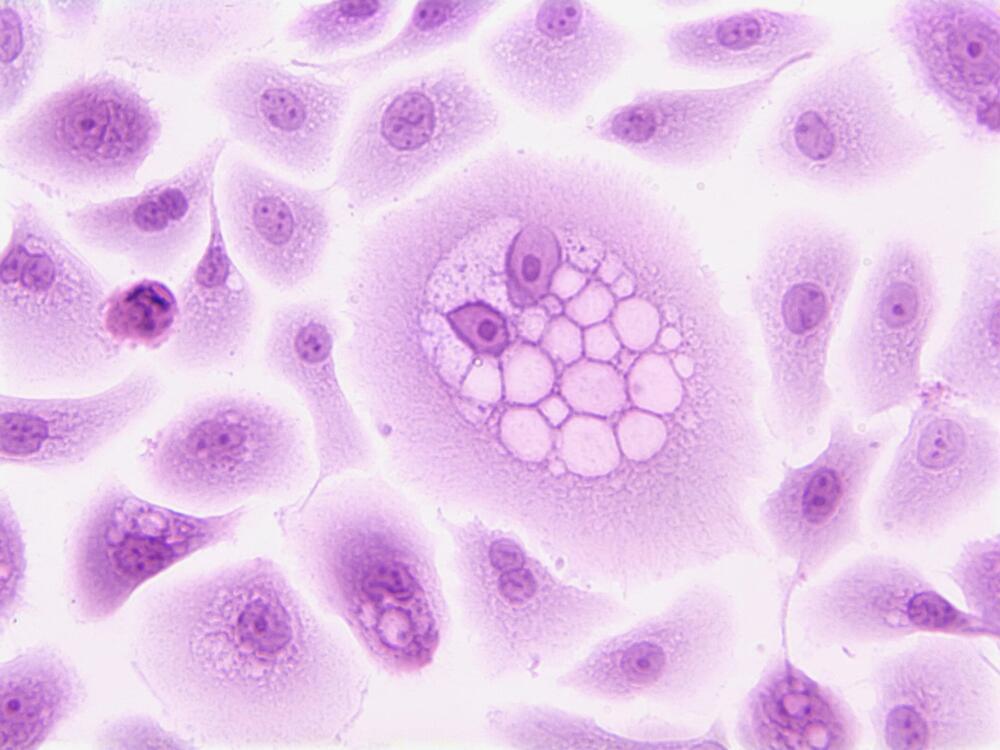

Vanderbilt researchers have developed an active machine learning approach to predict the effects of tumor variants of unknown significance, or VUS, on sensitivity to chemotherapy. VUS, mutated bits of DNA with unknown impacts on cancer risk, are constantly being identified. The growing number of rare VUS makes it imperative for scientists to analyze them and determine the kind of cancer risk they impart.

Traditional prediction methods have limited power and accuracy for rare VUS. Even machine learning, an artificial intelligence tool that leverages data to “learn” and boost performance, falls short when classifying some VUS. Recent work from the lab of Walter Chazin, Chancellor’s Chair in Medicine and professor of biochemistry and chemistry, led by co-first authors and postdoctoral fellows Alexandra Blee and Bian Li, addresses this shortfall with an active machine learning technique.

Active machine learning trains an algorithm on existing data, as conventional machine learning does, and then feeds it new information between rounds of training. Chazin and his lab identified the VUS for which predictions were least certain, performed biochemical experiments on those VUS and incorporated the resulting data into subsequent rounds of algorithm training. This allowed the model to continuously improve its VUS classification.
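
For readers curious how such a loop works in practice, the cycle described above (train, find the least certain predictions, run experiments on them, retrain) can be sketched in a few lines of Python. This is only an illustrative toy and not the researchers' actual model or data: the one-dimensional logistic regression, the `run_experiment` function standing in for a biochemical assay, the 0.6 threshold and all other names and parameters are assumptions made up for the example. The selection rule shown is commonly called uncertainty sampling.

```python
import math
import random


def train_logistic(xs, ys, epochs=500, lr=0.5):
    """Fit a toy 1-D logistic regression by plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict_proba(model, x):
    """Predicted probability that x belongs to class 1."""
    w, b = model
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def run_experiment(x):
    """Hypothetical stand-in for a lab assay: returns the 'true' label."""
    return 1 if x > 0.6 else 0


def active_learning(pool, labeled_x, labeled_y, rounds=5, batch=2):
    """Repeatedly label the least certain pool items and retrain."""
    pool = list(pool)
    labeled_x, labeled_y = list(labeled_x), list(labeled_y)
    model = train_logistic(labeled_x, labeled_y)
    for _ in range(rounds):
        if not pool:
            break
        # Least certain = predicted probability closest to 0.5.
        pool.sort(key=lambda x: abs(predict_proba(model, x) - 0.5))
        queries, pool = pool[:batch], pool[batch:]
        for x in queries:
            labeled_x.append(x)
            labeled_y.append(run_experiment(x))
        # Retrain with the newly labeled examples included.
        model = train_logistic(labeled_x, labeled_y)
    return model, labeled_x, labeled_y, pool


random.seed(0)
unlabeled = [random.random() for _ in range(40)]
model, seen_x, seen_y, remaining = active_learning(unlabeled, [0.0, 1.0], [0, 1])
print(f"labeled examples: {len(seen_x)}, still unlabeled: {len(remaining)}")
```

The point of the design is economy: each round spends the expensive step (here `run_experiment`, in the lab a biochemical measurement) only on the cases where the current model is least sure, rather than on cases it already classifies confidently.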