Superfast algorithm put crimp in 2019 claim that Google’s machine had achieved “quantum supremacy”.

Turbulence plays a key role in our daily lives, making for bumpy plane rides, affecting weather and climate, limiting the fuel efficiency of the cars we drive, and impacting clean energy technologies. Yet scientists and engineers have long puzzled over how to predict and alter turbulent fluid flows, which remains one of the most challenging problems in science and engineering.

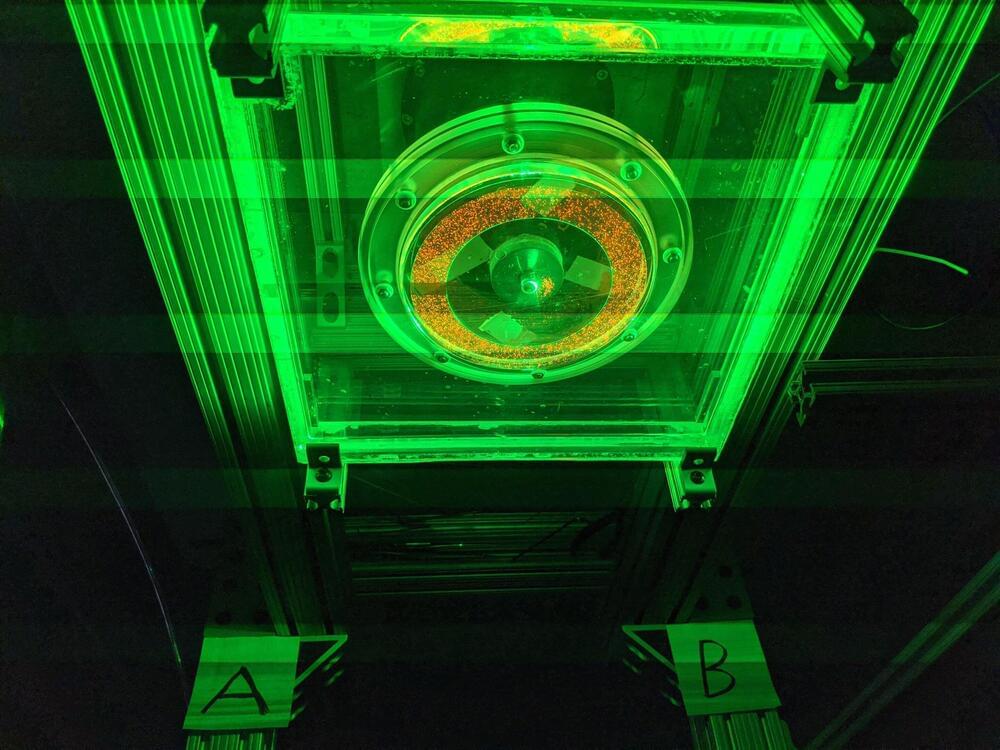

Now, physicists from the Georgia Institute of Technology have demonstrated—numerically and experimentally—that turbulence can be understood and quantified with the help of a relatively small set of special solutions to the governing equations of fluid dynamics that can be precomputed for a particular geometry, once and for all.

“For nearly a century, turbulence has been described statistically as a random process,” said Roman Grigoriev. “Our results provide the first experimental illustration that, on suitably short time scales, the dynamics of turbulence is deterministic—and connects it to the underlying deterministic governing equations.”

From my understanding, inertia is typically taken as an axiom rather than something that can be explained by some deeper phenomenon. However, it’s also my understanding that quantum mechanics must reduce to classical, Newtonian mechanics in the macroscopic limit.

By inertia, I mean the resistance to changes in velocity: the fact that more massive objects (or particles, let’s say) accelerate more slowly under the same force.

What is the quantum mechanical mechanism that, in its limit, leads to Newtonian inertia? Is there some concept of axiomatic inertia that applies to the quantum mechanical equations and explains Newtonian inertia, even if it remains a fundamental assumption of quantum theory?

The tool can identify symptoms of dengue, malaria, leptospirosis, and scrub typhus.

The study investigates both statistical and machine learning approaches. WHO has categorized dengue as a “neglected tropical disease.”

A prediction tool based on multinomial regression analysis and a machine learning algorithm was developed.

Accurate diagnosis is essential for proper treatment and for ensuring the well-being of patients. However, some diseases present with similar clinical symptoms and laboratory results, making them more challenging to diagnose.

Artificial intelligence is perhaps the most promising technology for transforming our lives, but it’s also incredibly scary. At CES 2022, a panel of AI experts discussed what role AI might play in the future of healthcare.

In a session titled “Consumer Safety Driven by AI,” Pat Baird, an AI engineer and software developer who works in standards and regulations for Philips, and Joseph Murphy, VP Marketing at Sensory Inc., an American technology company that develops AI products, discussed what AI could add to our lives. They also discussed the apprehension many people feel about the technology.

Summary: A newly developed artificial intelligence model can detect Parkinson’s disease by reading a person’s breathing patterns. The algorithm can also discern the severity of Parkinson’s disease and track progression over time.

Source: MIT

Parkinson’s disease is notoriously difficult to diagnose as it relies primarily on the appearance of motor symptoms such as tremors, stiffness, and slowness, but these symptoms often appear several years after the disease onset.

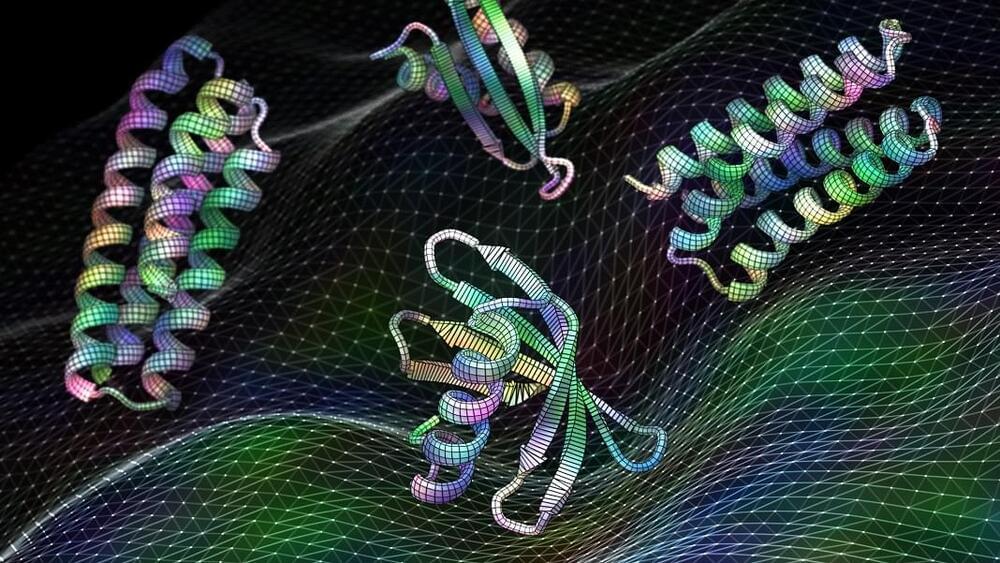

A new study in Science overthrew the whole gamebook. Led by Dr. David Baker at the University of Washington, a team tapped into an AI’s “imagination” to dream up a myriad of functional sites from scratch. It’s a machine mind’s “creativity” at its best—a deep learning algorithm that predicts the general area of a protein’s functional site, but then further sculpts the structure.

As a reality check, the team used the new software to generate drugs that battle cancer and design vaccines against common, if sometimes deadly, viruses. In one case, the digital mind came up with a solution that, when tested in isolated cells, was a perfect match for an existing antibody against a common virus. In other words, the algorithm “imagined” a hotspot from a viral protein, making it vulnerable as a target to design new treatments.

The algorithm is deep learning’s first foray into building proteins around their functions, opening a door to treatments that were previously unimaginable. But the software isn’t limited to natural protein hotspots. “The proteins we find in nature are amazing molecules, but designed proteins can do so much more,” said Baker in a press release. The algorithm is “doing things that none of us thought it would be capable of.”

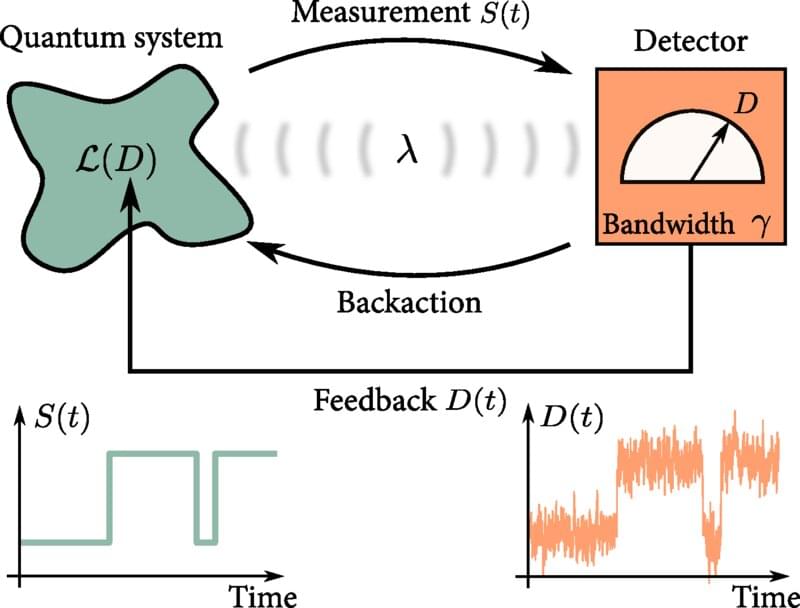

As the size of modern technology shrinks down to the nanoscale, weird quantum effects—such as quantum tunneling, superposition, and entanglement—become prominent. This opens the door to a new era of quantum technologies, where quantum effects can be exploited. Many everyday technologies make use of feedback control routinely; an important example is the pacemaker, which must monitor the user’s heartbeat and apply electrical signals to control it, only when needed. But physicists do not yet have an equivalent understanding of feedback control at the quantum level. Now, physicists have developed a “master equation” that will help engineers understand feedback at the quantum scale. Their results are published in the journal Physical Review Letters.

“It is vital to investigate how feedback control can be used in quantum technologies in order to develop efficient and fast methods for controlling quantum systems, so that they can be steered in real time and with high precision,” says co-author Björn Annby-Andersson, a quantum physicist at Lund University, in Sweden.

An example of a crucial feedback-control process in quantum computing is quantum error correction. A quantum computer encodes information on physical qubits, which could be photons of light, or atoms, for instance. But the quantum properties of the qubits are fragile, so it is likely that the encoded information will be lost if the qubits are disturbed by vibrations or fluctuating electromagnetic fields. That means that physicists need to be able to detect and correct such errors, for instance by using feedback control. This error correction can be implemented by measuring the state of the qubits and, if a deviation from what is expected is detected, applying feedback to correct it.
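The measure-then-correct loop described above can be illustrated with a small sketch. This is only a classical analogue, assuming a three-bit repetition code in place of real qubits; the function names (`encode`, `measure_syndrome`, `feedback_step`, `decode`) are hypothetical and are not taken from the paper or from any quantum library.

```python
# Classical stand-in for feedback-based error correction (an assumption for
# illustration): one logical bit is stored redundantly in three physical bits.

def encode(bit):
    """Store one logical bit as three identical physical bits."""
    return [bit, bit, bit]

def measure_syndrome(bits):
    """Compare neighbouring bits. The result shows where a single flip
    occurred without revealing the logical value itself."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

# Syndrome -> index of the bit to flip back (None means nothing to correct).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def feedback_step(bits):
    """Measure the syndrome and, if a deviation is detected, apply feedback."""
    target = CORRECTION[measure_syndrome(bits)]
    corrected = list(bits)
    if target is not None:
        corrected[target] ^= 1
    return corrected

def decode(bits):
    """Recover the logical bit by majority vote."""
    return 1 if sum(bits) >= 2 else 0

if __name__ == "__main__":
    disturbed = encode(0)
    disturbed[1] ^= 1                   # a single bit is disturbed
    print(feedback_step(disturbed))     # prints [0, 0, 0]
```

This sketch only handles a single flipped bit per round. If two bits are disturbed at once, the feedback step will "correct" toward the wrong logical value, which is one reason practical schemes need fast, repeated feedback.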

Circa 2019. Biological singularity, here we come :3.

Scientific Reports volume 9, Article number: 12181 (2019).

Given the potential scope and capabilities of quantum technology, it is absolutely crucial not to repeat the mistakes made with AI—where regulatory failure has given the world algorithmic bias that hypercharges human prejudices, social media that favors conspiracy theories, and attacks on the institutions of democracy fueled by AI-generated fake news and social media posts. The dangers lie in the machine’s ability to make decisions autonomously, with flaws in the computer code resulting in unanticipated, often detrimental, outcomes. In 2021, the quantum community issued a call for action to urgently address these concerns. In addition, critical public and private intellectual property on quantum-enabling technologies must be protected from theft and abuse by the United States’ adversaries.

https://urldefense.com/v3/__https:/www.youtube.com/watch?v=5…MexaVnE%24

There are national defense issues involved as well. In security technology circles, the holy grail is what’s called a cryptanalytically relevant quantum computer: a system capable of breaking much of the public-key cryptography that digital systems around the world use, which would enable blockchain cracking, for example. That’s a very dangerous capability to have in the hands of an adversarial regime.

Experts warn that China appears to have a lead in various areas of quantum technology, such as quantum networks and quantum processors. Two of the world’s most powerful quantum computers have been built in China, and as far back as 2017, scientists at the University of Science and Technology of China in Hefei built the world’s first quantum communication network using advanced satellites. To be sure, these publicly disclosed projects are scientific machines to prove the concept, with relatively little bearing on the future viability of quantum computing. However, knowing that all governments are pursuing the technology simply to prevent an adversary from being first, these Chinese successes could well indicate an advantage over the United States and the rest of the West.

We need the computers and sensors to better our lives, to allow everyone access to the wisdom of the ages. We can’t collect all the data ourselves and try to make sense of it without machines because our brains aren’t up to the task. Imagine if every little decision everyone has made over the past thousand years along with its outcome had been recorded on index cards and stored in a gargantuan facility somewhere. Remember that giant warehouse at the end of the first Indiana Jones movie where they ended up storing the Ark of the Covenant? That’s where index cards AA through AC are housed. Imagine five thousand more of those to store all that data. What could we do with it? Nothing useful.

Computers can do only one thing: manipulate ones and zeros in memory. But they can do that at breathtaking speeds with perfect accuracy. Our challenge is getting all that data into the digital mirror, to copy our analog lives in their digital brains. Cheap sensors and computers will do this for us, with prices that fall every year and capabilities that increase.

Coupling massive processing power with sensors will create a species-level brain and memory. Instead of being billions of separate people with siloed knowledge, we will become billions of people who share a single vast intellect. Comparisons to The Matrix are easy to make but are not really apropos. We aren’t talking about a world without human agency but with enhanced agency, information-based agency. Making decisions informed by data is immeasurably better. Even if someone ignores the suggestion of the digital mirror, they are richer for knowing it. Imagine having an AI that could not only tell you what you should do but would allow you to insert your own values into the decision process. In fact, the system would learn your values from your actions, and the suggestions it gives you would be different from those it would give everyone else, as they should be. If knowledge is power, such a system is by definition the ultimate in empowerment. Every person on the planet could effectively be smarter and wiser than anyone who has ever lived.