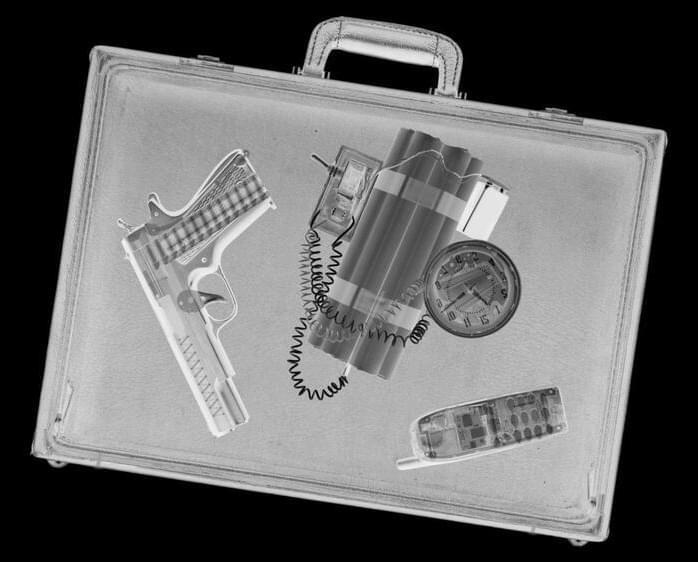

How can mobile robots perceive and understand the environment correctly, even if parts of the environment are occluded by other objects? This is a key question that must be solved for self-driving vehicles to safely navigate in large crowded cities. While humans can imagine complete physical structures of objects even when they are partially occluded, existing artificial intelligence (AI) algorithms that enable robots and self-driving vehicles to perceive their environment do not have this capability.

Robots with AI can already find their way around and navigate on their own once they have learned what their environment looks like. However, perceiving the entire structure of objects when they are partially hidden, such as people in crowds or vehicles in traffic jams, has been a significant challenge. A major step towards solving this problem has now been taken by Freiburg robotics researchers Prof. Dr. Abhinav Valada and Ph.D. student Rohit Mohan from the Robot Learning Lab at the University of Freiburg, which they have presented in two joint publications.

The two Freiburg scientists have developed the amodal panoptic segmentation task and demonstrated its feasibility using novel AI approaches. Until now, self-driving vehicles have used panoptic segmentation to understand their surroundings.