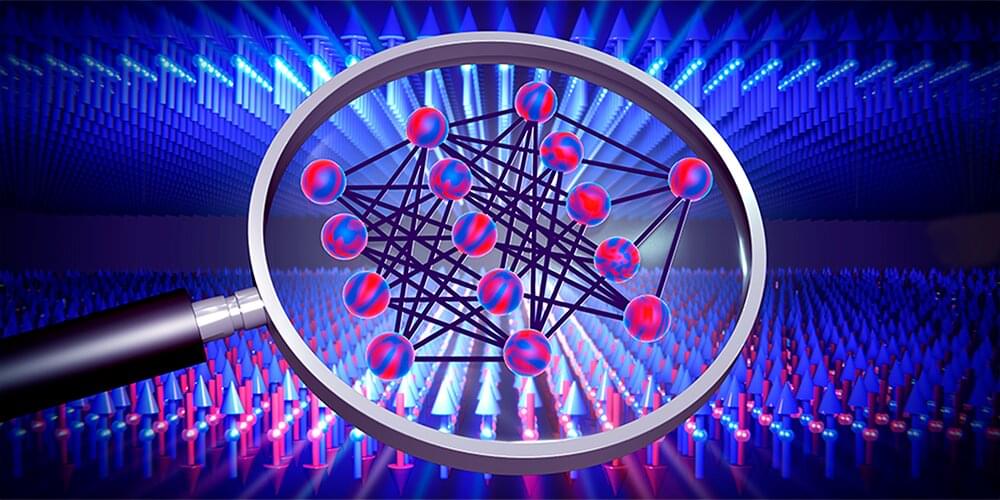

Scientists trained a machine learning tool to capture the physics of electrons moving on a lattice using far fewer equations than would typically be required, all without sacrificing accuracy. Using artificial intelligence, physicists compressed a daunting quantum problem that until now required 100,000 equations into a bite-size task of as few as four equations. The work could revolutionize how scientists investigate systems containing many interacting electrons. If the approach scales to other problems, it could also aid in designing materials with highly sought-after properties, such as superconductivity or usefulness for clean energy generation.
