Humans and animals detect stimuli such as light, sound, and odor through nerve cells, which then transmit the information to the brain. Nerve cells must be able to adjust to the wide range of stimuli they receive, from very weak to very strong. To do this, they may become more or less sensitive to stimuli overall (sensitization and habituation), or they may become more sensitive to weaker stimuli and less sensitive to stronger stimuli for better overall responsiveness (gain control). However, exactly how nerve cells achieve this is not yet understood.

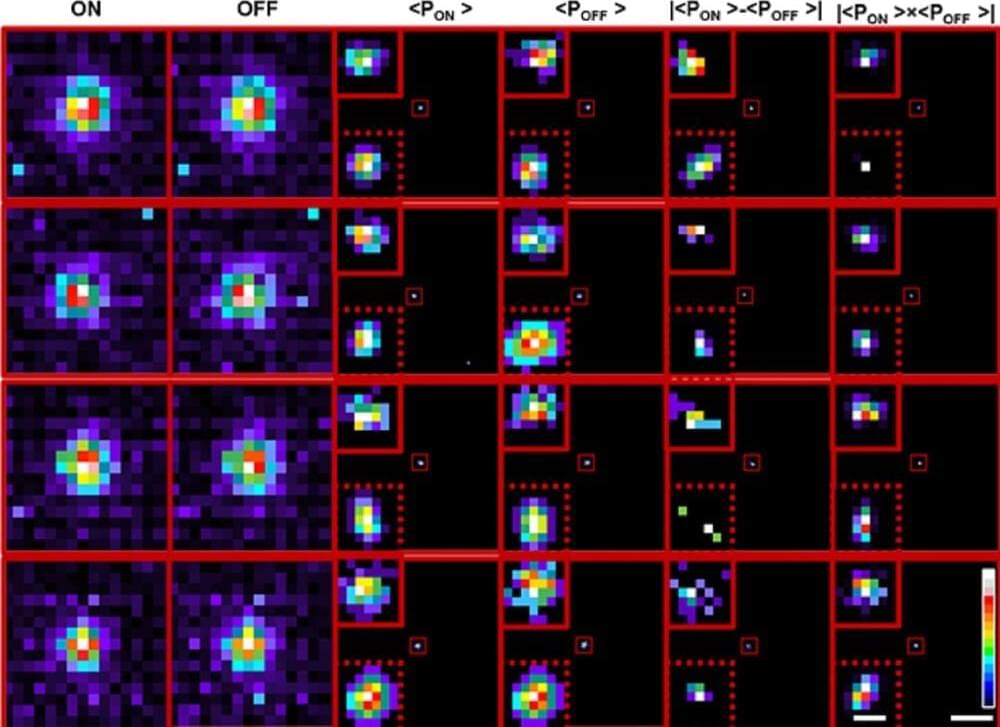

To better understand the process of gain control, a research team led by Professor Kimura at Nagoya City University in Japan studied the roundworm C. elegans. They found that, when the worm first smells an unpleasant odor, its nerve cells exhibit a large, quickly increasing, and continuous response to both weak and strong stimuli. After prior exposure to the odor, however, the response to weak stimuli is smaller and slower, while the response to strong stimuli remains large, similar to the response on first exposure. Because this odor experience also makes the worms move away from the odor more efficiently, the change suggests that the nerve cells adjust their response through gain control to better adapt to the stimulus.

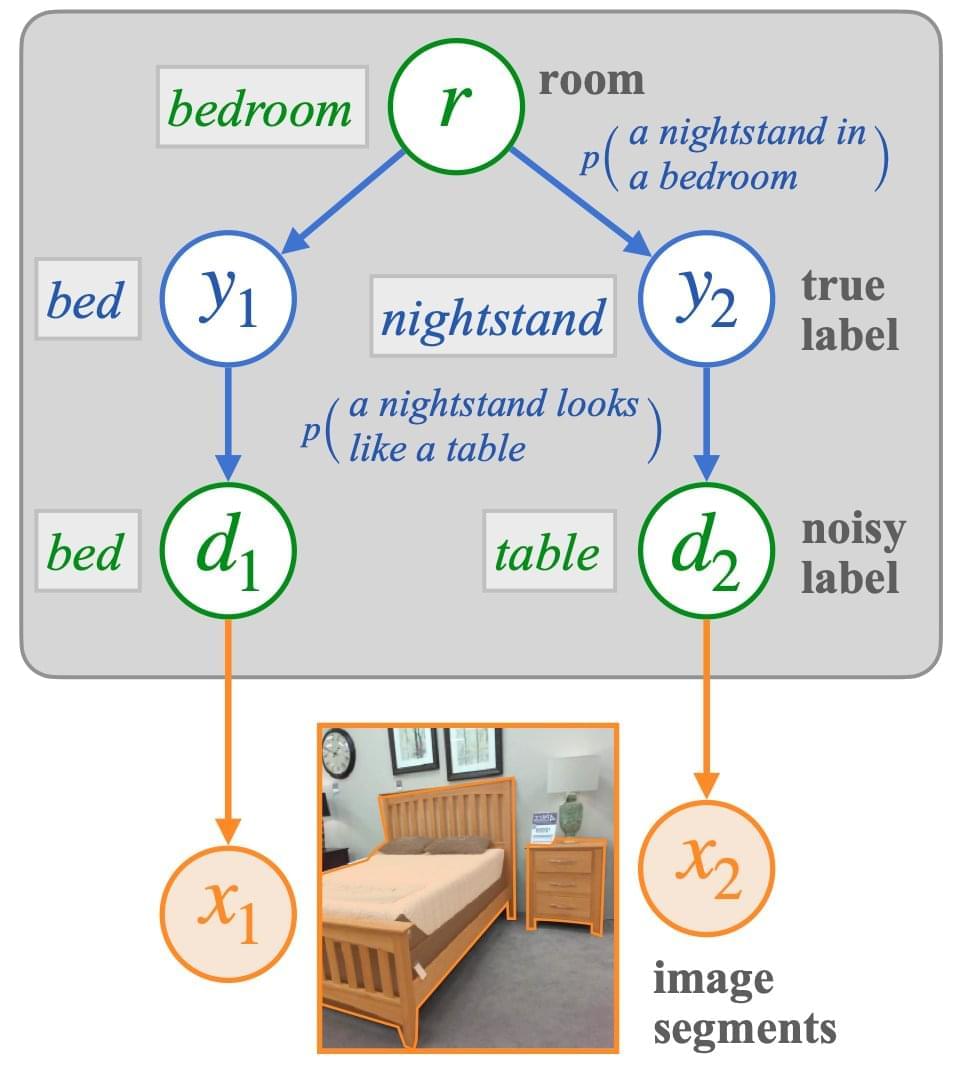

The researchers then used mathematical modeling, a powerful tool for understanding complex biological processes, to analyze this change. They found that the “response to first smell” consists of fast and slow components, while the “response after exposure” consists only of the slow component, meaning that the odor experience inhibits the fast component to achieve gain control. They further found that both responses could be described by a simple differential equation: the slow component corresponds to the leaky integration of a first-derivative term of the odor concentration that the worm senses, and the fast component to the leaky integration of a second-derivative term. The results of this study showed that prior odor experience appears to inhibit only the mechanism required for the fast component.
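
To make the idea of "leaky integration of derivative terms" more concrete, here is a minimal Python sketch. It is not the researchers' fitted model: the equation form `dr/dt = -r / tau + k_slow * C'(t) + k_fast * C''(t)`, the linear odor ramp, and every name and value (`tau`, `k_slow`, `k_fast`, `DT`) are assumptions chosen only for illustration. Setting `k_fast` to zero stands in for the inhibited fast component after odor exposure. The sketch does not attempt to reproduce the difference between weak and strong stimuli.

```python
def derivative(x, dt):
    """Forward-difference derivative, padded to the input length."""
    d = [(x[i + 1] - x[i]) / dt for i in range(len(x) - 1)]
    d.append(d[-1])
    return d


def leaky_integrate(drive, dt, tau):
    """Euler steps of dr/dt = -r / tau + drive(t), starting from r = 0."""
    r, out = 0.0, []
    for u in drive:
        r += dt * (-r / tau + u)
        out.append(r)
    return out


def simulate(conc, dt, tau, k_slow, k_fast):
    """Leaky integration of first- and second-derivative terms of conc.

    k_slow weights the first derivative (slow component) and k_fast the
    second derivative (fast component). Both weights are hypothetical.
    """
    d1 = derivative(conc, dt)
    d2 = derivative(d1, dt)
    drive = [k_slow * a + k_fast * b for a, b in zip(d1, d2)]
    return leaky_integrate(drive, dt, tau)


# Assumed stimulus: odor concentration is zero, then rises linearly from t = 1 s.
DT, TAU = 0.01, 5.0
times = [i * DT for i in range(3000)]
conc = [max(0.0, t - 1.0) for t in times]

# "First smell": slow and fast components are both active.
naive = simulate(conc, DT, TAU, k_slow=1.0, k_fast=TAU)
# "After exposure": the fast component is switched off.
experienced = simulate(conc, DT, TAU, k_slow=1.0, k_fast=0.0)

for t_probe in (1.5, 5.0, 29.0):
    i = round(t_probe / DT)
    print(f"t={t_probe:5.1f} s  first smell={naive[i]:.2f}  after exposure={experienced[i]:.2f}")
```

In this toy setting the response with the fast term rises almost immediately at odor onset, while the response without it climbs gradually toward the same level, which mirrors the qualitative picture of a fast component that is removed by prior experience.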