The field equations of Einstein’s theory of General Relativity allow for faster-than-light (FTL) travel in principle, so a handful of researchers are working to see whether a Star Trek-style warp drive, or perhaps a kind of artificial wormhole, could be built with our technology.

Even if FTL travel were shown to be feasible tomorrow, designs for an FTL system could be as far ahead of a functional starship as Leonardo da Vinci’s 16th-century drawings of flying machines were ahead of the Wright Flyer of 1903. But this need not be a showstopper for human interstellar flight in the next century or two. Short of FTL travel, there are technologies in the works that could enable human expeditions to planets orbiting some of the nearest stars.

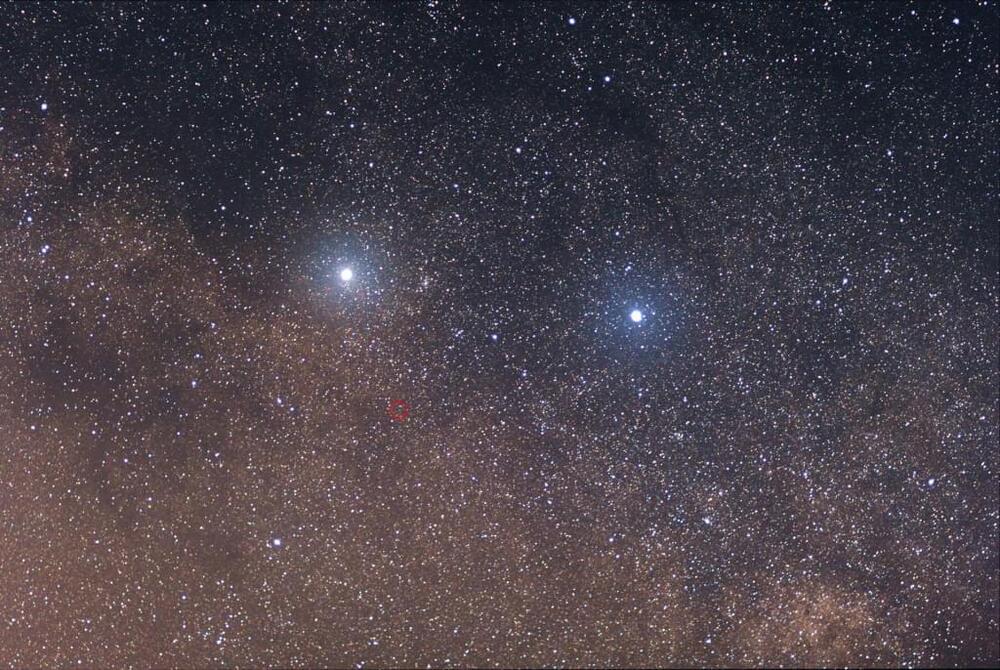

Certainly, the feasibility of such missions will depend on geopolitical and economic factors. But it will also depend on the distance to the nearest Earth-like exoplanet. Located roughly 4.37 light years away, Alpha Centauri is the Sun’s closest stellar neighbor; thus science fiction, including Star Trek, has envisioned it as humanity’s first interstellar destination.