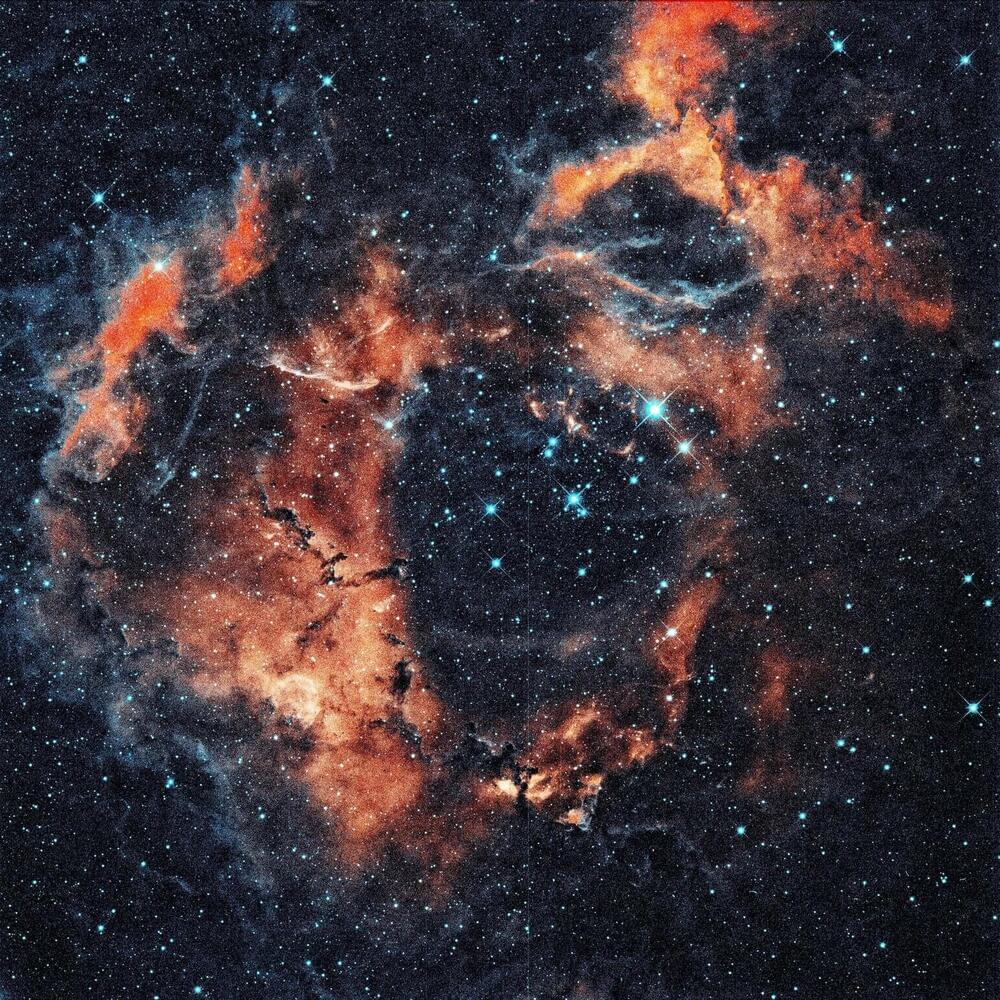

Throughout history, humans have gazed at the sky, contemplating its lights: the sun, the moon, and the stars beyond. In those ancient moments, an insatiable curiosity ignited within them, urging them to seek answers about the origins of the cosmos. That curiosity has been passed down ever since, compelling each generation to develop theories in pursuit of one timeless question: Where did it all come from?

One of the most complete and widely accepted theories on this question is the Big Bang Theory. The Big Bang is a scientific theory proposing that the universe began at a single point in space-time known as the singularity. In other words, all the matter and energy of the universe were once confined to an inconceivably small point of extremely high density and temperature (Williams & Today, n.d.). The Big Bang is thought to have been a colossal release of energy, roughly 13.8 billion years ago, that set off the rapid expansion of the universe and ultimately led to the formation of galaxies, stars, planetary systems, and eventually humankind. What triggered this sudden expansion? The question continues to puzzle cosmologists, and the answer remains unknown to this day (What Is the Big Bang Theory? n.d.).

Albert Einstein completed his General Theory of Relativity in 1915, and when he applied it to the universe as a whole in 1917, he faced a challenge. If gravity only attracted objects, the universe would eventually collapse under its own weight. Yet the universe appeared to be static, not collapsing. To resolve this, Einstein introduced a cosmological constant into his equations. The constant acted as a counterforce to gravity and allowed for a static model of the universe. Einstein could not have known that the astronomer Edwin Hubble would soon contradict that model. Working at Mount Wilson Observatory in California in the late 1920s, Hubble made a landmark observation: light from distant galaxies was shifted toward the red end of the spectrum, a phenomenon known as redshift, indicating that those galaxies were moving away from us (Vogel, 2021).