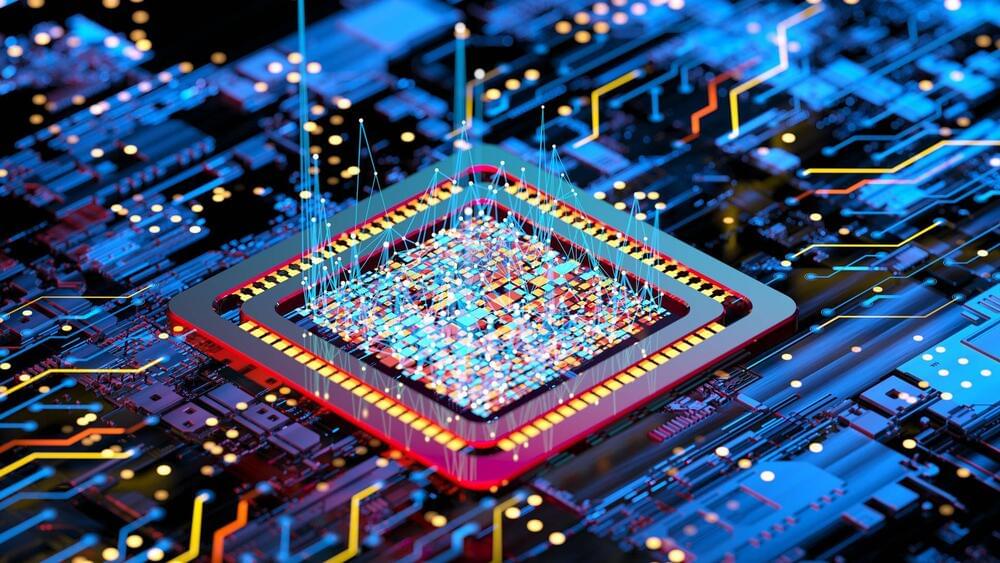

Researchers from the University of Aberdeen develop an AI algorithm to detect planetary craters with high accuracy, efficiency, and flexibility.

A team of scientists from the University of Aberdeen has developed an algorithm that could revolutionize planetary studies. The technology lets scientists detect craters and accurately map planetary surfaces from different types of data, according to a release.

The team built its universal crater detection algorithm (CDA) on the Segment Anything Model (SAM), an artificial intelligence (AI) model that can automatically identify and cut out any object in any image.
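The release does not say how the generic object masks produced by SAM are narrowed down to craters. As a rough illustration only, the sketch below assumes one plausible step: keeping masks that are roughly circular. The function names, the thresholds, and the synthetic masks standing in for SAM output are all hypothetical and are not taken from the Aberdeen team's work.

```python
import math

def make_disc(size, cx, cy, r):
    """Synthetic binary mask of a filled disc (stand-in for one segmentation mask)."""
    return [[(x - cx) ** 2 + (y - cy) ** 2 <= r * r for x in range(size)]
            for y in range(size)]

def make_rect(size, x0, y0, w, h):
    """Synthetic binary mask of a filled rectangle (a non-crater shape)."""
    return [[x0 <= x < x0 + w and y0 <= y < y0 + h for x in range(size)]
            for y in range(size)]

def roundness(mask):
    """Mask area divided by the area of a circle spanning its bounding box.

    Close to 1.0 for a disc, far below 1.0 for elongated shapes,
    and above 1.0 for boxy shapes such as squares.
    """
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, filled in enumerate(row) if filled]
    if not pixels:
        return 0.0
    xs = [p[0] for p in pixels]
    ys = [p[1] for p in pixels]
    diameter = max(max(xs) - min(xs), max(ys) - min(ys)) + 1
    return len(pixels) / (math.pi * (diameter / 2) ** 2)

def crater_candidates(masks, lo=0.85, hi=1.15):
    """Keep masks whose roundness falls in [lo, hi] (assumed thresholds)."""
    return [m for m in masks if lo <= roundness(m) <= hi]

disc = make_disc(40, 20, 20, 10)      # crater-like shape
strip = make_rect(40, 5, 5, 30, 4)    # elongated feature
square = make_rect(40, 5, 5, 10, 10)  # boxy feature
kept = crater_candidates([disc, strip, square])
print(len(kept))
```

In a real pipeline the masks would come from a segmentation model rather than from `make_disc` and `make_rect`, and the thresholds would need tuning against labeled crater data.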