How can artificial intelligence (AI) earn, and rebuild, our trust over time? This is what a recent study published in AI and Ethics hopes to address as a p | Technology

Professors Karl Friston & Mark Solms, pioneers in the fields of neuroscience, psychology, and theoretical biology, delve into the frontiers of consciousness: “Can We Engineer Artificial Consciousness?” From mimicry to qualia, this historic conversation tackles whether artificial consciousness is achievable, and how. Essential viewing/listening for anyone interested in the mind, AI ethics, and the future of sentience. Subscribe to the channel for more profound discussions!

Professor Karl Friston is one of the most highly cited living neuroscientists. He is Professor of Neuroscience at University College London and holds Honorary Doctorates from the University of Zurich, University of York and Radboud University. He is the world expert on brain imaging, neuroscience, and theoretical neurobiology, and pioneers the Free-Energy Principle for action and perception, with well over 300,000 citations. Friston was elected a Fellow of the Academy of Medical Sciences in 1999. In 2000 he was President of the international Organization for Human Brain Mapping. He was elected a Fellow of the Royal Society in 2006. He became a Fellow of the Royal Society of Biology in 2012 and was elected as a member of EMBO (excellence in the life sciences) in 2014 and the Academia Europaea in 2015.

Professor Mark Solms is director of Neuropsychology in the Neuroscience Institute of the University of Cape Town and Groote Schuur Hospital (Departments of Psychology and Neurology), an Honorary Lecturer in Neurosurgery at the Royal London Hospital School of Medicine, an Honorary Fellow of the American College of Psychiatrists, and the President of the South African Psychoanalytical Association. He is also Research Chair of the International Psychoanalytical Association (since 2013). He founded the International Neuropsychoanalysis Society in 2000 and he was a Founding Editor (with Ed Nersessian) of the journal Neuropsychoanalysis. He is Director of the Arnold Pfeffer Center for Neuropsychoanalysis at the New York Psychoanalytic Institute. He is also Director of the Neuropsychoanalysis Foundation in New York, a Trustee of the Neuropsychoanalysis Fund in London, and Director of the Neuropsychoanalysis Trust in Cape Town.

TIMESTAMPS:

0:00 — Introduction.

0:45 — Defining Consciousness & Intelligence.

8:20 — Minimizing Free Energy + Maximizing Affective States.

9:07 — Knowing if Something is Conscious.

13:40 — Mimicry & Zombies.

17:13 — Homology in Consciousness Inference.

21:27 — Functional Criteria for Consciousness.

25:10 — Structure vs Function Debate.

29:35 — Mortal Computation & Substrate.

35:33 — Biological Naturalism vs Functionalism.

42:42 — Functional Architectures & Independence.

48:34 — Is Artificial Consciousness Possible?

55:12 — Reportability as Empirical Criterion.

57:28 — Feeling as Empirical Consciousness.

59:40 — Mechanistic Basis of Feeling.

1:06:24 — Constraints that Shape Us.

1:12:24 — Actively Building Artificial Consciousness (Mark’s current project)

1:24:51 — Hedonic Place Preference Test & Ethics.

1:30:51 — Conclusion.

EPISODE LINKS:

- Karl’s Round 1: https://youtu.be/Kb5X8xOWgpc

- Karl’s Round 2: https://youtu.be/mqzyKs2Qvug

- Karl’s Lecture 1: https://youtu.be/Gp9Sqvx4H7w

- Karl’s Lecture 2: https://youtu.be/Sfjw41TBnRM

- Karl’s Lecture 3: https://youtu.be/dM3YINvDZsY

- Mark’s Round 1: https://youtu.be/qqM76ZHIR-o

- Mark’s Round 2: https://youtu.be/rkbeaxjAZm4


Can a child’s imagination alter the laws of physics? In this speculative science essay, we explore SCP-239, “The Witch Child” — a sleeping eight-year-old whose mind can reshape matter, rewrite probability, and collapse reality itself.

We examine how the SCP Foundation’s containment procedures—from telekill alloys to induced comas—reflect humanity’s struggle to contain a consciousness powerful enough to bend the universe. Through philosophy, ethics, and quantum speculation, this essay asks:

What happens when belief becomes a force of nature?

🎓 About the Series.

This video is part of our Speculative Science series, where we analyze anomalous phenomena through physics, cognitive science, and ethics.

📅 New speculative science videos every weekday at 6 PM PST / 9 PM EST.

🔔 Subscribe and turn on notifications to stay at the edge of what’s possible.

💬 Share your theories in the comments below:

Should SCP-239 remain asleep forever, or does humanity have a moral duty to understand her?


Humanity stands at a crossroads. Our beautiful Earth, cradle of all we know, is straining under the weight of nearly 8.5 billion people. Environmental degradation, social inequity, and resource scarcity deepen by the day. We are reaching the limits of a single-planet civilization. We can face this challenge in two ways. Some will cling to the old patterns, fighting over dwindling resources and defending narrow borders. Others will rise above, expanding into space not to escape Earth, but to renew and sustain it. These pioneers, the Space Settlers, will carry the next chapter of civilization beyond our home planet.

The Humanist Path: Living in Free Space. When people imagine living beyond Earth, they often picture Lunar or Martian colonies. Yet, from a humanist perspective, a better path exists: rotating free space habitats, as envisioned by Gerard K. O’Neill. These are vast, spinning structures orbiting Earth or the Moon, or standing at Lagrange Libration Points, designed to simulate Earth’s gravity and sustain full, flourishing communities. Unlike planetary colonies bound to weak gravity, dust, or darkness, O’Neill habitats offer: 1g simulated gravity to preserve human health; continuous sunlight and abundant solar energy; freedom of movement, as habitats can orbit safely or relocate if needed. More than technical achievements, these habitats embody the Enlightenment spirit—the belief that reason, ethics, and creativity can design environments of dignity, beauty, and freedom.
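The claim of 1g simulated gravity follows from the standard centripetal relation a = ω²r: a habitat of radius r must spin at ω = √(g/r) for the rim to feel one Earth gravity. The sketch below is only an illustration of that relation. The radii are hypothetical values chosen for the example, not figures from O’Neill’s designs or from this essay.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2, the standard value of 1 g


def spin_rate(radius_m: float, accel: float = STANDARD_GRAVITY) -> float:
    """Angular velocity (rad/s) at which the rim's centripetal acceleration equals accel."""
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    return math.sqrt(accel / radius_m)


def to_rpm(omega: float) -> float:
    """Convert an angular velocity in rad/s to revolutions per minute."""
    return omega * 60.0 / (2.0 * math.pi)


if __name__ == "__main__":
    # Hypothetical habitat radii in metres (assumptions for illustration only).
    for radius in (250.0, 1000.0, 3200.0):
        omega = spin_rate(radius)
        print(f"radius {radius:6.0f} m: {to_rpm(omega):.2f} rpm, "
              f"rim speed {omega * radius:.0f} m/s")
```

Because ω falls as the radius grows, a larger habitat can provide the same rim gravity while spinning more slowly, which is one reason the designs described here are so large.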

Freedom and Human Dignity in Space. Freedom is at the heart of humanity’s destiny. Consider a lunar settler who, after a few years of living on the Moon, finds his bones too fragile to withstand Earth’s gravity: he is trapped by biology. In contrast, inhabitants of a rotating habitat retain the freedom to return to Earth at will. Simulated gravity safeguards their health, ensuring that space settlement remains reversible and voluntary. Freedom of movement leads naturally to freedom of culture. In a habitat like “New Gaia”, thousands of people from all nations live together: Russians celebrating Maslenitsa, Indians lighting Diwali lamps, and space-born storytellers sharing ancient myths. New traditions also emerge: festivals, music, and art inspired by life between worlds. These habitats can become beacons of a new Renaissance, a rebirth of cultural and creative freedom beyond the constraints of geography and politics.

What if a simple apartment door in Boston opened into another universe?

SCP-4357, also known as “Slimelord,” is one of the strangest and most human anomalies ever recorded — a hyperspatial discontinuity leading to a world of intelligent slug-like beings with philosophy, humor, and heartbreak.

In this speculative science essay, we explore what SCP-4357 means for physics, biology, and the idea of consciousness itself. How could life evolve intelligence in a sulfur-rich world? Why do these beings mirror human culture so closely? And what happens when curiosity crosses the line into exploitation?

Join us as we break down the science, ethics, and wonder behind one of the SCP Foundation’s most thought-provoking entries.

🔔 Subscribe for more speculative science every weekday at 6PM PST / 9PM EST.

💡 Become a channel member for early access and exclusive behind-the-scenes content.

🌌 Because somewhere out there, even the slugs have opinions on Kant.

Introduction.

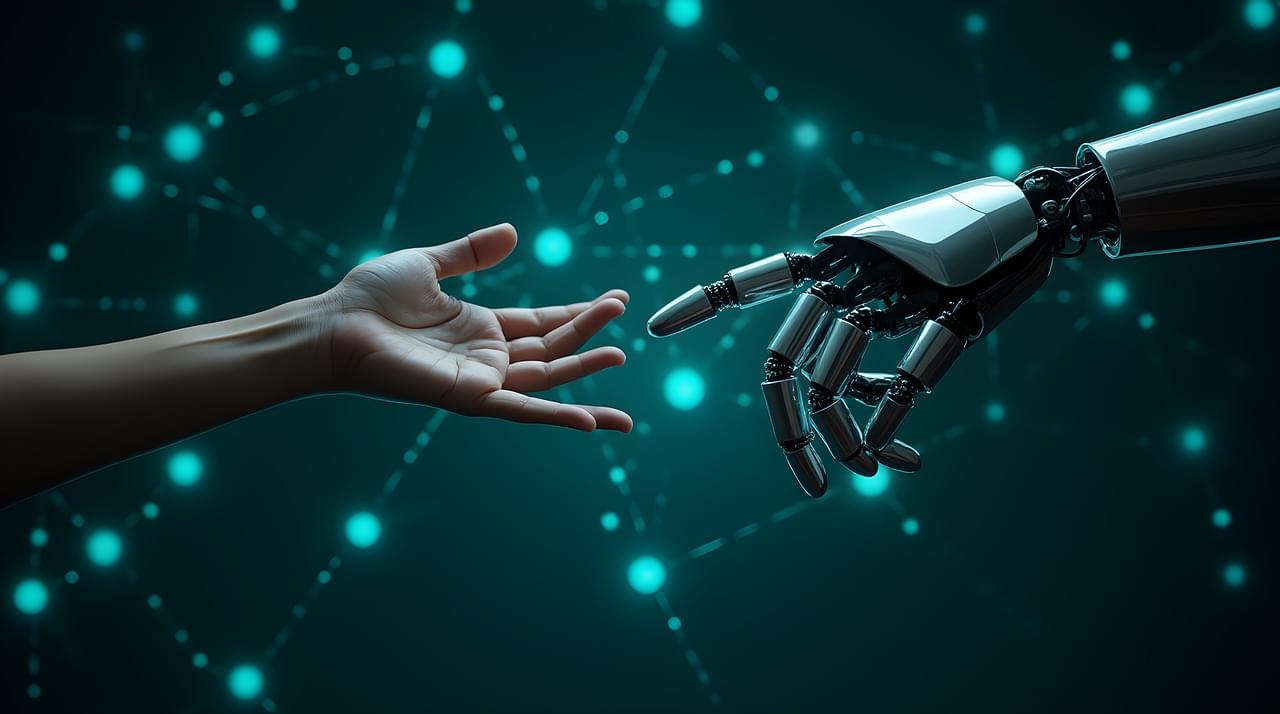

Grounded in the scientific method, it critically examines the work’s methodology, empirical validity, broader implications, and opportunities for advancement, aiming to foster deeper understanding and iterative progress in quantum technologies.

## Executive Summary.

This work, based on experiments conducted in 1984–1985, addresses a fundamental question in quantum physics: the scale at which quantum effects persist in macroscopic systems.

By engineering a Josephson junction-based circuit where billions of Cooper pairs behave collectively as a single quantum entity, the laureates provided empirical evidence that quantum phenomena like tunneling through energy barriers and discrete energy levels can manifest in human-scale devices.

This breakthrough bridges microscopic quantum mechanics with macroscopic engineering, laying foundational groundwork for advancements in quantum technologies such as quantum computing, cryptography, and sensors.

Overall strengths include rigorous experimental validation and profound implications for quantum information science, though gaps exist in scalability to room-temperature applications and full mitigation of environmental decoherence.

Framed within the broader context, this award highlights the enduring evolution of quantum mechanics from theoretical curiosity to practical innovation, building on prior Nobel-recognized discoveries like the Josephson effect (1973) and superconductivity mechanisms (1972).

WARNING: AI could end humanity, and we’re completely unprepared. Dr. Roman Yampolskiy reveals how AI will take 99% of jobs, why Sam Altman is ignoring safety, and how we’re heading toward global collapse…or even World War III.

Dr. Roman Yampolskiy is a leading voice in AI safety and a Professor of Computer Science and Engineering. He coined the term “AI safety” in 2010 and has published over 100 papers on the dangers of AI. He is also the author of books such as ‘Considerations on the AI Endgame: Ethics, Risks and Computational Frameworks’.

He explains:

⬛ How AI could release a deadly virus.

⬛ Why these 5 jobs might be the only ones left.

⬛ How superintelligence will dominate humans.

⬛ Why ‘superintelligence’ could trigger a global collapse by 2027.

⬛ How AI could be worse than nuclear weapons.

⬛ Why we’re almost certainly living in a simulation.

00:00 Intro.

02:28 How to Stop AI From Killing Everyone.

04:35 What’s the Probability Something Goes Wrong?

04:57 How Long Have You Been Working on AI Safety?

08:15 What Is AI?

09:54 Prediction for 2027

11:38 What Jobs Will Actually Exist?

14:27 Can AI Really Take All Jobs?

18:49 What Happens When All Jobs Are Taken?

20:32 Is There a Good Argument Against AI Replacing Humans?

22:04 Prediction for 2030

23:58 What Happens by 2045?

25:37 Will We Just Find New Careers and Ways to Live?

28:51 Is Anything More Important Than AI Safety Right Now?

30:07 Can’t We Just Unplug It?

31:32 Do We Just Go With It?

37:20 What Is Most Likely to Cause Human Extinction?

39:45 No One Knows What’s Going On Inside AI

41:30 Ads.

42:32 Thoughts on OpenAI and Sam Altman.

46:24 What Will the World Look Like in 2100?

46:56 What Can Be Done About the AI Doom Narrative?

53:55 Should People Be Protesting?

56:10 Are We Living in a Simulation?

1:01:45 How Certain Are You We’re in a Simulation?

1:07:45 Can We Live Forever?

1:12:20 Bitcoin.

1:14:03 What Should I Do Differently After This Conversation?

1:15:07 Are You Religious?

1:17:11 Do These Conversations Make People Feel Good?

1:20:10 What Do Your Strongest Critics Say?

1:21:36 Closing Statements.

1:22:08 If You Had One Button, What Would You Pick?

1:23:36 Are We Moving Toward Mass Unemployment?

1:24:37 Most Important Characteristics.

Follow Dr Roman:

X — https://bit.ly/41C7f70

Google Scholar — https://bit.ly/4gaGE72

You can purchase Dr Roman’s book, ‘Considerations on the AI Endgame: Ethics, Risks and Computational Frameworks’, here: https://amzn.to/4g4Jpa5

See my comment below for a link to David Orban’s 20-minute talk.

In this keynote, delivered at The Futurists X Summit, on September 22 in Dubai, David Orban maps how AI and humanoid robotics shift us from steady exponential progress to an acceleration of acceleration—what he calls the Jolting Technologies Hypothesis. He argues we’re not in a zero-sum economy; as capability compounds and doubling times shrink, we unlock new degrees of freedom for individuals, firms, and society. The challenge is to steer that power with clear narratives, robust safety, and deliberate design of work, value, and purpose.

You’ll hear:

• Why narratives (optimism vs. doom) shape which futures become real.

• How shortening doubling times in AI capabilities pull forward timelines once thought 20–30 years out.

• Why trust in AI is task-relative: if +5% isn’t enough, aim for 10× reliability.

• The coming phase transformation as intelligence becomes infrastructure (homes, mobility, industry).

• Concrete social questions (e.g., organ donation post–road-death decline) that demand AI-assisted governance.

• Why the nature of work will change: from jobs as status to human aspiration as value.
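The point above about shrinking doubling times pulling timelines forward can be made concrete with a little arithmetic. The sketch below is an illustration only, not a model from the talk: it assumes each doubling takes a fixed fraction (`shrink`) of the time the previous one took, and every number in it is hypothetical.

```python
def years_for_doublings(n: int, first_doubling_years: float, shrink: float = 1.0) -> float:
    """Total time for n capability doublings.

    shrink = 1.0 keeps the doubling time constant (plain exponential growth).
    shrink < 1.0 makes each doubling faster than the one before it.
    """
    total = 0.0
    doubling_time = first_doubling_years
    for _ in range(n):
        total += doubling_time
        doubling_time *= shrink
    return total


if __name__ == "__main__":
    # Hypothetical scenario: ten doublings (about a 1000x gain), first doubling takes 2 years.
    steady = years_for_doublings(10, 2.0)          # constant doubling time
    jolting = years_for_doublings(10, 2.0, 0.8)    # each doubling takes 80% of the previous
    print(f"constant doubling time: {steady:.1f} years")
    print(f"shrinking doubling time: {jolting:.1f} years")
```

Under these assumed numbers, the same tenfold run of doublings takes 20 years at a constant pace but under 9 years when each doubling is 20% quicker than the last, which is the kind of pull-forward the bullet describes.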

Key ideas:

• Humanoid robots at scale: rapid iteration, non-fragile recovery, and human-complementary performance.

• Designing agency: go from idea → action with near-instant execution; experiment, learn, and iterate fast.

• From zombies to luminaries: use newfound freedom to architect lives worth living.

Resources & Links: