
Category: ethics – Page 7

Post-Biological Civilizations: Life Beyond Flesh and Bone

What happens when intelligence escapes the bounds of flesh and bone? In this episode, we explore post-biological civilizations—entities that may trade biology for digital minds, machine bodies, or stranger forms still—and ask what becomes of identity, purpose, and humanity when the body is no longer required.

Watch my exclusive video Antimatter Propulsion: Harnessing the Power of Annihilation: https://nebula.tv/videos/isaacarthur–…

Get Nebula using my link for 40% off an annual subscription: https://go.nebula.tv/isaacarthur

Get a Lifetime Membership to Nebula for only $300: https://go.nebula.tv/lifetime?ref=isa…

Use the link gift.nebula.tv/isaacarthur to give a year of Nebula to a friend for just $30.

SFIA Discord Server: / discord

Credits:

Post-Biological Civilizations: Life Beyond Flesh and Bone

Episode 498a; May 11, 2025

Written, Produced & Narrated by: Isaac Arthur

Edited by: Ludwig Luska

Select imagery/video supplied by Getty Images

Music Courtesy of Epidemic Sound http://epidemicsound.com/creator

Chris Zabriskie, “Unfoldment, Revealment”

Phase Shift, “Forest Night”

Lewis Gill, “The Phobos Diary”

Stellardrone, “Red Giant”

0:00 Intro

1:24 The Physical Presence of Post-Biological Civilizations

3:38 Societal & Cultural Aspects of Post-Biological Life

5:08 The Scifi Path to Post-Biological Life

8:03 The Singularity and the Ultimate Transition

9:47 Many Paths to Post-Biological Life

17:50 The Fermi Paradox & Post-Biological Civilizations

27:39 Ethics & the Fate of Humanity

29:17 The Transition Process at a Civilizational Scale


Michael Levin: The New Era of Cognitive Biorobotics | Robinson’s Podcast #187

Patreon: https://bit.ly/3v8OhY7

Michael Levin is a Distinguished Professor in the Biology Department at Tufts University, where he holds the Vannevar Bush endowed Chair, and he is also associate faculty at the Wyss Institute at Harvard University. Michael and the Levin Lab work at the intersection of biology, artificial life, bioengineering, synthetic morphology, and cognitive science. Michael also appeared on the show in episode #151, which was all about synthetic life and collective intelligence. In this episode, Michael and Robinson discuss the nature of cognition, working with Daniel Dennett, how cognition can be realized by different structures and materials, how to define robots, a new class of robot called the Anthrobot, and whether or not we have moral obligations to biological robots.

The Levin Lab: https://drmichaellevin.org/

OUTLINE

00:00 Introduction.

02:14 What is Cognition?

08:01 On Working with Daniel Dennett.

13:17 Gatekeeping in Cognitive Science.

25:15 The Multi-Realizability of Cognition.

31:30 What are Anthrobots?

39:33 What Are Robots, Really?

59:53 Do We Have Moral Obligations to Biological Robots?

Robinson’s Website: http://robinsonerhardt.com

Robinson Erhardt researches symbolic logic and the foundations of mathematics at Stanford University. Join him in conversations with philosophers, scientists, weightlifters, artists, and everyone in-between.

The Rise of Cyborgs: Merging Man with Machine | Terrifying Future of Human Augmentation

Human cyborgs are individuals who integrate advanced technology into their bodies, enhancing their physical or cognitive abilities. This fusion of man and machine blurs the line between science fiction and reality, raising questions about the future of humanity, ethics, and the limits of human potential. From bionic limbs to brain-computer interfaces, cyborg technology is rapidly evolving, pushing us closer to a world where humans and machines become one.


AI-Extended Minds: The Future of Human Morality

Source: “AI-Extended Moral Agency?” by Pii Telakivi, Tomi Kokkonen, Raul Hakli & Pekka Mäkelä

Two AIs Discuss: They’re Building Brains In a Jar! Organoid Intelligence (OI)

“Disembodied Brains: Understanding our Intuitions on Human-Animal Neuro-Chimeras and Human Brain Organoids” by John H. Evans. Book Link: https://amzn.to/40SSifF

“Introduction to Organoid Intelligence: Lecture Notes on Computer Science” by Daniel Szelogowski. Book Link: https://amzn.to/3Eqzf4C

“The Emerging Field of Human Neural Organoids, Transplants, and Chimeras: Science, Ethics, and Governance” by the National Academies of Sciences, Engineering, and Medicine. Book Link: https://amzn.to/4hLR1Oe

(Affiliate links: If you use these links to buy something, I may earn a commission at no extra cost to you.)

Playlist: • Two AI’s Discuss: The Quantum Physics…

The hosts explore the ethical and scientific implications of brain organoids and synthetic biological intelligence (SBI). Several sources discuss the potential for consciousness and sentience in these systems, prompting debate on their moral status and the need for ethical guidelines in research. A key focus is determining at what point, if any, brain organoids or SBI merit moral consideration similar to that afforded to humans or animals, a question that shapes research limitations and regulations. The texts also examine the use of brain organoids as a replacement for animal testing in research, highlighting the potential benefits and challenges of this approach. Finally, the development of “Organoid Intelligence” (OI), combining organoids with AI, is presented as a promising but ethically complex frontier in biocomputing.

Our sources discuss several types of brain organoids, which are 3D tissue cultures derived from human pluripotent stem cells (hPSCs) that self-organize to model features of the developing human brain. Here’s a brief overview:

• Cerebral Organoids: This term is often used interchangeably with “brain organoids”. They are designed to model the human neocortex and can exhibit complex brain activity. These organoids can replicate the development of the brain in vitro up to the mid-fetal period.

• Cortical Organoids: These are a type of brain organoid specifically intended to model the human neocortex. They are formed of a single type of tissue and represent one important brain region. They have been shown to develop nerve tracts with functional output.

• Whole-brain Organoids: These organoids are not developed with a specific focus, like the forebrain or cerebellum. They show electrical activity very similar to that of preterm infant brains.

• Region-specific Organoids: These are designed to model specific regions of the brain such as the forebrain, midbrain, or hypothalamus. For example, midbrain-specific organoids can contain functional dopaminergic and neuromelanin-producing neurons.

• Optic Vesicle-containing Brain Organoids (OVB-organoids): These organoids develop bilateral optic vesicles, which are light sensitive, and contain cellular components of a developing optic vesicle, including primitive corneal epithelial and lens-like cells, retinal pigment epithelia, retinal progenitor cells, axon-like projections, and electrically active neuronal networks.

• Brain Assembloids: These are created when organoids from different parts of the brain are placed next to each other, forming links.

• Brainspheres/Cortical Spheroids: These are simpler models that primarily resemble the developing in-vivo human prenatal brain, and are particularly useful for studying the cortex. Unlike brain organoids, they do not typically represent multiple brain regions.

• Mini-brains: This term is now discouraged in favor of the more accurate “brain organoid”.

These various types of brain organoids offer diverse models for studying brain development, function, and disease. Researchers are also working to improve these models by incorporating features like vascularization and sensory input.

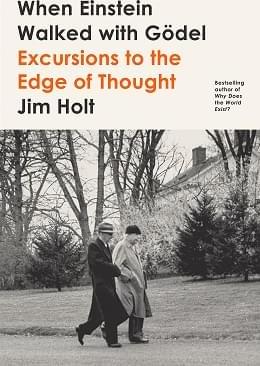

When Einstein Walked with Gödel

Parul Sehgal of The New York Times stated “In these pieces, plucked from the last 20 years, Holt takes on infinity and the infinitesimal, the illusion of time, the birth of eugenics, the so-called new atheism, smartphones and distraction. It is an elegant history of recent ideas. There are a few historical correctives — he dismantles the notion that Ada Lovelace, the daughter of Lord Byron, was the first computer programmer. But he generally prefers to perch in the middle of a muddle — say, the string theory wars — and hear evidence from both sides without rushing to adjudication. The essays orbit around three chief concerns: How do we conceive of the world (metaphysics), how do we know what we know (epistemology) and how do we conduct ourselves (ethics)”. [ 6 ]

Steven Poole of The Wall Street Journal commented “…this collection of previously published essays by Jim Holt, who is one of the very best modern science writers”. [ 7 ]


“Pantheon” creator Craig Silverstein on uploading our brains to the internet

“The meaningful difference,” argues Silverstein, “comes down to our lifespan. For humans, our mortality defines so much of our experience. If a human commits murder and receives a life sentence, we understand what that means: a finite number of years. But if a UI with an indefinite lifespan commits murder, what do life sentences mean? Are we talking about a regular human lifespan? 300 years? A thousand? Then there’s love and relationships. Let’s say you find your soulmate and spend a thousand years together. At some point, you may decide you had a good run and move on with someone else. The idea of not growing old with someone feels alien and upsetting. But if we were to live hundreds or thousands of years, our perceptions of relationships and identity may change fundamentally.”

“One of the best” because Pantheon, in addition to having a well-crafted, suspenseful, and heartfelt narrative about love and loss, thoughtfully engages with both the technical and philosophical questions raised by its cerebral premise: Is a perfect digital copy of a person’s mind still meaningfully human? Does uploaded intelligence, which combines the processing power of a supercomputer with the emotional intelligence of a sentient being, have a competitive edge over cold, unfeeling artificial intelligence? How would uploaded intelligence compromise ethics or geopolitical strategy?

“Underrated” because Pantheon was produced by, and first aired on, AMC+, a streaming service that, owing to the dominance of Netflix, HBO Max, Disney+, and Amazon Prime, has but a fraction of its competitors’ subscribers and which, motivated by losses in ad revenue, ended up canceling the show’s highly anticipated (and fully completed) second season in exchange for tax write-offs. Although Pantheon has since been salvaged by Netflix, […] its troubled distribution history resulted in the show becoming a bit of a hidden gem, rather than the global hit it could have been, had it premiered on a platform with more eyeballs.

Still, the fact that Pantheon managed to endure and build a steadily growing cult following is a testament to the show’s quality and cultural relevance. Although the concept of uploaded intelligence is nothing new, and has been tackled by other prominent sci-fi properties like Black Mirror and Altered Carbon, Pantheon is unique in that it explores not only how this hypothetical technology would affect us on a personal level, but also how it might play out on a societal level. Furthermore, Pantheon’s take is a nuanced one, rejecting both techno-pessimism and techno-optimism in favor of what series creator Craig Silverstein calls “techno-realism.”