Year 2022

For more than 250 years, mathematicians have wondered if the Euler equations might sometimes fail to describe a fluid’s flow. A new computer-assisted proof marks a major breakthrough in that quest.

According to reports from Spanish newspaper El País, researchers have discovered a way to speed up, slow down, and even reverse quantum time by exploiting unusual properties of the quantum world. It’s a huge breakthrough, which the researchers have detailed in a series of six new papers featured in Advancing Physics.

The papers were originally published in 2018, and they detail how researchers were able to rewind time to a previous scene, and even skip several scenes forward. Being able to reverse and control quantum time is a huge step forward, especially given the growing interest in quantum simulators.

The realm of quantum physics is a complex one, no doubt, and with analog quantum computers showing such promise at solving intense problems, it only seems fitting that research into controlling and reversing quantum time would prove so fruitful. The researchers say that the control they can achieve over the quantum world is very similar to controlling a movie.

In 2022, Elon Musk’s Neuralink tried – and failed – to secure permission from the FDA to run a human trial of its implantable brain-computer interface (BCI), according to a Reuters report published Thursday.

Citing seven current and former employees, speaking on the condition of anonymity, Reuters reported that the regulatory agency found “dozens of issues” with Neuralink’s application that the company must resolve before it can begin studying its tech in humans.

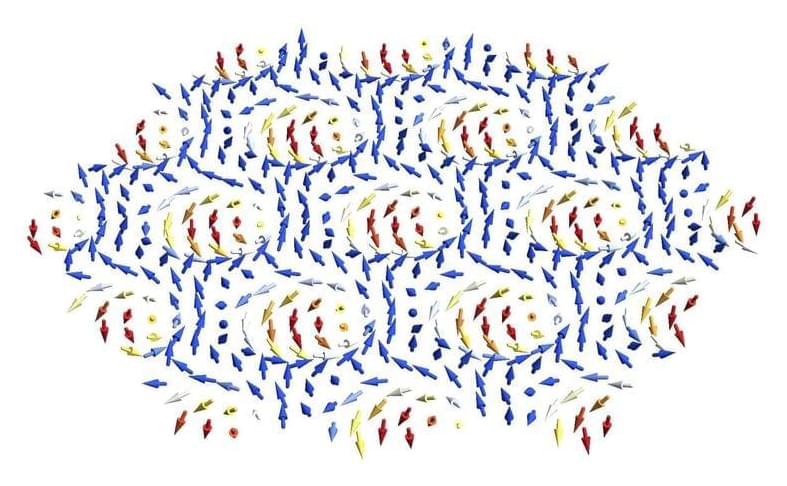

In work that could lead to important new physics with potentially heady applications in computer science and more, MIT scientists have shown that two previously separate fields in condensed matter physics can be combined to yield new, exotic phenomena.

The work is theoretical, but the researchers are excited about collaborating with experimentalists to realize the predicted phenomena. The team describes the conditions necessary to achieve that ultimate goal in a paper published in the February 24 issue of Science Advances.

“This work started out as a theoretical speculation, and ended better than we could have hoped,” says Liang Fu, a professor in MIT’s Department of Physics and leader of the work. Fu is also affiliated with the Materials Research Laboratory. His colleagues are Nisarga Paul, a physics graduate student, and Yang Zhang, a postdoctoral associate who is now a professor at the University of Tennessee.

Researchers at the Massachusetts Institute of Technology (MIT) have developed an X-ray vision headset that has been likened to Superman’s powers. The device combines computer vision and wireless perception to automatically locate items hidden under a stack of papers or inside a box.

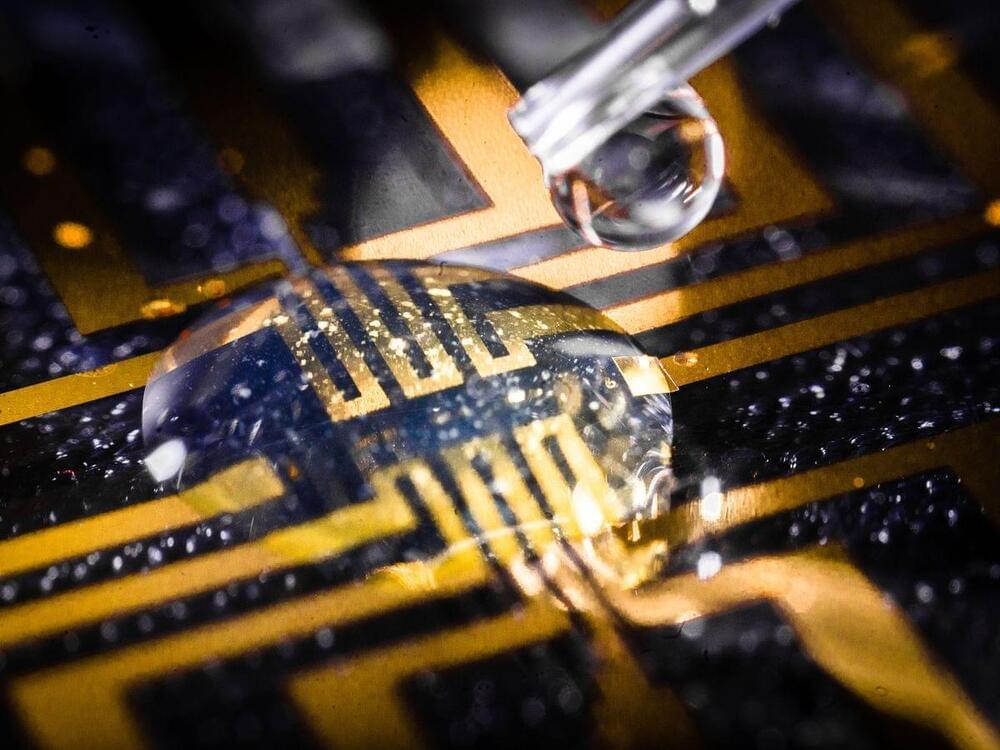

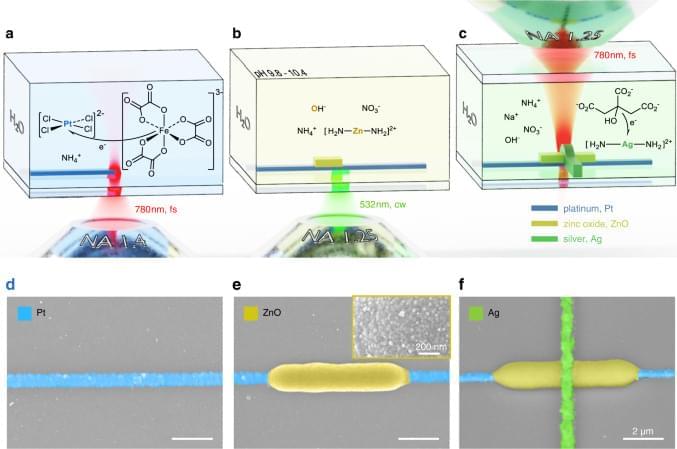

Printed organic and inorganic electronics continue to be of large interest for several applications. Here, the authors propose laser printing as a facile process for fabricating printed electronics with minimum feature sizes below 1 µm and demonstrate functional diodes, memristors, and physically unclonable functions.

Just in case people are curious how accurate the news is, the following article says “Nvidia, AMD, and TSMC will still bear the bulk of the risk for establishing manufacturing within the United States.” The reality is that neither Nvidia nor AMD makes chips. In that list, only TSMC is a chip manufacturer.

The U.S. Secretary of Commerce reminds investors that the federal government supports a sweeping shift in how and where chips are made.

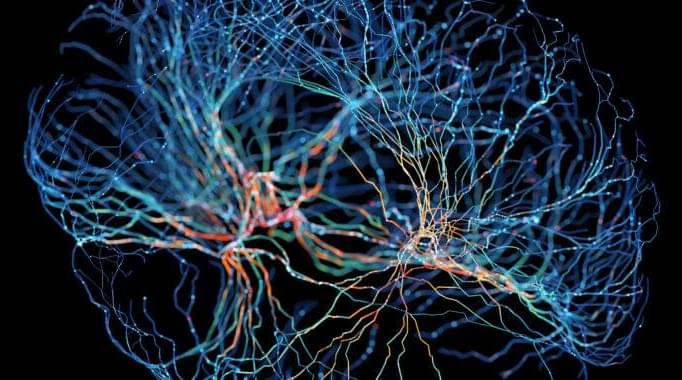

Trying to make computers more like human brains isn’t a new phenomenon. However, a team of researchers from Johns Hopkins University argues that there could be many benefits in taking this concept a bit more literally by using actual neurons, though there are some hurdles to jump first before we get there.

In a recent paper, the team laid out a roadmap of what’s needed before we can create biocomputers powered by human brain cells (not taken from human brains, though). Further, according to one of the researchers, there are some clear benefits the proposed “organoid intelligence” would have over current computers.

“We have always tried to make our computers more brain-like,” Thomas Hartung, a researcher at Johns Hopkins University’s Environmental Health and Engineering department and one of the paper’s authors, told Ars. “At least theoretically, the brain is essentially unmatched as a computer.”

In a new season of Tech Tonic, FT tech journalists Madhumita Murgia and John Thornhill investigate the race to build a quantum computer, the impact such machines could have on security, innovation and business, and the confounding physics of the quantum world.