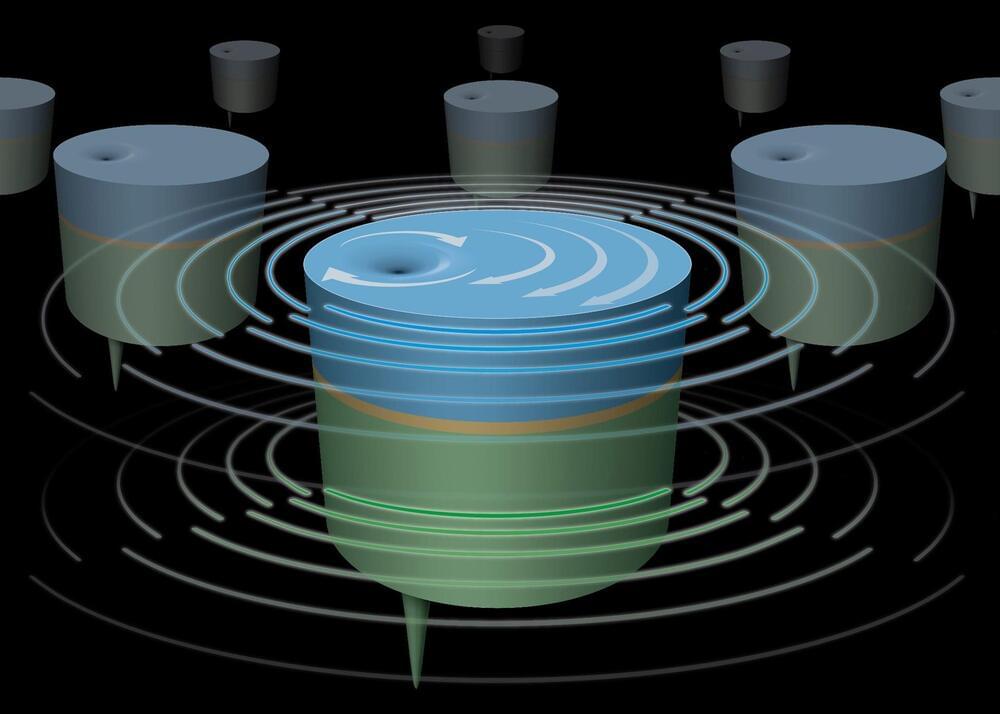

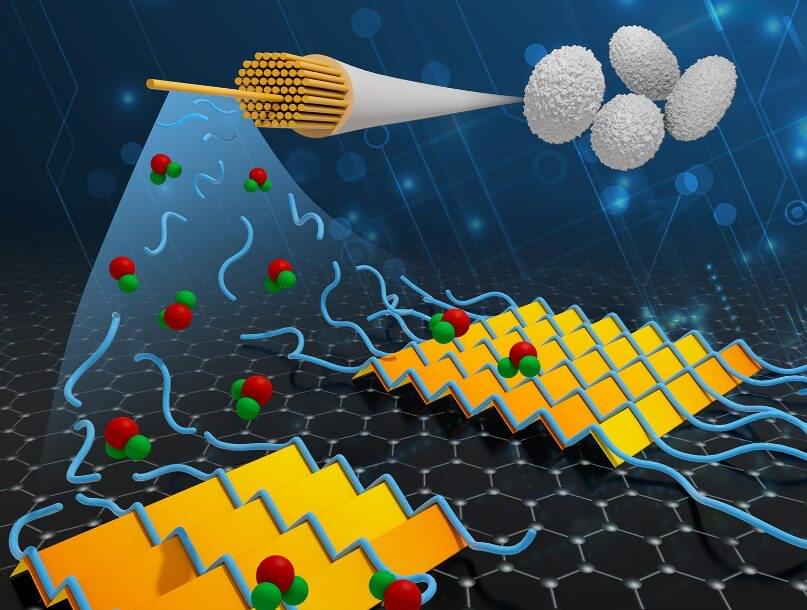

Brookhaven National Laboratory researchers are working to develop ways to synchronize the magnetic spins in nanoscale devices to build tiny antennas that generate or receive signals, along with other electronics.

Upton, New York — Scientists at the U.S. Department of Energy’s Brookhaven National Laboratory are seeking ways to synchronize the magnetic spins in nanoscale devices to build tiny yet more powerful antennas that generate or receive signals, along with other electronics. Their latest work, published in Nature Communications, shows that stacked nanoscale magnetic vortices separated by an extremely thin layer of copper can be driven to operate in unison, potentially producing a powerful signal that could be put to work in a new generation of cell phones, computers, and other applications.

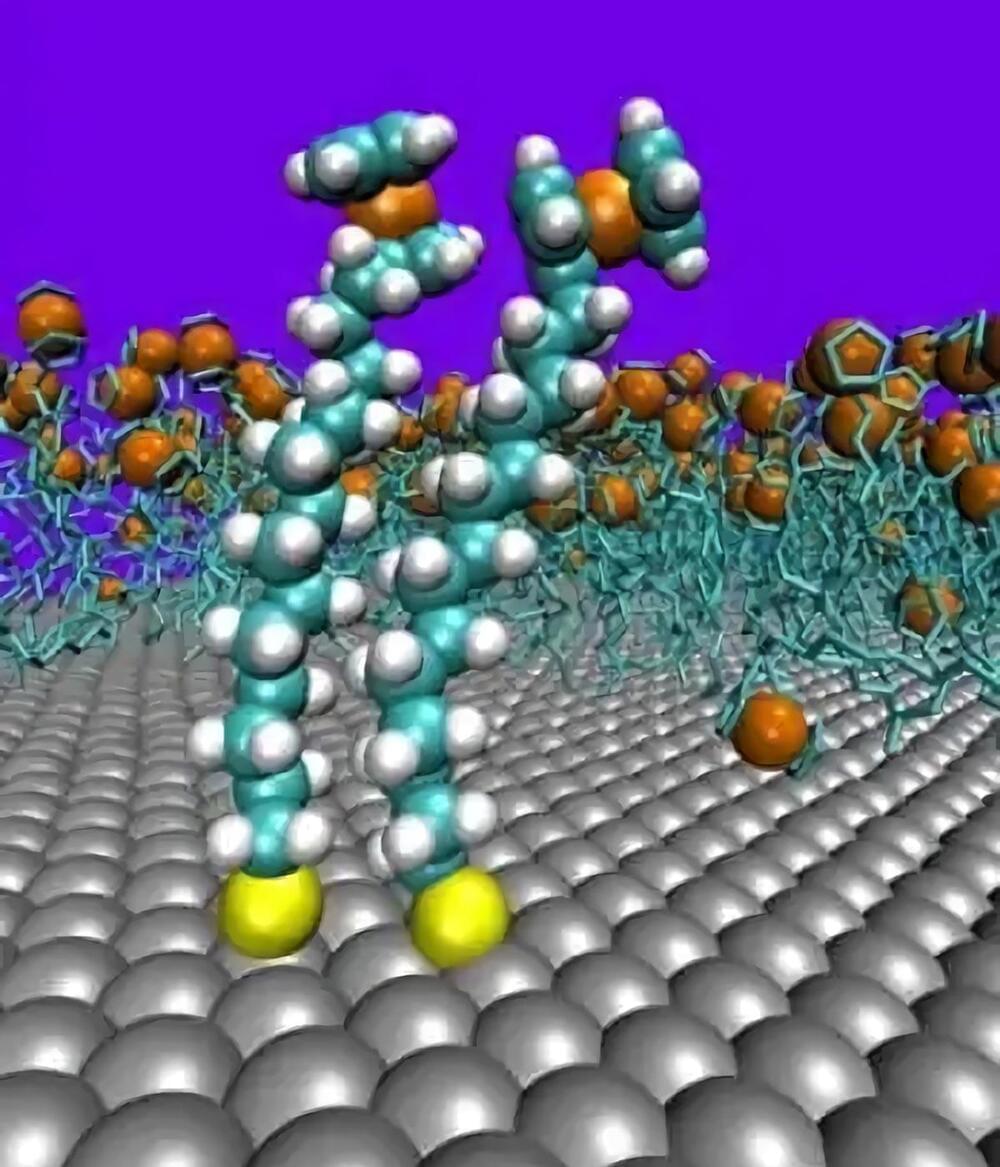

The aim of this “spintronic” technology revolution is to harness the power of an electron’s “spin,” the property responsible for magnetism, rather than its negative charge.