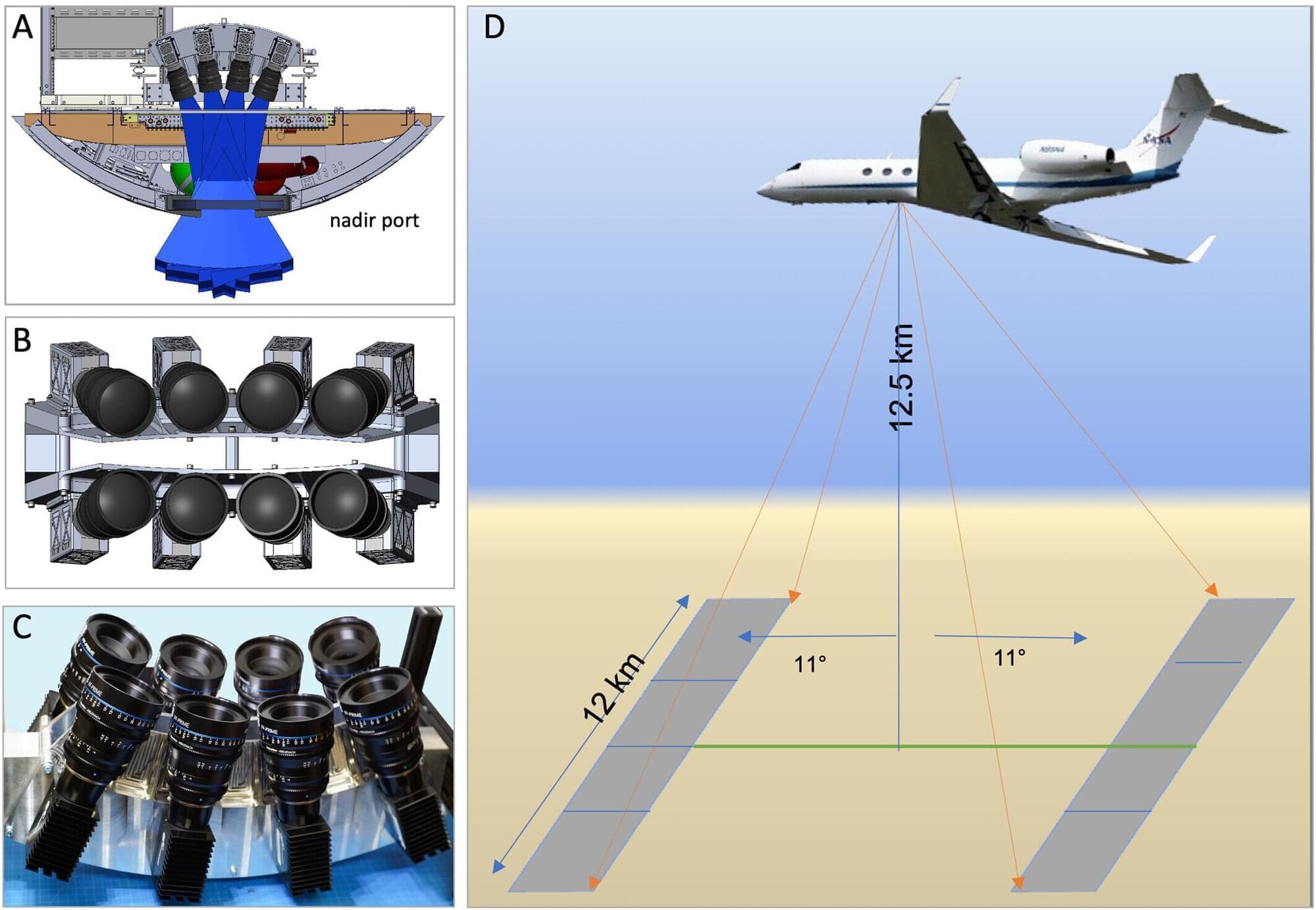

Sometimes to truly study something up close, you have to take a step back. That’s what Andrea Donnellan does. An expert in Earth sciences and seismology, she gets much of her data from a bird’s-eye view, studying the planet’s surface from the air and from space and using that data to make discoveries and deepen our understanding of earthquakes and other geological processes.

“The history of Earth processes is written in the landscapes,” Donnellan said. “Studying Earth’s surface can help us understand what is happening now and what might happen in the future.”

Donnellan, professor and head of the Department of Earth, Atmospheric, and Planetary Sciences in Purdue’s College of Science, has watched Earth for a long time. Her original research focused on studying and tracking glaciers in Antarctica.