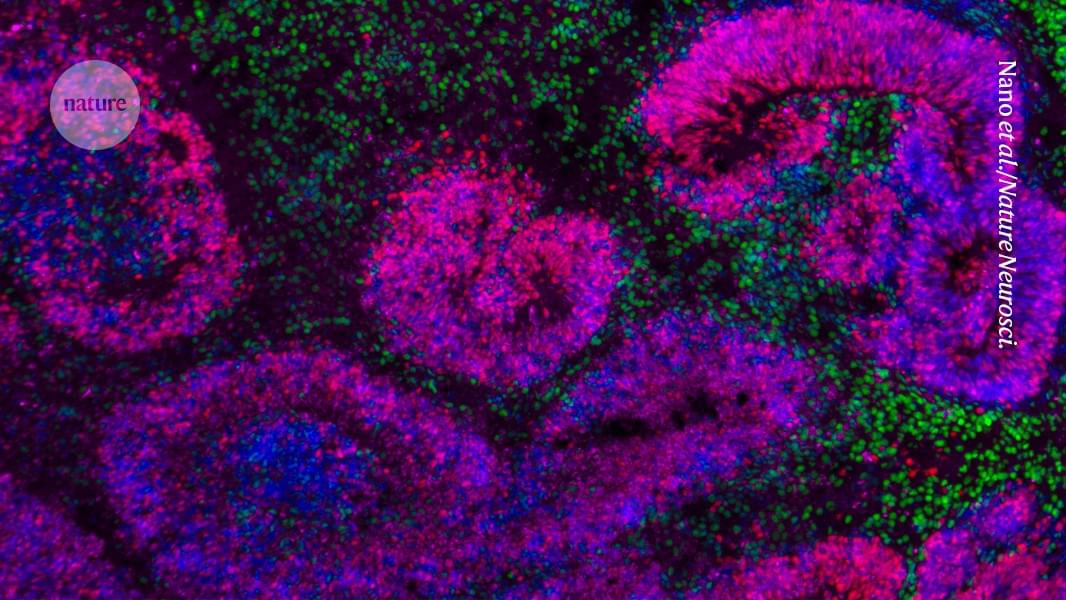

A collection of studies that chart how mammalian brain cells grow and differentiate is a ‘very valuable’ tool for neuroscientists.

Seoul National University College of Engineering announced that a research team led by Professor Hyun Oh Song from the Department of Computer Science and Engineering has developed a new AI technology called KVzip that intelligently compresses the conversation memory of large language model (LLM)-based chatbots used in long-context tasks such as extended dialog and document summarization. The study is published on the arXiv preprint server.

The term conversation memory refers to the temporary storage of sentences, questions, and responses that a chatbot maintains during interaction, which it uses to generate contextually coherent replies. Using KVzip, a chatbot can compress this memory by eliminating redundant or unnecessary information that is not essential for reconstructing context. The technique allows the chatbot to retain accuracy while reducing memory size and speeding up response generation—a major step forward in efficient, scalable AI dialog systems.

Modern LLM chatbots perform tasks such as dialog, coding, and question answering using enormous contexts that can span hundreds or even thousands of pages. As conversations grow longer, however, the accumulated conversation memory increases computational cost and slows down response time.
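To make the idea of trimming conversation memory concrete, here is a minimal sketch in Python. It is not KVzip's actual algorithm: the function name `compress_cache`, the per-entry importance scores, and the `keep_ratio` parameter are all hypothetical, chosen only to show how dropping low-importance entries shrinks the stored context while keeping the surviving entries in their original order.

```python
import math
from typing import List, Sequence


def compress_cache(entries: Sequence[str],
                   scores: Sequence[float],
                   keep_ratio: float) -> List[str]:
    """Keep the highest-scoring fraction of cached entries, in original order.

    `scores` is a hypothetical importance value per entry; how a real system
    such as KVzip derives importance is not shown here.
    """
    if len(entries) != len(scores):
        raise ValueError("entries and scores must have the same length")
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    if not entries:
        return []

    # Number of entries to retain (at least one).
    n_keep = max(1, math.ceil(len(entries) * keep_ratio))

    # Indices of the n_keep most important entries.
    top = sorted(range(len(entries)), key=lambda i: scores[i], reverse=True)[:n_keep]

    # Restore the original ordering so the remaining context stays coherent.
    return [entries[i] for i in sorted(top)]


if __name__ == "__main__":
    memory = ["hello", "um", "the", "deadline", "is", "Friday"]
    importance = [0.2, 0.05, 0.1, 0.9, 0.3, 0.8]
    print(compress_cache(memory, importance, keep_ratio=0.5))
```

In this toy setting, halving `keep_ratio` halves the number of stored entries, which is the kind of saving that matters as conversations grow longer.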

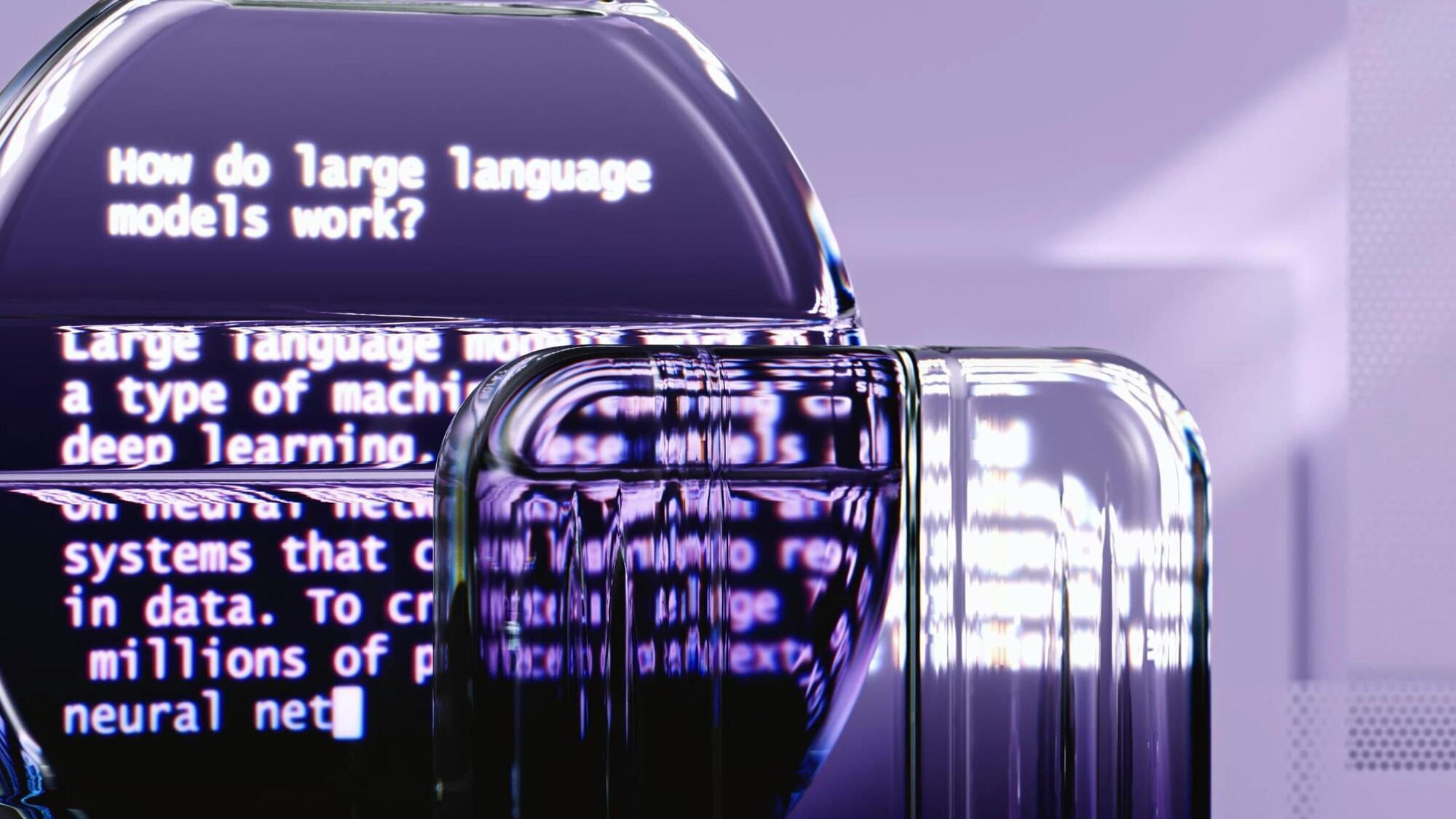

npj Quantum Information volume 11, Article number: 171 (2025).

A newly developed material has been used to create a gel capable of repairing and rebuilding tooth enamel, offering a potential breakthrough in both preventive and restorative dental care.

Scientists from the University of Nottingham’s School of Pharmacy and Department of Chemical and Environmental Engineering designed this bioinspired substance to restore damaged or eroded enamel, reinforce existing enamel, and help guard against future decay. Their findings were published in Nature Communications.

This protein-based gel, which contains no fluoride, can be quickly applied to teeth using the same method dentists use for traditional fluoride treatments. It imitates the natural proteins responsible for guiding enamel formation early in life. Once in place, the gel forms a thin, durable coating that seeps into the tooth surface, filling small cracks and imperfections.

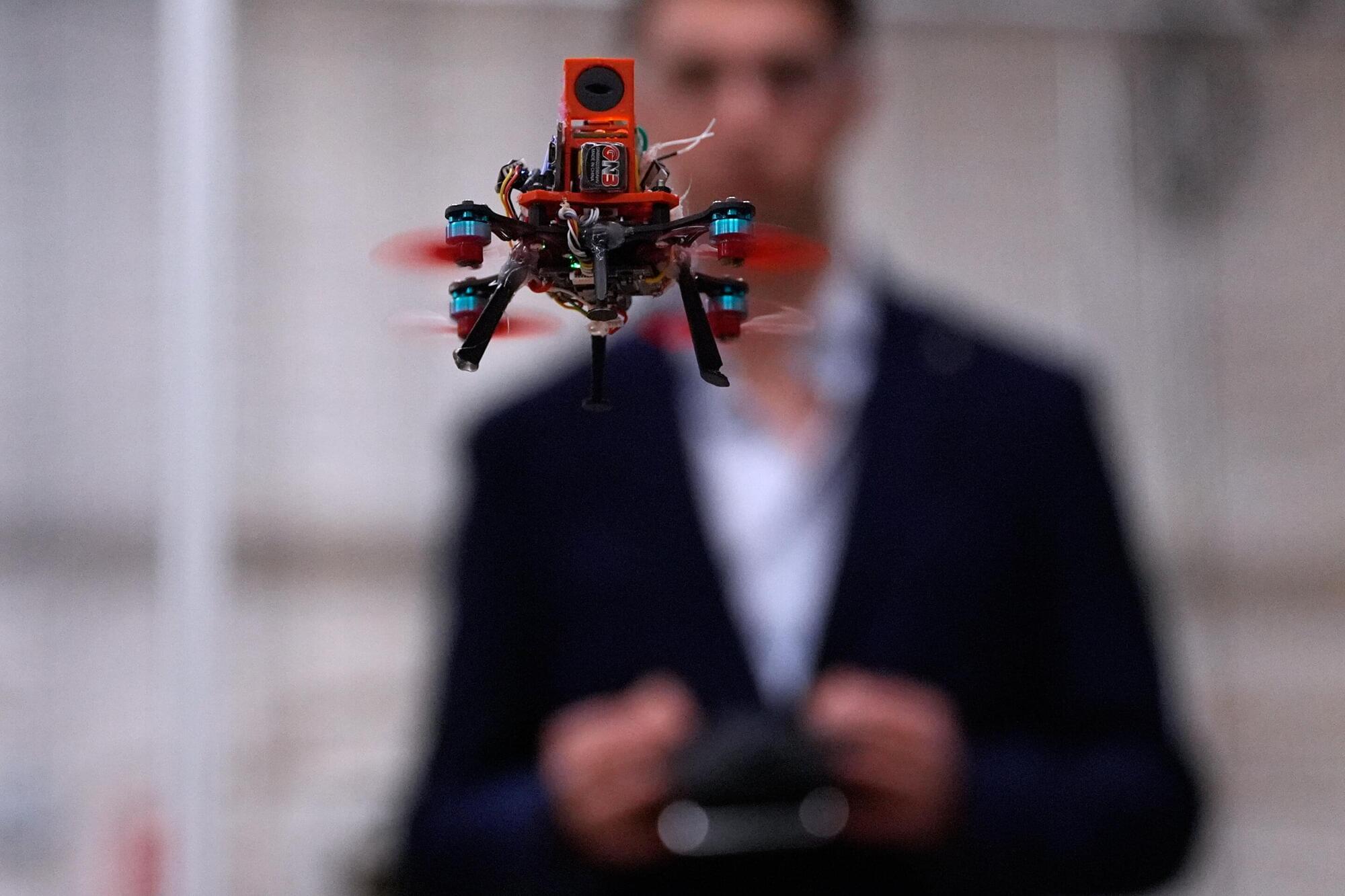

Don’t be fooled by the fog machine, spooky lights and fake bats: the robotics lab at Worcester Polytechnic Institute isn’t hosting a Halloween party.

Instead, it’s a testing ground for tiny drones that can be deployed in search and rescue missions even in dark, smoky or stormy conditions.

“We all know that when there’s an earthquake or a tsunami, the first thing that goes down is power lines. A lot of times, it’s at night, and you’re not going to wait until the next morning to go and rescue survivors,” said Nitin Sanket, assistant professor of robotics engineering. “So we started looking at nature. Is there a creature in the world which can actually do this?”

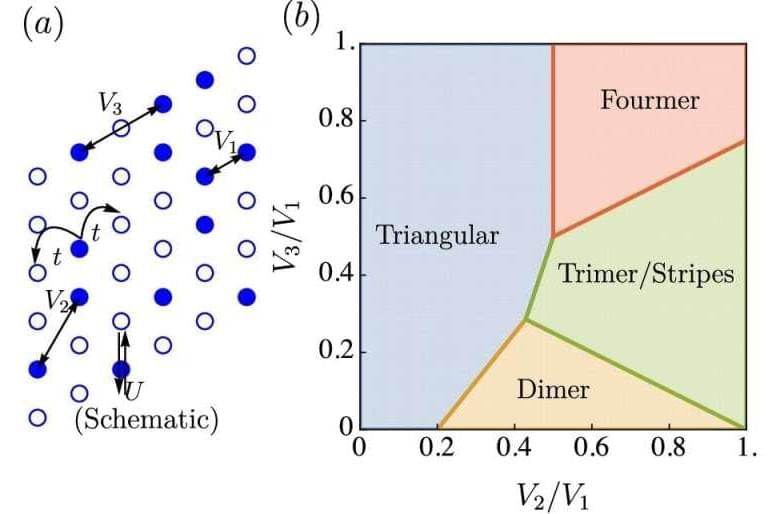

Physicists at Florida State University (FSU) have uncovered a fascinating new phase of matter: a “quantum pinball state” in which electrons act both as conductors and insulators at the same time. In this bizarre quantum regime, some electrons freeze into a rigid crystalline lattice while others move freely around them, much like balls ricocheting around fixed pins in a pinball machine. The discovery offers a new perspective on how quantum materials behave and could pave the way for breakthroughs in quantum computing, spintronics, and superconductivity.

The research, published in npj Quantum Materials, was led by Dr. Aman Kumar, Prof. Hitesh Changlani, and Prof. Cyprian Lewandowski of FSU’s National High Magnetic Field Laboratory. Their study explores how electrons in a two-dimensional “moiré lattice” can transition between solid-like and liquid-like states under certain conditions, forming what physicists call a generalized Wigner crystal.

About 68% of respondents said the pressure to publish their research is greater than it was two to three years ago and only 45% agreed that they have sufficient time for research (see ‘Researchers are feeling the pressure’). Another concern is uncertainty over funding — just 33% of respondents expect funding in their field to grow in the next 2–3 years. And that proportion fell to just 11% in North America, reflecting unprecedented cuts to US research funding this year.

“As a researcher based in Brazil, I strongly relate to the survey’s findings, particularly the growing pressure to publish despite limited time and resources,” says Claudia Suemoto, a gerontologist at the University of São Paulo Medical School. “The demand for productivity has indeed increased in recent years, yet opportunities for funding and access to qualified personnel remain constrained in Brazil and other low- and middle-income countries.”

Suemoto says this imbalance of high demands and restricted resources often forces researchers to do more with less, which could affect the quality and innovation of research. Comments researchers made as part of the survey indicate that the lack of time is down to factors including growing administrative and teaching demands and trying to identify and acquire funding.