Letters to the Editor.

The Letters section represents an opportunity for ongoing author debate and post-publication peer review. View our submission guidelines for Letters to the Editor before submitting your comment.

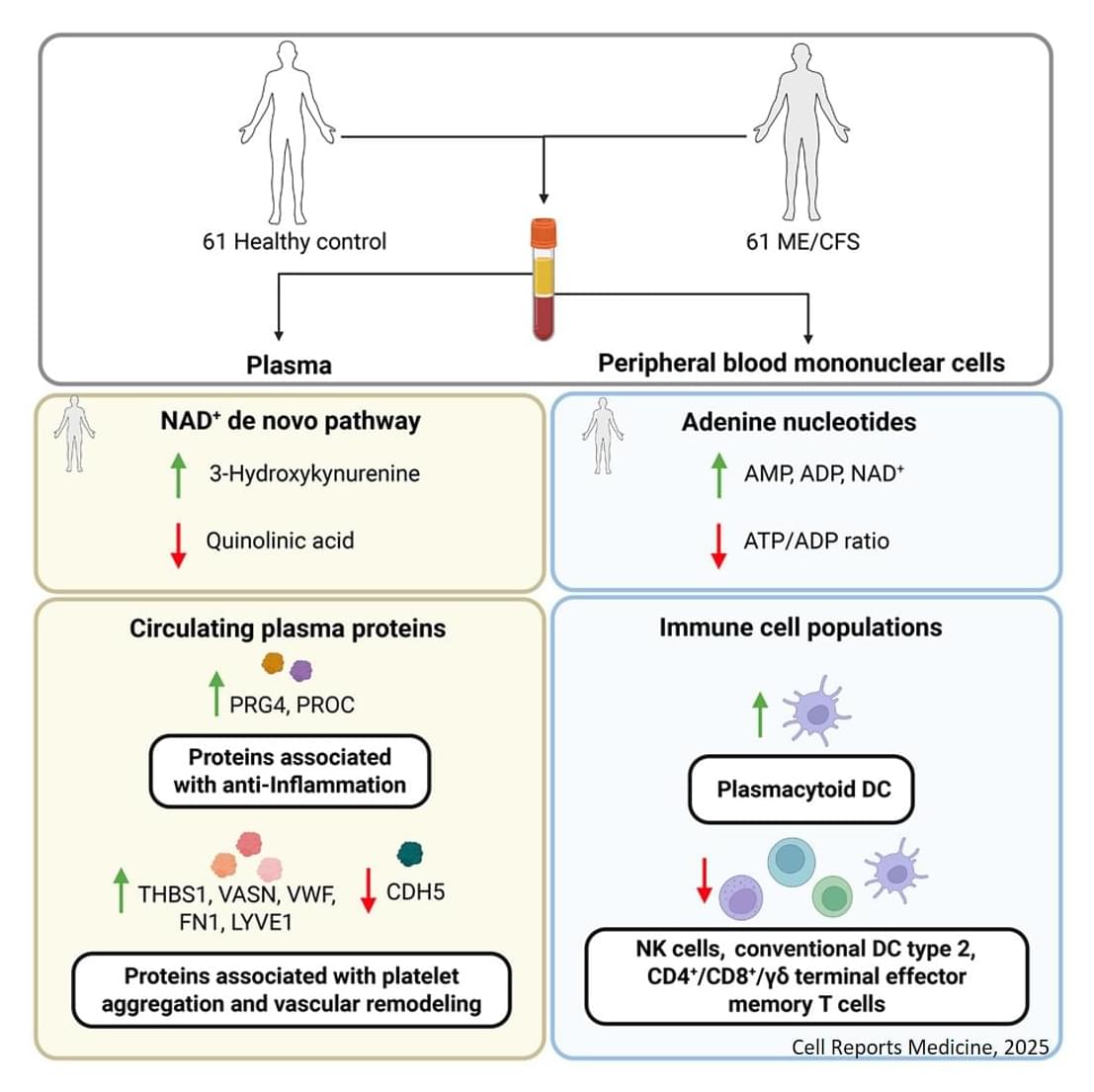

The study compared whole blood samples from 61 people meeting clinical diagnostic criteria for myalgic encephalomyelitis/chronic fatigue syndrome (ME/CFS) with samples from healthy age- and sex-matched volunteers.

White blood cells from ME/CFS patients showed evidence of ‘energy stress’ in the form of higher levels of adenosine monophosphate (AMP) and adenosine diphosphate (ADP), indicating reduced generation of adenosine triphosphate (ATP), the key energy source within cells.
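One standard way to condense the ATP, ADP and AMP balance into a single number is Atkinson's adenylate energy charge, (ATP + 0.5·ADP) / (ATP + ADP + AMP). The source does not say the study reported this metric. The sketch below uses made-up concentrations, not study data, to show why a shift toward AMP and ADP at the expense of ATP lowers the charge.

```python
def adenylate_energy_charge(atp: float, adp: float, amp: float) -> float:
    """Atkinson's adenylate energy charge: (ATP + 0.5*ADP) / (ATP + ADP + AMP).

    Ranges from 0 (all AMP) to 1 (all ATP). Any consistent concentration
    unit works because the result is a unitless ratio.
    """
    total = atp + adp + amp
    if total <= 0:
        raise ValueError("total adenylate pool must be positive")
    return (atp + 0.5 * adp) / total


# Hypothetical illustrative pools (arbitrary units, NOT data from the study):
baseline = adenylate_energy_charge(atp=8.0, adp=1.5, amp=0.5)
stressed = adenylate_energy_charge(atp=6.0, adp=2.5, amp=1.5)
print(f"baseline EC = {baseline:.3f}, stressed EC = {stressed:.3f}")
# prints "baseline EC = 0.875, stressed EC = 0.725"
```

The total adenylate pool is held at 10 units in both cases. The drop in the charge therefore reflects only the redistribution from ATP toward ADP and AMP.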

Profiling of immune cell populations revealed a trend toward less mature subsets of T lymphocytes, dendritic cells and natural killer cells in people with ME/CFS.

Comprehensive analysis of plasma proteins highlighted disruptions of vascular and immune homeostasis in patients with ME/CFS. Levels of proteins associated with activation of the endothelium – the innermost lining of blood vessels – and remodelling of vessel walls were higher, while levels of circulating immunoglobulin-related proteins were lower.

Although cellular energy dysfunction and altered immune profiles have been noted before in patients with ME/CFS, previous studies have often relied on a single analytical platform without examining how these abnormalities co-occur and interact.

“ME/CFS is a complex disorder with undefined mechanisms, limited diagnostic tools and treatments,” said the senior author of the study. “Our findings provide further insights into the clinical and biological complexity of ME/CFS.”

Wearable technologies have the potential to transform gastrointestinal care by enabling continuous monitoring of activity in patients with cirrhosis and aiding in the early detection of hepatic encephalopathy. While these innovations provide valuable clinical insights, further efforts are needed to address challenges related to implementation and data management.

Current research into wearable technology in liver disease supports these possibilities. Studies of wrist-worn activity monitors have shown that reduced activity is associated with increased waitlist mortality among liver transplant candidates, as well as increased hospital admissions and mortality in patients with cirrhosis. Other investigations with wearables have linked sleep disturbances to poorer post-liver transplant outcomes and explored skin patches and transdermal sensors for detecting blood alcohol levels and inflammatory markers predictive of outcomes in cirrhosis, Buckholz said.

A major barrier to widespread implementation in clinical practice is the so-called “wearable paradox,” whereby early adopters of wearable technology tend to be relatively healthy, whereas those at highest risk are less likely to already use such devices, Buckholz noted. Increasing access, understanding, and uptake in vulnerable populations will therefore be critical.

Additional challenges include determining how to distill massive volumes of wearable data into concise formats that can be incorporated into electronic medical records (EMRs) and easily communicated to patients.
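As a rough illustration of what "distilling" wearable data might involve, here is a minimal sketch under stated assumptions. It collapses raw timestamped step counts into per-day totals and a single mean, which is short enough for a clinical note. The function name `summarize_steps`, the input layout and the output fields are all hypothetical. They are not an EMR standard or any vendor's API.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def summarize_steps(samples: list[tuple[str, int]]) -> dict:
    """Collapse (ISO-8601 timestamp, step count) samples into a compact summary.

    Hypothetical format: per-day step totals plus the mean daily total.
    """
    daily: dict[str, int] = defaultdict(int)
    for timestamp, steps in samples:
        # Bucket each reading by calendar date.
        day = datetime.fromisoformat(timestamp).date().isoformat()
        daily[day] += steps
    totals = dict(sorted(daily.items()))
    return {
        "daily_totals": totals,
        # Mean of the daily totals, rounded to whole steps (0 if no data).
        "mean_daily_steps": round(mean(totals.values())) if totals else 0,
    }


# Illustrative readings (invented, not patient data):
readings = [
    ("2024-05-01T08:00", 1200),
    ("2024-05-01T12:30", 900),
    ("2024-05-02T09:15", 400),
    ("2024-05-02T17:45", 300),
]
print(summarize_steps(readings))
# prints {'daily_totals': {'2024-05-01': 2100, '2024-05-02': 700}, 'mean_daily_steps': 1400}
```

A real deployment would also have to handle time zones, device wear time and missing data before any summary could be trusted clinically.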

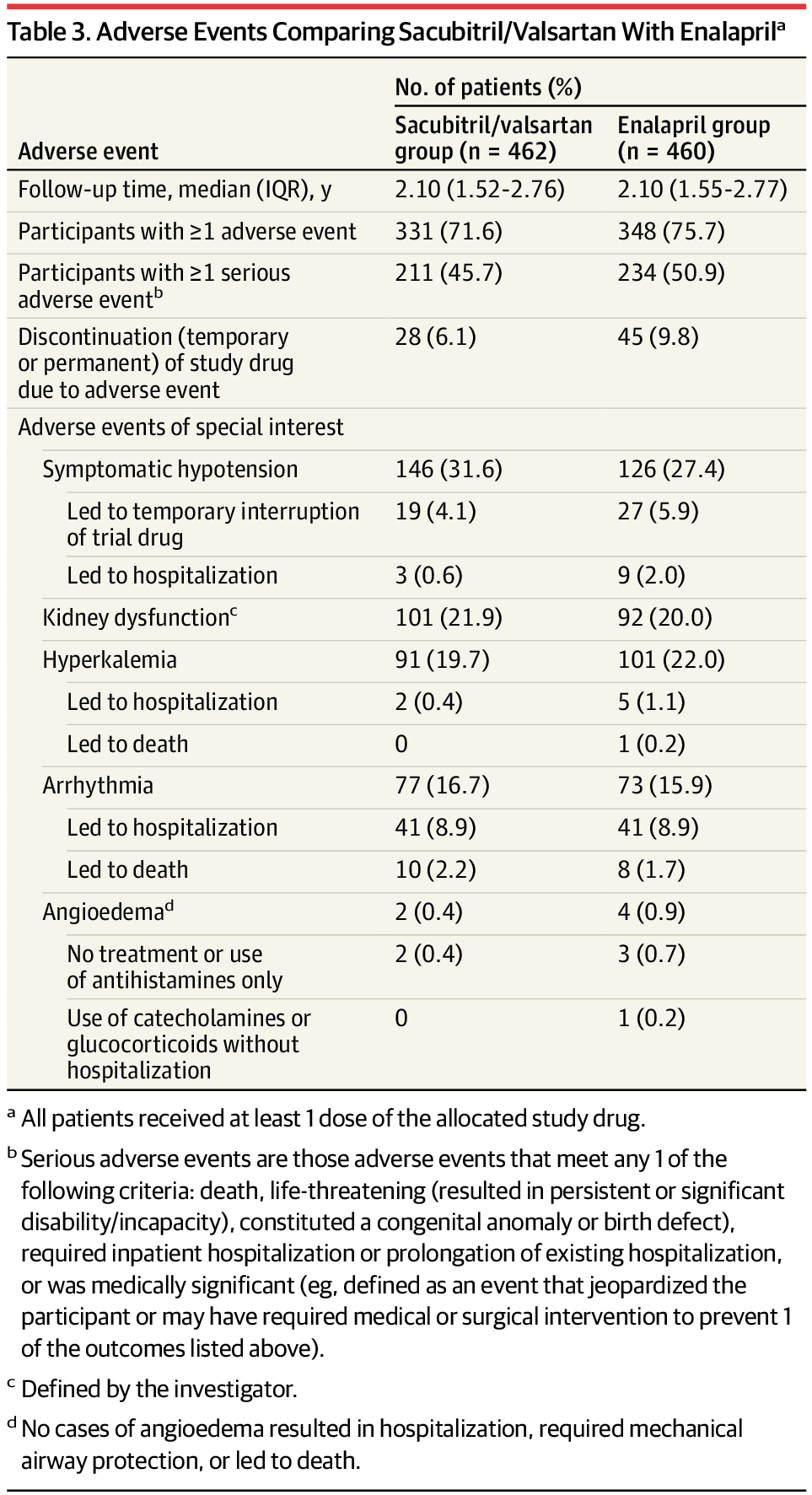

RCT: In patients with Chagas-related HFrEF, sacubitril/valsartan did not reduce rates of cardiovascular death or heart failure hospitalization compared with enalapril.

Although there was a greater reduction in NT-proBNP with sacubitril/valsartan, this did not impact observed clinical outcomes. These findings, in line with the BENEFIT trial, highlight the limited effect of biomarker improvements and antiparasitic therapy on clinical endpoints for Chagas disease.

The most common and severe complication of Chagas disease in its chronic phase is cardiomyopathy, which occurs in 30% to 40% of persons who are infected and can present as chronic myocarditis, conduction system abnormalities, cardioembolic episodes, heart failure (HF), and sudden death.5,11,12 Chagas cardiomyopathy is distinguished by its unique clinical features, including focal myocardial fibrosis, arrhythmogenicity, and ventricular aneurysm formation as well as a markedly high mortality rate, even in the absence of typical comorbidities.13 The reasons patients with HF due to Chagas disease have such a poor prognosis are not fully understood but may include persistent immune-mediated myocardial inflammation triggered by chronic parasitic infection, hypercoagulable state, right ventricular dysfunction, microvascular dysfunction, autonomic disturbance, high rates of ventricular arrhythmias, elevated risk of stroke, conduction disturbances, and ventricular aneurysm formation.5,13,14

Whether guideline-recommended medical therapies for HF are effective in patients with Chagas cardiomyopathy is unknown. No randomized clinical trial to date has been powered to test the efficacy and safety of any treatment in patients with HF caused by Chagas disease, and these patients were not adequately represented in pivotal HF trials.14 Although large randomized clinical trials in this population are lacking, enalapril was selected as the comparator because it is a standard of care for HF management, including in patients with Chagas cardiomyopathy. The study by Szajnbok et al demonstrated that enalapril improved functional class and reduced heart size in patients with chronic Chagas heart disease, suggesting a beneficial hemodynamic effect.15 More recently, Penitente et al reported that enalapril reduced myocardial fibrosis and improved cardiac function in a murine model of chronic Chagas disease, supporting its role in modulating disease progression.16 Sacubitril/valsartan may offer incremental benefit over enalapril in patients with Chagas disease not only through neurohormonal and vasodilator effects but also by mitigating myocardial fibrosis and arrhythmias.14 Additionally, fully understanding the safety of HF treatments in this population is important, as these patients have more right ventricular dysfunction and lower blood pressure compared with patients with other HF etiologies.14

Therefore, the PARACHUTE-HF (Prevention and Reduction of Adverse Outcomes in Chagasic Heart Failure Trial Evaluation) trial was designed to prospectively evaluate the efficacy and safety of the angiotensin receptor-neprilysin inhibitor sacubitril/valsartan in patients with HF with reduced ejection fraction (HFrEF) caused by Chagas disease.

The jawbones and vertebrae of a hominin that lived 773,000 years ago have been found in North Africa and could represent a common ancestor of Homo sapiens, Neanderthals and Denisovans.

Celebrating a 7-year anniversary of the first edition of my book The Syntellect Hypothesis (2019)! I can’t help but feel like I’m watching a long-launched probe finally begin to transmit back meaningful data. What started as a speculative framework—half philosophy, half systems theory—has aged into something uncannily timely, as if reality itself had been quietly reading the manuscript and taking notes. In those seven years, AI has gone from clever tool to cognitive co-actor, collective intelligence has accelerated from metaphor to measurable force, and the idea of a convergent, self-reflective Syntellect no longer feels like science fiction so much as a working hypothesis under active experimental validation.

Looking back, the book captured a moment just before the curve went vertical. Looking forward, it reads less like a prediction and more like an early cartography of a terrain we’re now actively inhabiting. The signal is stronger, the noise louder, and the questions sharper—but the core intuition remains intact: intelligence doesn’t merely grow, it integrates. And once it does, history stops being a line and starts behaving more like a phase transition.

Here’s what Google summarizes about the book: The Syntellect Hypothesis: Five Paradigms of the Mind’s Evolution by Alex M. Vikoulov is a book that explores the idea of a future phase transition where human consciousness merges with technology to form a global supermind, or “Syntellect”. It covers topics like digital physics, the technological singularity, consciousness, and the evolution of humanity, proposing that we are on the verge of becoming a single, self-aware superorganism. The book is structured around five paradigms: Noogenesis, Technoculture, the Cybernetic Singularity, Theogenesis, and Universal Mind.

Key Concepts.

Syntellect: A superorganism-level consciousness that emerges when the intellectual synergy of a complex system (like humanity and its technology) reaches a critical threshold.

Phase Transition: The book posits that humanity is undergoing a metamorphosis from individual intellect to a collective, higher-order consciousness.

Five Paradigms: The book is divided into five parts that map out this evolutionary journey:

1. Noogenesis: The emergence of mind through computational biology.
2. Technoculture: The rise of human civilization and technology.
3. The Cybernetic Singularity: The point of Syntellect emergence.
4. Theogenesis: Transdimensional propagation and expansion.
5. Universal Mind: The ultimate cosmic level of awareness.

Themes and Scope.

“When we see a region on the sun with an extremely complex magnetic field, we can assume that there is a large amount of energy there that will have to be released as solar storms,” said Dr. Louise Harra.

How can astronomers observe and study the Sun’s activity most efficiently, given that the Sun’s rotation periodically carries active regions out of view from Earth? A recent study published in Astronomy & Astrophysics addresses this question. In it, a team of scientists investigated new methods for studying the Sun, with the goal of better understanding its activity and how it influences Earth.

For the study, the researchers collected data using NASA’s Solar Dynamics Observatory, which observes the Earth-facing side of the Sun, and the European Space Agency’s Solar Orbiter, which orbits the Sun roughly once every six months. The goal was to observe the solar active region NOAA 13664, one of the most misunderstood and active regions observed over the last 20 years.

During the 94-day study period, from April to June 2024, the researchers observed a full cycle of activity from NOAA 13664. The cycle began with a 20-day buildup of energy, peaked approximately one month after onset, and was followed by a wind-down period lasting approximately two months. These results could help scientists better understand the Sun’s magnetic field activity and predict future solar activity.

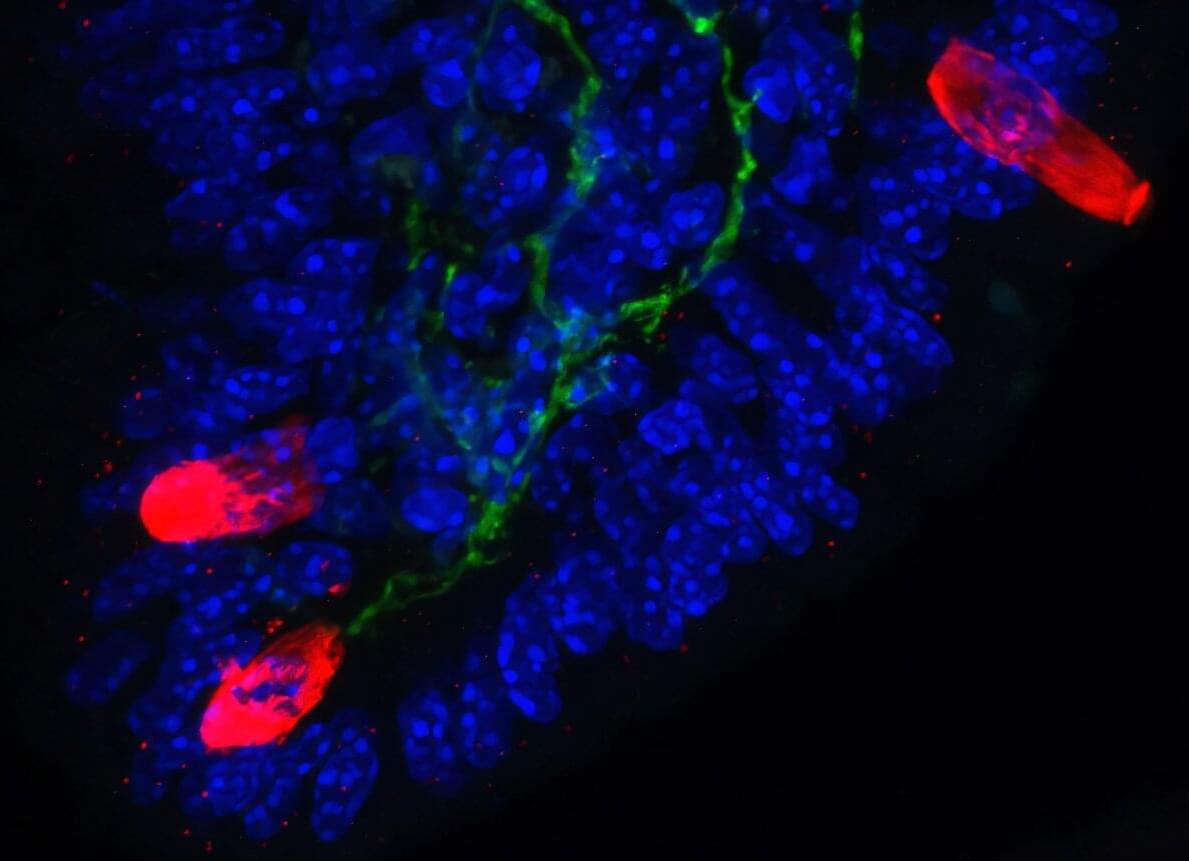

Pain-sensing neurons in the gut kindle inflammatory immune responses that cause allergies and asthma, according to a new study from Weill Cornell Medicine. The findings, published in Nature, suggest that current drugs may fall short because they target only the immune component of these conditions and overlook the contribution of neurons.

“Today’s blockbuster biologics are sometimes only 50% effective and when the treatments do work, they sometimes lose their efficacy over time,” said senior author Dr. David Artis, director of the Jill Roberts Institute for Research in Inflammatory Bowel Disease and the Michael Kors Professor in Immunology at Weill Cornell.

While the idea may be new to the field, Dr. Artis has been thinking about the role the nervous system may play in allergies and asthma for about two decades. For example, many of the symptoms that characterize these conditions, like itching and wheezing, are known to be neuronally controlled. “That was one of the clues that prompted us to look closer for a connection,” Dr. Artis said.

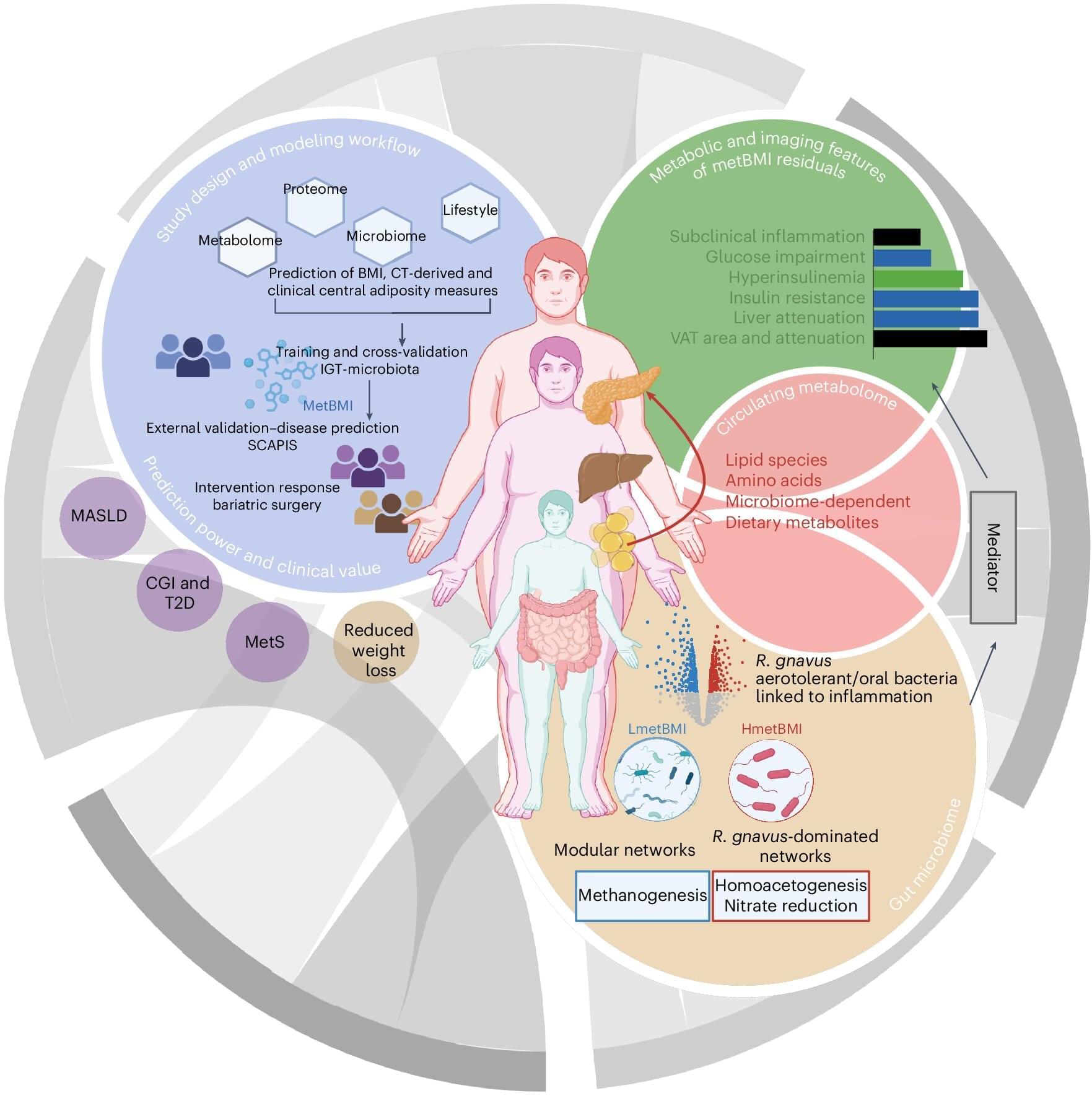

Researchers at Leipzig University and the University of Gothenburg have developed a novel approach to assessing an individual’s risk of metabolic diseases such as diabetes or fatty liver disease more precisely. Instead of relying solely on the widely used body mass index (BMI), the team developed an AI-based computational model using metabolic measurements. This so-called metabolic BMI shows that people of normal weight with a high metabolic BMI have up to a fivefold higher risk of metabolic disease. The findings have been published in the journal Nature Medicine.

The conventional body mass index, calculated using height and weight, may indicate overweight but does not reflect how healthy or unhealthy body fat actually is. According to BMI classifications, up to 30% of people are considered to be of normal weight but already show dangerous metabolic changes. Conversely, there are individuals with an elevated BMI whose metabolism remains largely unremarkable. This discrepancy can lead to at-risk patients being identified and treated too late.
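The conventional index is simply weight in kilograms divided by the square of height in metres. The sketch below pairs it with the standard adult WHO cut-offs. Because only height and weight enter the formula, two people with identical measurements always land in the same category, whatever their blood markers show. It does not reproduce the study’s metabolic BMI model, which the text does not describe in detail. The function names are illustrative.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Conventional body mass index: weight (kg) divided by height (m) squared."""
    if height_m <= 0:
        raise ValueError("height must be positive")
    return weight_kg / height_m ** 2


def who_category(value: float) -> str:
    """Adult WHO cut-offs: <18.5 underweight, 18.5 to <25 normal, 25 to <30 overweight, >=30 obese."""
    if value < 18.5:
        return "underweight"
    if value < 25:
        return "normal weight"
    if value < 30:
        return "overweight"
    return "obese"


value = bmi(70, 1.75)
print(f"BMI = {value:.1f} ({who_category(value)})")
# prints "BMI = 22.9 (normal weight)"
```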

For the current scientific study, the international research team analyzed data from two large Swedish population studies involving a total of almost 2,000 participants. In addition to standard health and lifestyle parameters, extensive laboratory data from blood samples and analyses of the gut microbiome were collected. Based on this dataset, the researchers developed a computational model that predicts metabolic BMI.