CAR-T expert Terry Fry of the University of Colorado Cancer Center talks about new data and future applications for this immunotherapy.

Behind the Veil of Ignorance, no one knows who they are. They lack clues as to their class, their privileges, or their disadvantages. They exist as an impartial group, tasked with designing a new society with its own conception of justice.

And — here’s the kicker — what if you had to make those decisions without knowing who you would be in this new society?

“But the nature of man is sufficiently revealed for him to know something of himself and sufficiently veiled to leave much impenetrable darkness, a darkness in which he ever gropes, forever in vain, trying to understand himself.”

Philosopher John Rawls asked just that in a thought experiment known as “the Veil of Ignorance” in his 1971 book, A Theory of Justice.

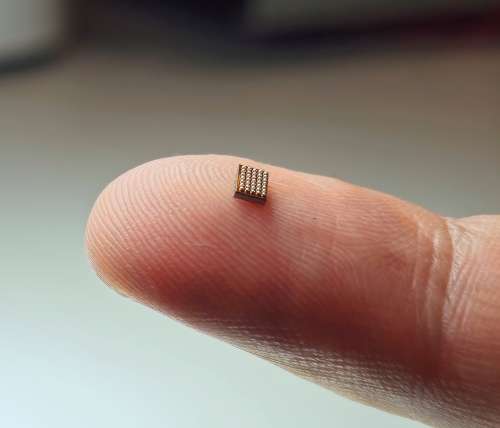

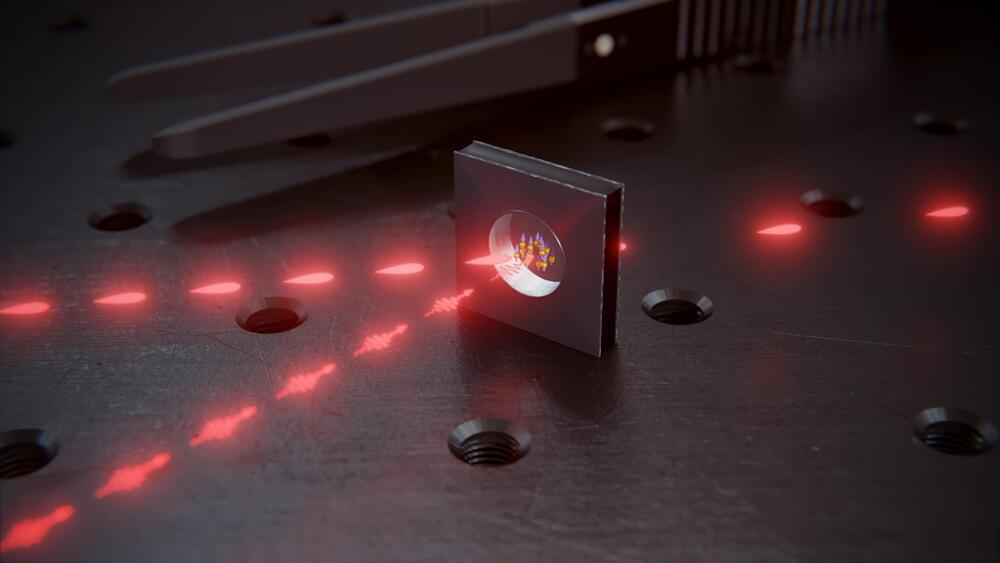

Light pulses can be stored and retrieved in the glass cell, which is filled with rubidium atoms and is only a few millimeters in size.

Light particles are particularly suited to transmitting quantum information.

Researchers at the University of Basel have built a quantum memory element based on atoms in a tiny glass cell. In the future, such quantum memories could be mass-produced on a wafer.

It is hard to imagine our lives without networks such as the internet or mobile phone networks. Similar networks are planned for future quantum technologies. They would enable tap-proof transmission of messages using quantum cryptography and make it possible to link quantum computers to one another.

Like their conventional counterparts, such quantum networks require memory elements in which information can be temporarily stored and routed as needed. A team of researchers at the University of Basel led by Professor Philipp Treutlein has now developed such a memory element, which can be micro-fabricated and is, therefore, suitable for mass production. Their results were published in Physical Review Letters.

Late on Thursday, Tesla began customer deliveries of its semi-trailer truck, dubbed the Semi.

The first customer was Pepsi, which ordered 100 of the Class 8 trucks after the vehicle's debut in 2017. Budweiser and Walmart are among the other customers for the fully electric truck, which features a central driving position, just like the McLaren F1 supercar.

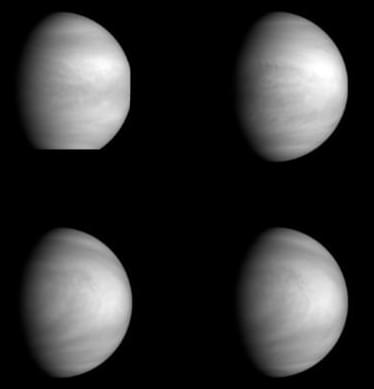

Researchers may have identified the missing component in the chemistry of the Venusian clouds that would explain their color and splotchiness in the UV range, solving a long-standing mystery.

What are the clouds of Venus made of? Scientists know they consist mainly of sulfuric acid droplets, with some water, chlorine, and iron. The concentrations of these components vary with height in the thick and hostile Venusian atmosphere. Until now, however, scientists had been unable to identify the missing component that would explain the clouds' patches and streaks, which are visible only in the UV range.

In a new study published in Science Advances, researchers from the University of Cambridge synthesised iron-bearing sulfate minerals that are stable under the harsh chemical conditions in the Venusian clouds.

The findings are published in Science.

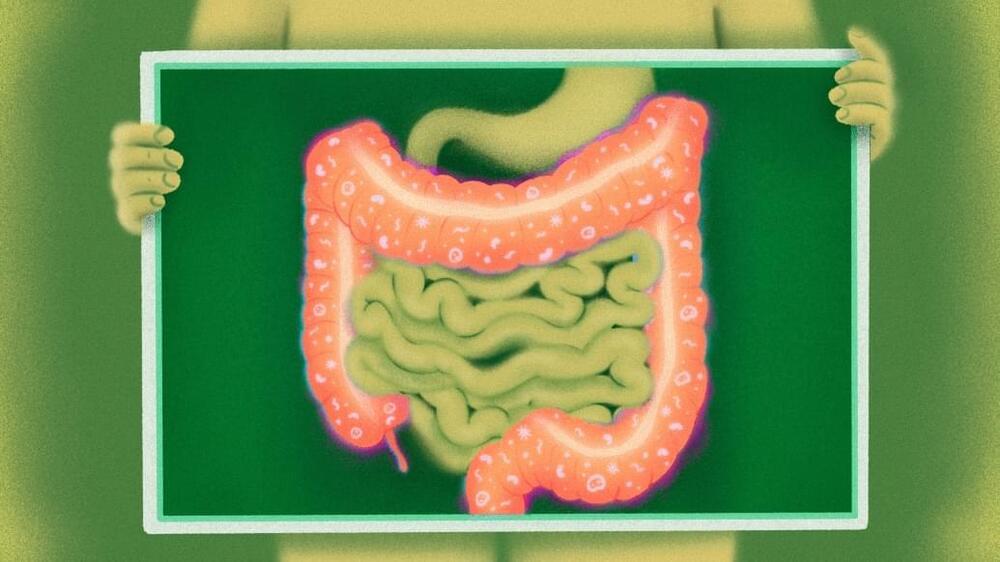

“This is a good example of how understanding a mechanism helps you to develop an alternative therapy that’s more beneficial. Once we identified the mechanism causing the colitis, we could then develop ways to overcome this problem and prevent colitis while preserving the anti-tumor effect,” said senior study author Gabriel Nunez, M.D., Paul de Kruif Professor of Pathology at Michigan Medicine.

Recent research suggests that a number of neuronal characteristics, traditionally believed to stem from the cell body or soma, may actually originate from processes in the dendrites. This discovery has significant implications for the study of degenerative diseases and for understanding the different states of brain activity during sleep and wakefulness.

The brain is an intricate network of billions of neurons. Each neuron's cell body, or soma, communicates simultaneously with thousands of other neurons through synapses, the links across which information is exchanged. Each neuron also receives incoming signals through its dendritic trees, which branch extensively and can extend over great lengths, much like the limbs of a vast and complex tree.

For the last 75 years, a core hypothesis of neuroscience has been that the basic computational element of the brain is the neuronal soma, and that the long, branching dendritic trees are merely cables that collect incoming signals from the neuron's thousands of connections. This long-standing hypothesis has now been called into question.
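The difference between the two views can be caricatured in a few lines of code. This is a toy sketch, not a model from the research described here: it assumes simple weighted sums and hard thresholds, and the functions `point_neuron` and `branch_neuron` are hypothetical names. In the point-neuron view the soma only sees the total input, so it cannot distinguish inputs clustered on one dendritic branch from inputs spread across branches. In the hypothetical branch model, each branch thresholds its own input first, so the spatial arrangement of inputs changes the outcome.

```python
from typing import List

def point_neuron(inputs: List[float], weights: List[float], threshold: float) -> int:
    # Point-neuron view: dendrites are passive cables, so the soma simply
    # sums all weighted inputs and fires (returns 1) if the sum reaches threshold.
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def branch_neuron(branch_inputs: List[List[float]], branch_weights: List[List[float]],
                  branch_threshold: float, soma_threshold: int) -> int:
    # Hypothetical dendritic-computation view: each branch first thresholds
    # its own summed input (a crude stand-in for a local dendritic event),
    # and the soma then fires if enough branches were active.
    active = 0
    for xs, ws in zip(branch_inputs, branch_weights):
        local = sum(x * w for x, w in zip(xs, ws))
        if local >= branch_threshold:
            active += 1
    return 1 if active >= soma_threshold else 0

def flatten(rows: List[List[float]]) -> List[float]:
    # The point neuron ignores which branch an input arrived on.
    return [v for row in rows for v in row]

# Two branches with two synapses each, all weights equal (illustrative values).
weights = [[1.0, 1.0], [1.0, 1.0]]
clustered = [[1.0, 1.0], [0.0, 0.0]]  # both active inputs on branch 1
dispersed = [[1.0, 0.0], [1.0, 0.0]]  # one active input on each branch

for name, pattern in [("clustered", clustered), ("dispersed", dispersed)]:
    p = point_neuron(flatten(pattern), flatten(weights), threshold=2.0)
    b = branch_neuron(pattern, weights, branch_threshold=2.0, soma_threshold=1)
    print(name, p, b)
```

Both patterns deliver the same total input, so the point neuron fires for both, while the branch model fires only for the clustered pattern. Real dendrites are far more complex; the hard thresholds here are chosen only to make the contrast easy to see.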