We’ve been looking everywhere for decades.

Register for our 8-week seminar, Religion and Science: https://www.religiondepartment.com/ReligionAndScience.

Religion and science don’t have to be enemies. Andrew Henry talks with scholar Andrew Aghapour about what the Religion and Science series revealed, how science studies religious experience, and where scientific approaches can quietly mislead.

The encounter, deep beneath the surface of Indonesia’s Maluku Islands, did not just produce striking images. It opened a rare window on one of the ocean’s most mysterious creatures: the coelacanth, a fish long thought to have been extinct for millions of years.

In October 2024, French divers Alexis Chappuis and Julien Leblond descended to around 145 metres off the Maluku archipelago, in eastern Indonesia. They were using advanced rebreather systems and specialised suits designed for long, deep technical dives.

For two years, Chappuis had been poring over charts and sonar data, mapping underwater cliffs and cold upwellings that might harbour coelacanths. The terrain he targeted was steep, fragmented rock riddled with ledges and crevices, a layout similar to known coelacanth habitats in other parts of the Indian Ocean.

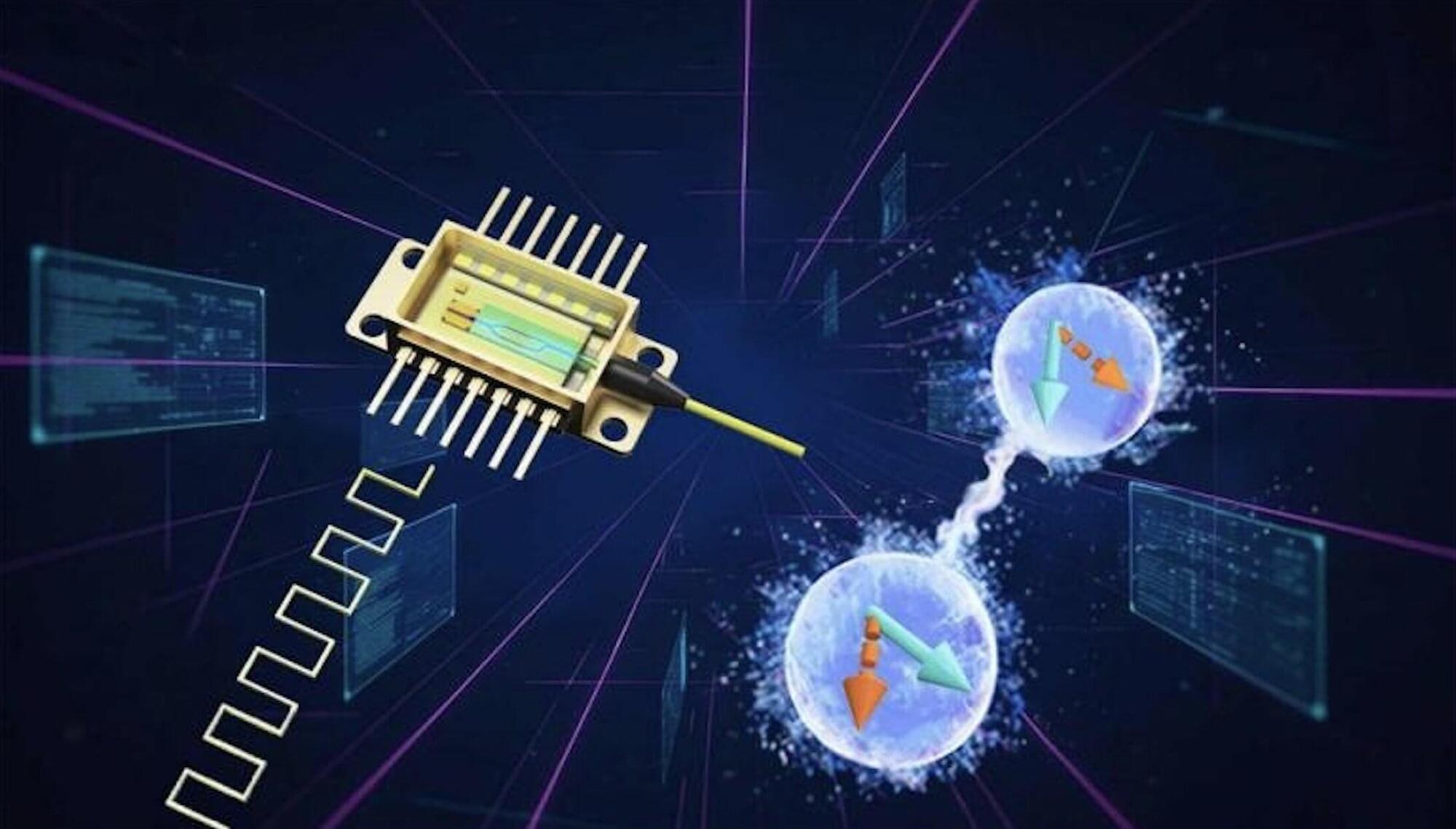

Quantum technologies are cutting-edge systems that process, transfer, or store information by leveraging quantum mechanical effects, particularly a phenomenon known as quantum entanglement. Entanglement is a correlation between two or more particles, which can be far apart, whereby measuring the state of one also determines the state of the others.
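As a rough illustration of that correlation, the sketch below samples measurements of a two-photon Bell state, (|00> + |11>)/√2, using the Born rule (outcome probability equals the squared amplitude). The state, the function names, and the basis labels are assumptions chosen for illustration and are not taken from the study described here. The sketch only reproduces the perfect same-basis correlation, which a classical shared coin could also mimic; showing genuinely quantum behaviour would require measurements in several bases.

```python
import math
import random

# Hypothetical example state: amplitudes of a two-photon Bell state
# over the joint outcomes 00, 01, 10, 11 (first digit = photon A, second = photon B).
BELL_PHI_PLUS = {
    "00": 1 / math.sqrt(2),
    "01": 0.0,
    "10": 0.0,
    "11": 1 / math.sqrt(2),
}


def outcome_probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return {outcome: abs(amplitude) ** 2 for outcome, amplitude in state.items()}


def sample_measurements(state, shots, seed=0):
    """Draw `shots` joint measurement outcomes from the state's distribution."""
    rng = random.Random(seed)
    probs = outcome_probabilities(state)
    outcomes = list(probs)
    weights = [probs[o] for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=shots)


if __name__ == "__main__":
    for result in sample_measurements(BELL_PHI_PLUS, shots=5):
        # The two digits always agree: knowing A's result fixes B's.
        print(f"photon A: {result[0]}  photon B: {result[1]}")
```

Each individual outcome is random, yet the two photons always agree, which is the correlation the paragraph above describes.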

In recent years, quantum physicists and engineers have been trying to build devices that operate by leveraging the entanglement between individual particles of light (i.e., photons). The reliable operation of these devices relies on so-called entangled photon sources (EPSs), components that can generate entangled pairs of photons.

Researchers at the University of Science and Technology of China, the Jinan Institute of Quantum Technology, the CAS Institute of Semiconductors and other institutes recently realized a new EPS integrated onto a single photonic chip, which can generate entangled photons via an electrically powered laser. Their study is published in Physical Review Letters.

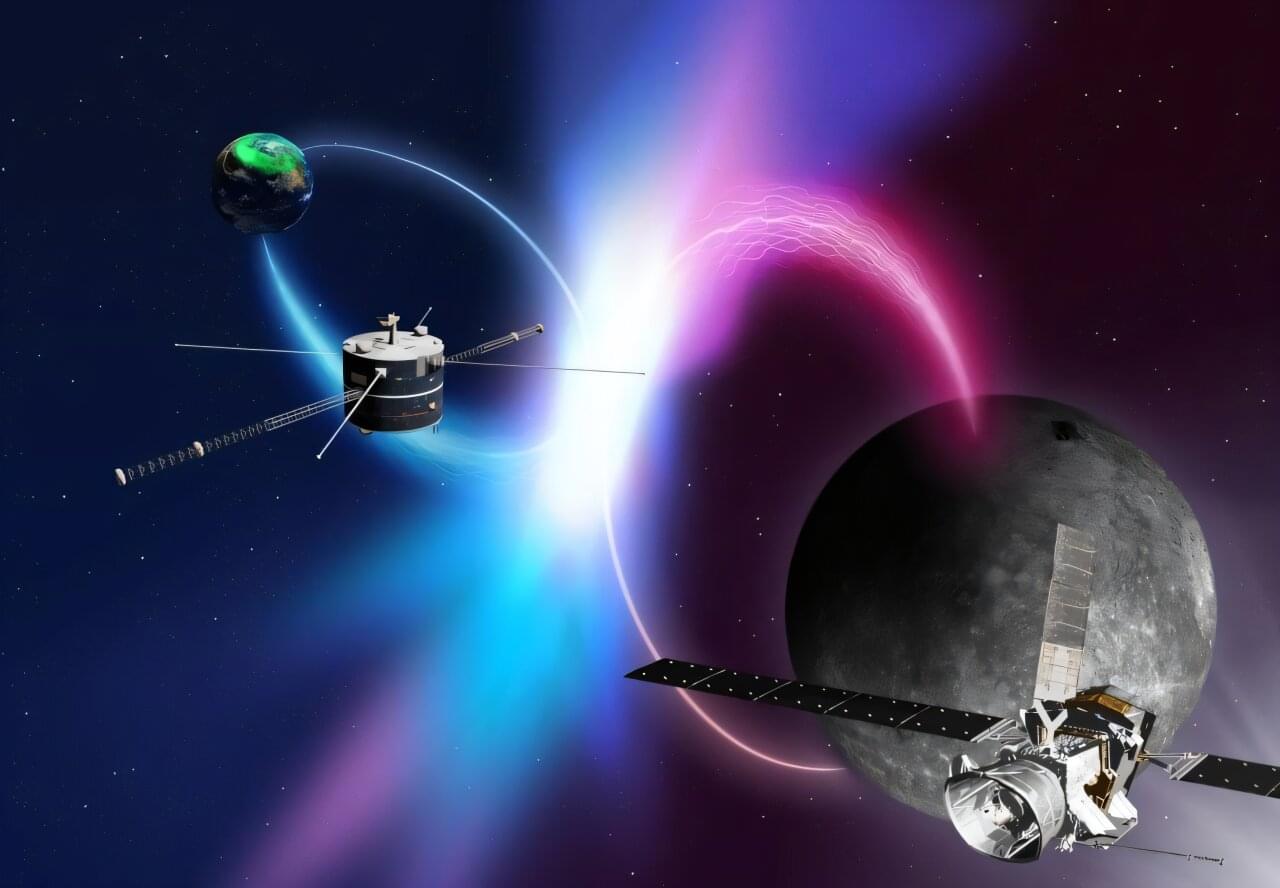

An international team from Kanazawa University (Japan), Tohoku University (Japan), LPP (France), and partners has demonstrated that chorus emissions, natural electromagnetic waves long studied in Earth’s magnetosphere, also occur in Mercury’s magnetosphere, where they exhibit similar chirping frequency changes.

During six Mercury flybys between 2021 and 2025, the Plasma Wave Investigation instrument aboard Mio, BepiColombo’s Mercury orbiter, detected plasma waves in the audible frequency range. Comparison with decades of GEOTAIL data confirmed identical instantaneous frequency changes.

This provides the first reliable evidence of intense electron activity at Mercury, advancing understanding of auroral processes across the solar system.

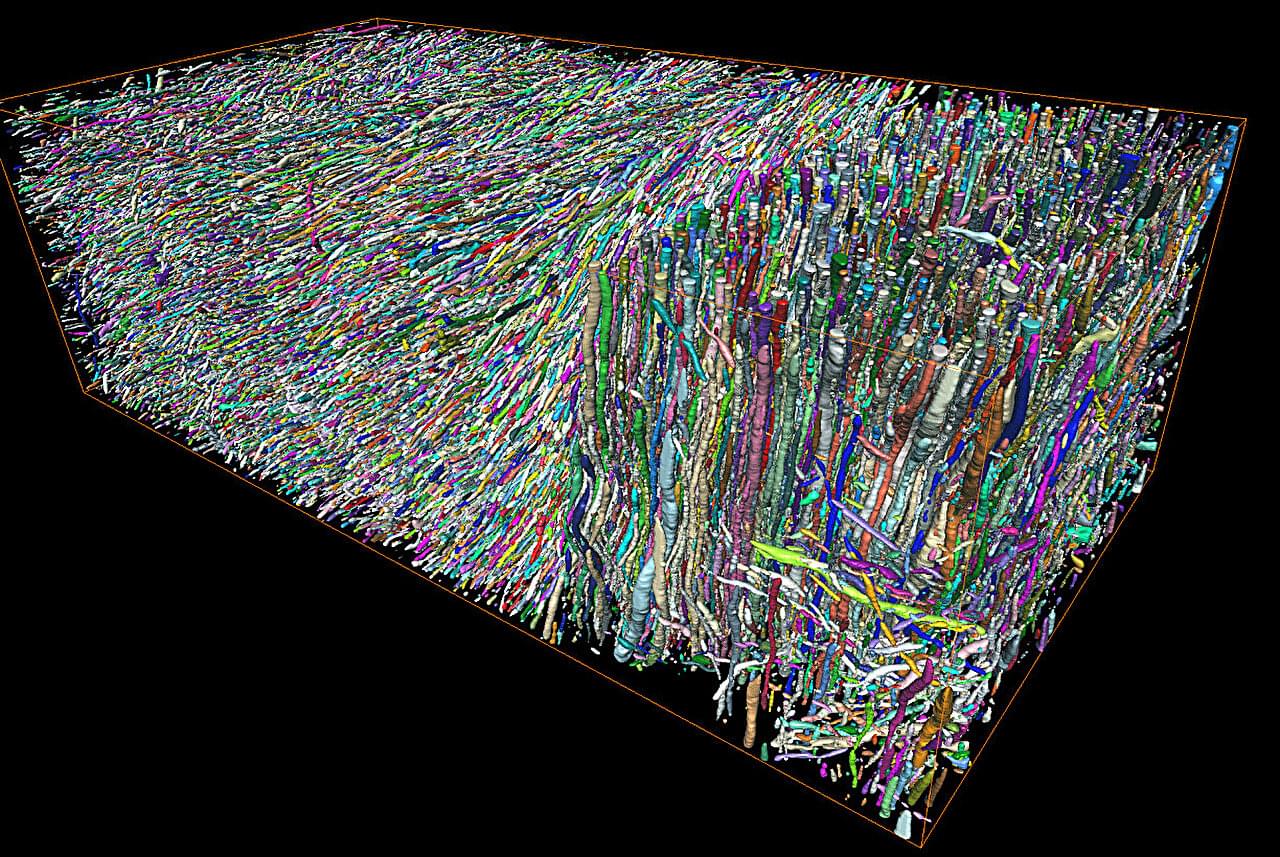

Early diagnosis and noninvasive monitoring of neurological disorders require sensitivity to elusive cellular-level alterations that emerge much earlier than volumetric changes observable with millimeter-resolution medical imaging.

Morphological changes in axons—the tube-like projections of neurons that transmit electrical signals and constitute the bulk of the brain’s white matter—are a common hallmark of a wide range of neurological disorders, as well as normal development and aging.

A study from the University of Eastern Finland (UEF) and the New York University (NYU) Grossman School of Medicine establishes a direct analytical link between axonal microgeometry and noninvasive, millimeter-scale diffusion MRI (dMRI) signals. Diffusion MRI measures the diffusion of water molecules within biological tissues and is sensitive to tissue microstructure.