I hear this author; however, can it pass military basic training/boot camp? I think not.

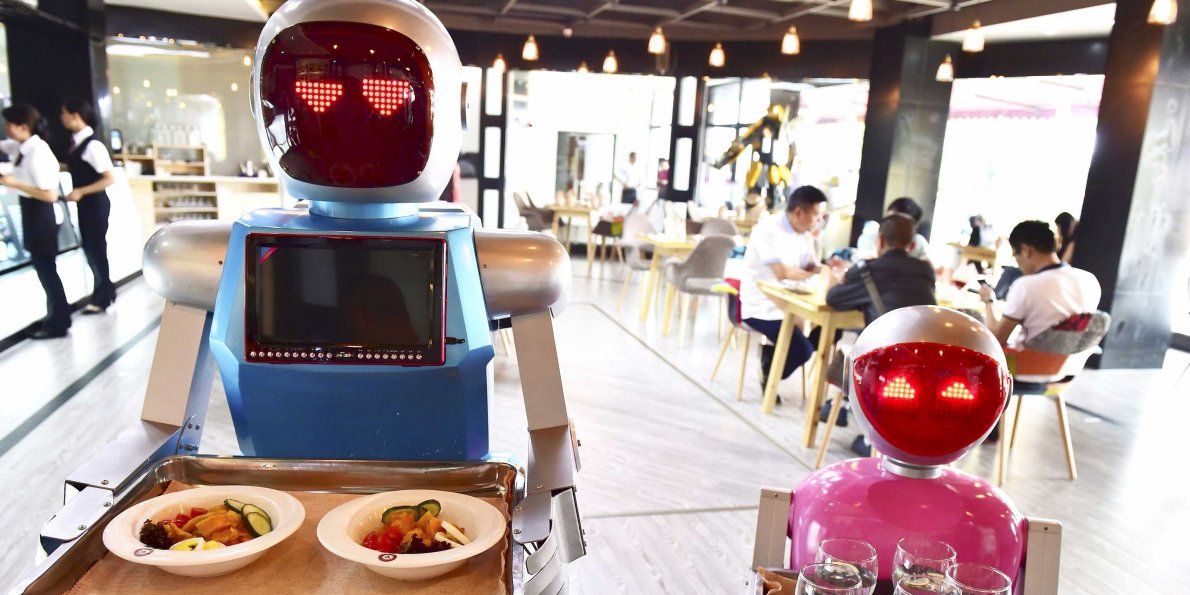

Back when Alphabet was known as Google, the company bought Boston Dynamics, makers of the amazingly advanced robot named Atlas. At the time, Google promised that Boston Dynamics would stop taking military contracts, as it had often done before. But here’s the open secret about Atlas: She can enlist in the US military anytime she wants.

Technology transfer is a two-way street. Traditionally we think of technology being transferred from the public to the private sector, with the internet as just one example. The US government invests in and develops all kinds of important technologies for war and espionage, and many of those technologies eventually make their way to American consumers in one way or another. When the government develops a technology consciously with both military and civilian uses in mind, it’s called dual-use tech.

But just because a company might not actively pursue military contracts doesn’t mean that the US military can’t buy (and perhaps improve upon) technology being developed by private companies. The defense community sees this as more crucial than ever, as government spending on research and development has plummeted. About one-third of R&D was being done by private industry in the US after World War II, and two-thirds was done by the US government. Today it’s the inverse.