DLD (Digital-Life-Design) is a global network on innovation, digitization, science and culture which connects business, creative and social leaders, opinion-formers and influencers for crossover conversation and inspiration.

My first article for TechCrunch. The story is on disability & transhumanism:

Radical technologies may soon overhaul the field of disability and immobility, which affect, in some way, more than a billion people around the world.

MIT bionics designer Hugh Herr, who lost both his legs in a mountain climbing accident, recently said in a TED Talk on disability, “A person can never be broken. Our built environment, our technologies, are broken and disabled. We the people need not accept our limitation, but can transcend disability through technological innovation.”

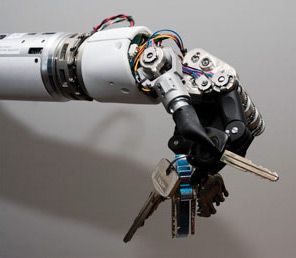

His words are coming true. Around the world, the deaf hear via cochlear implants, paraplegics walk with exoskeletons and the once limbless have functioning limbs. For example, some amputees have mind-controlled robotic arms that can grab a glass of water with amazing precision. In 15 or 20 years, that bionic arm could very well be better than the natural arm, and people may even electively remove their biological arms in favor of robotic ones. After all, who doesn’t want to be able to do a hundred pull-ups in a row or lift the front end of a car to quickly change a flat tire?

An interesting article in The Telegraph on biohacking and the recent Grindfest, where the Immortality Bus stopped:

Immortality aside, DIY “bio-hacking” could provide solutions to everyday problems, despite the risks involved.

A growing number of tech moguls are trying to solve their biggest problem yet: aging.

From reprogramming DNA to printing organs, some of Silicon Valley’s most successful and wealthy leaders are investing in biomedical research and new technologies with hopes of discovering the secret to living longer.

And their investments are beginning to move the needle, said Zoltan Istvan, a futurist and transhumanist presidential candidate.

AOL is running an energetic 2-minute video on transhumanism and longevity on its morning show:

Zoltan Istvan of the Transhumanist Party is running for President on one platform: longevity.

My 4-minute interview on transhumanism and longevity with BuzzFeed came out as a stand-alone video. There are about 800 comments under the YouTube video:

Because we all deserve a chance at immortality!

A new story on transhumanism from Tech Insider, Business Insider’s new tech site:

This presidential candidate wants you to live forever.

A new article from The Daily Dot on transhumanism and my campaign:

The Daily Dot is the hometown newspaper of the World Wide Web, reporting on Reddit, YouTube, Facebook, Twitter, Pinterest, and more.

The stock market is tanking, North and South Korea are on the brink of war, and a cartoon character from a dystopian future is the most popular candidate for US President at the moment. But don’t despair. While most things are garbage, there are some things in the world that aren’t. Like this adorable kid who just got his own high-tech bionic hand.

Nine-year-old Josh Cathcart was often bullied in school for having just one hand. But he’s about to become the coolest kid in school, thanks to his new i-limb, developed by a company called Touch Bionics. The hand can be programmed via an iPad app.

“I made myself a bagel yesterday. I can open bottles and packets with it. I can stack up blocks, I can build Lego with it and I can pull my trousers up,” he told The Guardian. While he’s not the youngest to ever get a bionic hand, he’s the youngest that this company has fitted the device for. He’ll grow out of it in about a year and need a new one, and his parents have started fundraising to pay for it.