Now it's building one that is even bigger and even more sophisticated.

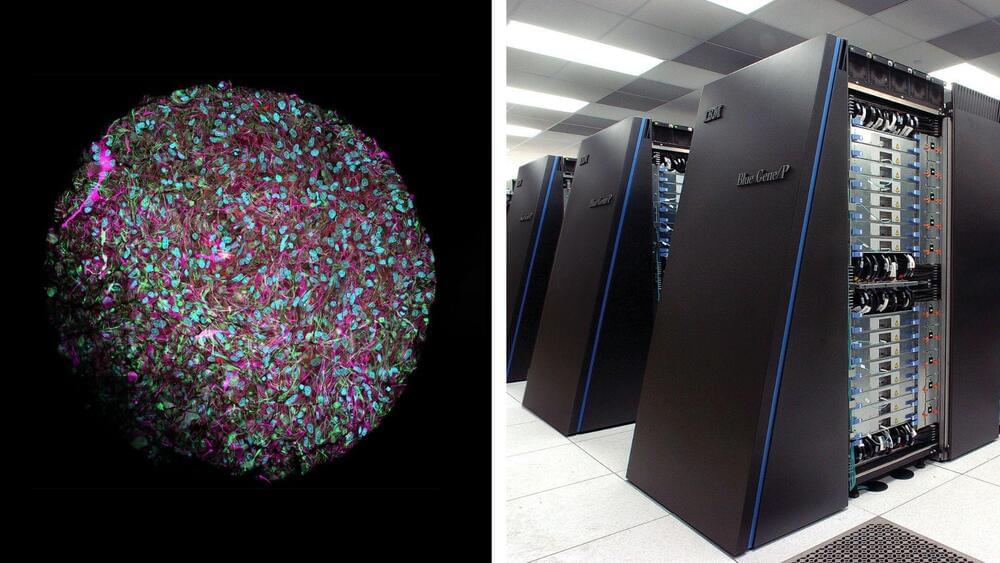

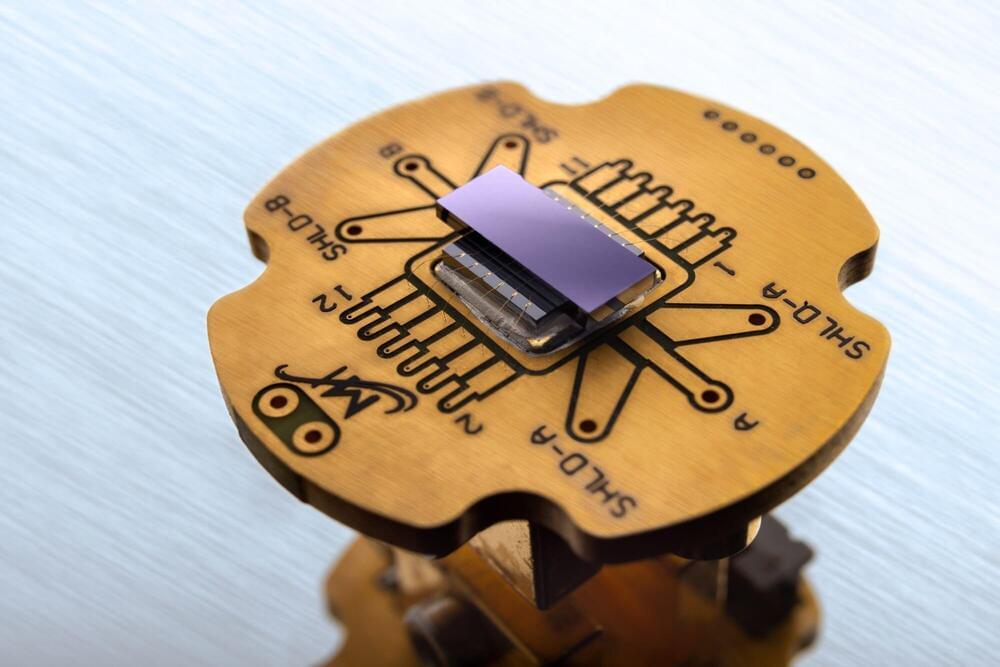

Nearly five years ago, a little-known company approached Microsoft with a special request: to assemble computing horsepower at a scale Microsoft had never attempted before. Microsoft then spent millions of dollars putting together tens of thousands of powerful chips to build a supercomputer. OpenAI used it to train its large language model, GPT, and the rest, as they say, is history.

Microsoft is no stranger to building artificial intelligence (AI) models that help users work more efficiently. The automatic spell checker that has helped millions of users is an example of an AI model trained on language.


How Microsoft put together a supercomputer for OpenAI.