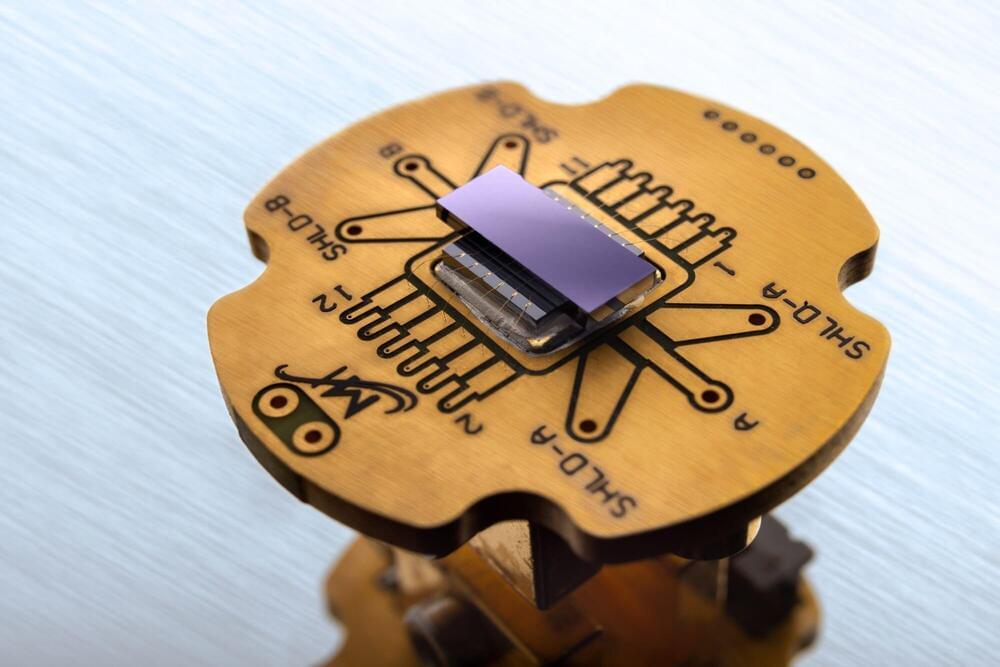

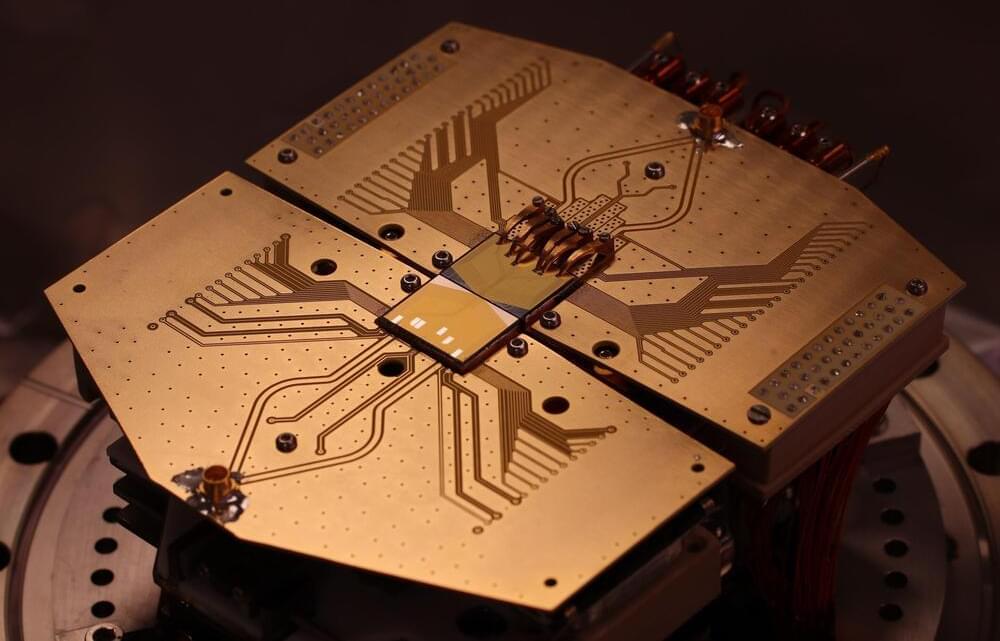

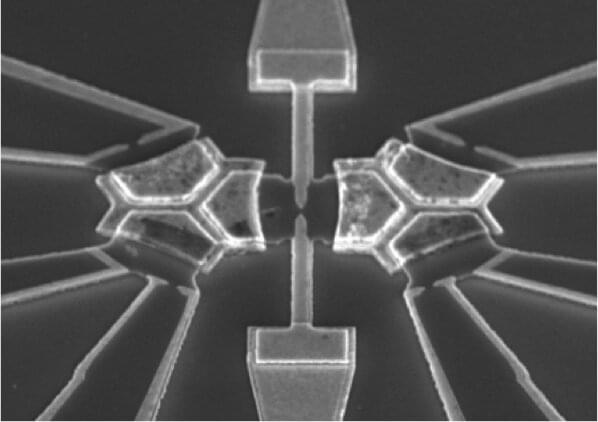

Quantum computers are extremely powerful and highly energy-efficient supercomputers. But for these machines to realize their full potential in new applications such as artificial intelligence and machine learning, researchers are hard at work perfecting the electronics that process their calculations. A team at Fraunhofer IZM is developing superconducting connections just ten micrometers thick, bringing the industry a substantial step closer to commercially viable quantum computers.

With the extreme computing power they promise, quantum computers could become a driving force for technological innovation across all areas of modern industry. Unlike today's conventional computers, they do not work with bits but with qubits: units of information that are no longer restricted to the binary states 1 or 0.

Thanks to quantum superposition, which lets a qubit hold a combination of 0 and 1 at the same time, and entanglement, which links the states of several qubits, qubits promise a great leap forward in speed, power, and the complexity of the calculations they can handle. One simple rule still holds, though: more qubits mean more speed and more computing power.
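To give a rough feel for why the number of qubits matters, here is a minimal toy sketch that simulates a register of n unentangled qubits as a classical state vector. Describing n qubits takes 2^n complex amplitudes, so every added qubit doubles the amount of state a classical machine would have to track. The helper names (`kron`, `register`, `probabilities`) are hypothetical and purely illustrative. The sketch shows only how the state space grows; it does not model Fraunhofer IZM's hardware or explain how real quantum algorithms achieve speedups.

```python
import math

def kron(a, b):
    """Tensor (Kronecker) product of two state vectors stored as lists of complex numbers."""
    return [x * y for x in a for y in b]

# Single-qubit basis state |0> and the equal superposition |+> = (|0> + |1>) / sqrt(2)
ZERO = [1 + 0j, 0j]
PLUS = [1 / math.sqrt(2) + 0j, 1 / math.sqrt(2) + 0j]

def register(n, single=PLUS):
    """Build the state of n identical, unentangled qubits by repeated tensor products."""
    state = [1 + 0j]  # the empty register: a single amplitude of 1
    for _ in range(n):
        state = kron(state, single)
    return state

def probabilities(state):
    """Measurement probability of each basis state: the squared magnitude of its amplitude."""
    return [abs(amp) ** 2 for amp in state]

if __name__ == "__main__":
    for n in (1, 2, 3, 10, 20):
        s = register(n)
        total = sum(probabilities(s))
        print(f"{n:2d} qubits -> {len(s):8d} amplitudes, total probability {total:.6f}")
```

Every state in `register(n)` has length 2^n, so going from 10 to 20 qubits takes the classical description from 1,024 to over a million amplitudes. A fully general (entangled) state of n qubits has the same 2^n size but cannot be factored into per-qubit pieces the way this product state can.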