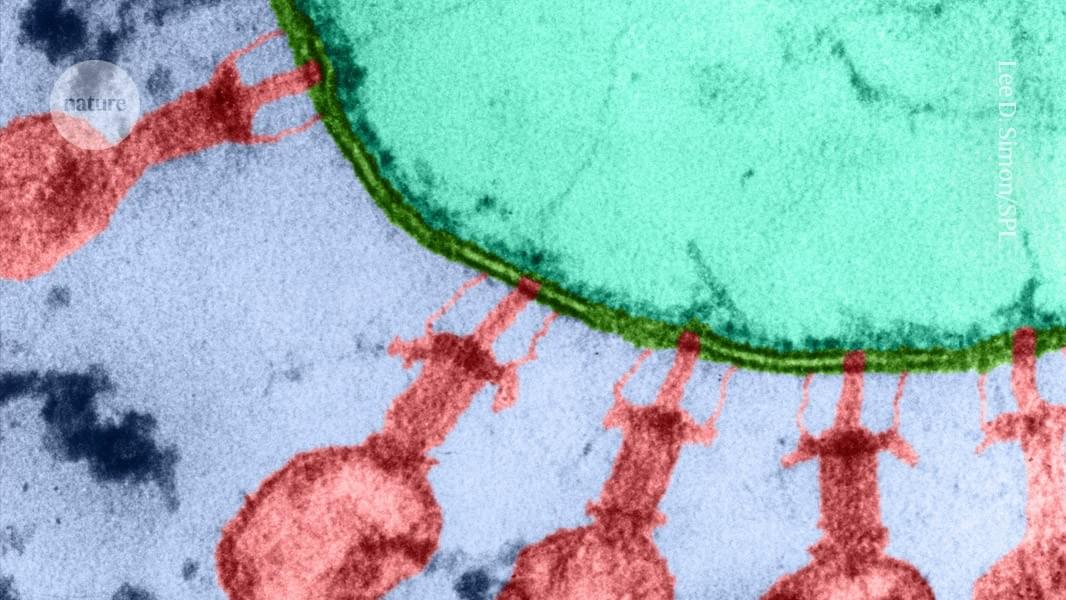

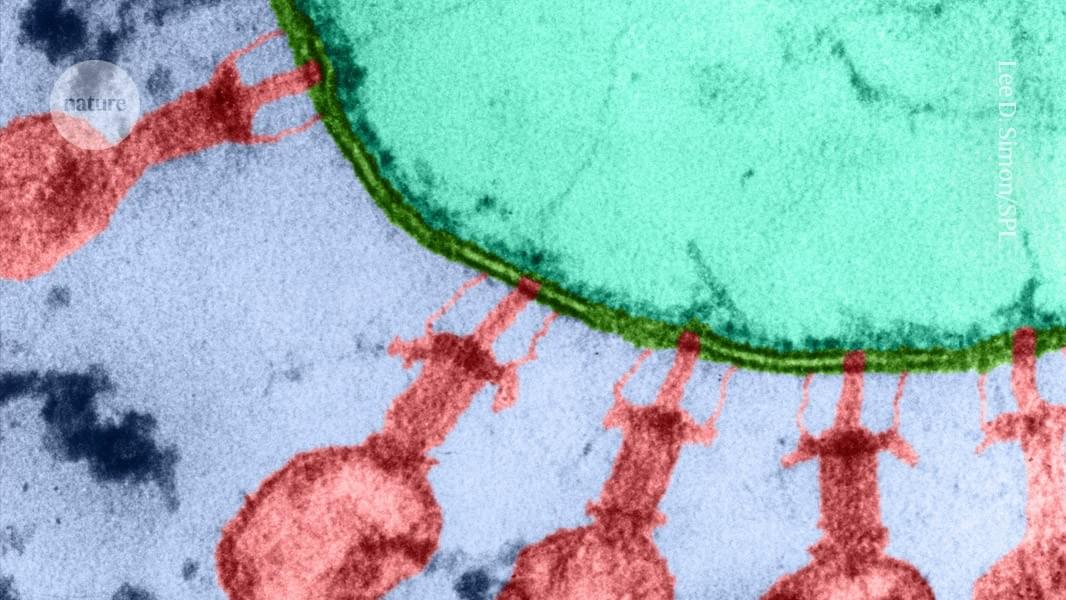

Scientists have used artificial intelligence to write coherent viral genomes and then used those genomes to synthesize bacteriophages capable of killing resistant strains of bacteria.

As blood stem cells replicate, they can accumulate mutations in their nuclei and lose the epigenetic marks that once kept their DNA well organised, ultimately increasing mechanical tension on the nuclear envelope. This study found that RhoA is a mechanosensor activated by such tension and plays a key role in stem cell ageing. The researchers then demonstrated the rejuvenating potential of targeting it: after ex vivo treatment of blood stem cells with the drug Rhosin, a RhoA inhibitor, they observed improvements in age-related markers.

As a study co-author summarizes: “Overall, our experiments show that Rhosin did rejuvenate blood stem cells, increased the regenerative capacity of the immune system and improved the production of blood cells once transplanted in the bone marrow.”

Ageing is defined as the deterioration of function over time, and it is one of the main risk factors for numerous chronic diseases. Although ageing is a complex phenomenon affecting the whole organism, studies have shown that ageing of the haematopoietic system alone can affect the whole organism.

A research team previously showed the significance of using blood stem cells to pharmacologically target ageing of the whole body, suggesting rejuvenation strategies that could extend healthspan and lifespan. Now, in a paper published in Nature Aging, they propose rejuvenating aged blood stem cells by treating them with Rhosin, a small molecule that inhibits RhoA, a protein that is highly activated in aged haematopoietic stem cells. The study combined in vivo and in vitro assays with innovative machine learning techniques.

Blood stem cells, or haematopoietic stem cells, are located in the bone marrow, a highly dynamic and specialised tissue within the cavity of long bones. They are responsible for the vital function of continuously producing all types of blood cells: red blood cells (oxygen transporters), megakaryocytes (platelet precursors) and white blood cells (immune cells such as lymphocytes and macrophages). Over time, however, these stem cells also age: they lose regenerative capacity and generate fewer, lower-quality immune cells. This decline has been linked to immunosenescence, chronic low-grade inflammation and certain chronic diseases.

An AI tool that analyzes abnormalities in the shape and form of blood cells with greater accuracy and reliability than human experts could change the way conditions such as leukemia are diagnosed.

Researchers have created a system called CytoDiffusion that uses generative AI – the same type of technology behind image generators such as DALL-E – to study the shape and structure of blood cells.

Many AI models are trained simply to recognize patterns. The researchers, led by the University of Cambridge, University College London and Queen Mary University of London, showed that CytoDiffusion goes further: it could accurately identify a wide range of normal blood cell appearances and spot unusual or rare cells that may indicate disease. Their results are reported in the journal Nature Machine Intelligence.
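CytoDiffusion itself is a large generative model of blood-cell images, far beyond a short example. The toy sketch below only illustrates the general idea of using a generative model as a classifier: fit one model of "what normal looks like" per cell type, assign a new cell to the type whose model explains it best, and flag it as unusual when no model explains it well. Here each class is a simple Gaussian over two made-up features; the cell types, feature values and likelihood threshold are all hypothetical.

```python
import math

# Hypothetical toy data: each "cell" is summarised by two made-up features,
# e.g. (cell area, nucleus-to-cytoplasm ratio). Not real CytoDiffusion inputs.
TRAINING = {
    "neutrophil": [(10.0, 0.40), (10.5, 0.42), (9.8, 0.38), (10.2, 0.41)],
    "lymphocyte": [(7.0, 0.85), (7.3, 0.88), (6.8, 0.82), (7.1, 0.86)],
}


def fit_gaussian(samples):
    """Fit an axis-aligned Gaussian: per-feature mean and variance."""
    dims = len(samples[0])
    means = [sum(s[d] for s in samples) / len(samples) for d in range(dims)]
    variances = [
        # Floor the variance so a degenerate feature cannot divide by zero.
        max(sum((s[d] - means[d]) ** 2 for s in samples) / len(samples), 1e-6)
        for d in range(dims)
    ]
    return means, variances


def log_likelihood(x, model):
    """Log density of feature vector x under an axis-aligned Gaussian."""
    means, variances = model
    return sum(
        -0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
        for xi, m, v in zip(x, means, variances)
    )


# One generative model per (hypothetical) cell type.
MODELS = {label: fit_gaussian(s) for label, s in TRAINING.items()}


def classify(x, threshold=-20.0):
    """Return (best class, is_unusual). The best class is the one whose model
    assigns x the highest likelihood; x is flagged as unusual when even that
    likelihood falls below the (arbitrary) threshold."""
    scores = {label: log_likelihood(x, m) for label, m in MODELS.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] < threshold
```

For example, `classify((10.1, 0.40))` returns `('neutrophil', False)`, while `classify((15.0, 0.60))` is still closest to the neutrophil model but is flagged as unusual because neither model explains it well. A purely discriminative classifier would typically just pick one label without that second signal.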

The ubiquity of generative AI is a hard pill to swallow, but even harder is figuring out what is and isn’t AI-made. It’s easier than ever to reach for the low-hanging critique that something looks like an AI spat it out, especially now that some games openly claim to have been spat out entirely by AI. Positive Concept Games, the developer of the SNES-esque RPG Shrine’s Legacy, found that out the hard way, as it shared in a post on X on Wednesday, December 10, 2025.

The developer shared a Steam review of the game that calls it “AI slop,” claims the “story is dogshit mixed with catshit,” and insists that the game was “made in CHAT GPT.” The developer’s caption reads: “Please don’t do this. We poured years of our lives into this game and only worked with real human artists on everything: From the writing to the coding, all work was done by human hands. We do not endorse generative AI and will never use it.”

AI’s rise into the mainstream began with OpenAI’s launch of GPT-3 in 2020, which became a benchmark for large language models and quickly spread through startups via its API. While Big Tech now races toward AGI and superintelligence, experts warn that current systems remain limited, that governance is unprepared, and that safety oversight is crucial as AI capabilities advance faster than our ability to control them.

An exploration of whether, when a civilization develops AI, that AI might convince or compel its creators not to attempt interstellar travel, for reasons and motives of its own.


As part of the ESA Academy Experiments Programme, Team V-STARS carried out the first experiment with human participants in the Orbital Robotics Lab, investigating how microgravity affects the perception of verticality.

The V-STARS team, a collaboration between Birkbeck, University of London, and the University of Kent (UK), was selected to join the ESA Academy Experiments Programme in February 2025. After obtaining ethical approval in the United Kingdom and authorisation from the ESA Medical Board, the team was permitted to carry out its experiment in the Orbital Robotics Lab (ORL), located at ESTEC, the ESA site in the Netherlands.

The campaign involved test subjects seated on the ORL’s floating platform, wearing VR headsets while performing gravity-related perceptual tasks. The project investigates the use of Vestibular Stochastic Resonance (a phenomenon in which controlled noise enhances the sensitivity of a sensory system) to improve perception and potentially accelerate adaptation to microgravity. Over two weeks, the team tested more than 20 participants, and its members have now returned to their universities to analyse the results.
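Stochastic resonance is easiest to see in a simple threshold detector. The sketch below is a generic textbook illustration, not the V-STARS protocol: a sine wave too weak to cross a detection threshold on its own is mixed with Gaussian noise, and we measure how well the detector's 0/1 output tracks the hidden signal. The function name and all parameter values (threshold, amplitude, period, noise levels) are assumptions chosen for illustration.

```python
import math
import random


def detection_correlation(noise_sd, threshold=1.0, amplitude=0.5,
                          period=50, n=20_000, seed=0):
    """Correlation between a subthreshold sine wave and the 0/1 output of a
    hard-threshold detector that sees the sine plus Gaussian noise."""
    rng = random.Random(seed)  # seeded so results are reproducible
    # amplitude < threshold: the signal alone never triggers the detector.
    signal = [amplitude * math.sin(2 * math.pi * t / period) for t in range(n)]
    output = [1.0 if s + rng.gauss(0.0, noise_sd) > threshold else 0.0
              for s in signal]

    mean_s = sum(signal) / n
    mean_o = sum(output) / n
    cov = sum((s - mean_s) * (o - mean_o) for s, o in zip(signal, output)) / n
    var_s = sum((s - mean_s) ** 2 for s in signal) / n
    var_o = sum((o - mean_o) ** 2 for o in output) / n
    if var_o == 0.0:
        return 0.0  # detector never (or always) fired: output carries no information
    return cov / math.sqrt(var_s * var_o)


for sd in (0.0, 0.5, 10.0):
    print(f"noise sd {sd:>4}: correlation {detection_correlation(sd):.3f}")
```

The output should show the characteristic pattern: zero correlation with no noise (the detector never fires), a clearly positive correlation at a moderate noise level, and a much weaker one when the noise swamps the signal. That "just enough noise" optimum is the effect the experiment aims to exploit in the vestibular system.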

This AI chip doesn’t use electricity to compute — it uses light.


Engineers at the University of Pennsylvania have built a photonic neural network capable of classifying nearly 2 billion images per second, operating at speeds millions of times faster than today’s electronic computer vision systems.
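For a sense of scale, the throughput figure translates into the time available per image, assuming images are classified one after another:

$$
t_{\text{image}} \approx \frac{1}{2 \times 10^{9}\ \text{images/s}} = 5 \times 10^{-10}\ \text{s} = 0.5\ \text{ns}
$$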

In this video, we explore how photonic neural networks work, why traditional image recognition is so computationally expensive, and how light-based hardware could overcome fundamental limits of GPUs and silicon. We go over how convolution layers, weighted sums, and activation functions are implemented directly on a photonic chip — without memory, clock cycles, or digital logic.
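To make the three operations named above concrete, the sketch below computes them in ordinary Python on a 3×3 "image": a convolution layer, a ReLU activation and a layer of weighted sums that produce class scores. It models only the arithmetic such a chip would perform with light, not the optics; the function names, kernel, weights and the vertical-versus-horizontal-bar task are hypothetical choices for illustration.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly cross-correlation, as in CNN layers):
    slide the kernel over the image and take a weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(x):
    """Nonlinear activation: pass positive values, clamp negatives to zero."""
    return max(0.0, x)


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum (plus bias) per output."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(image, kernel, weights, biases):
    """Convolution -> activation -> weighted sums -> index of the top score."""
    feature_map = conv2d(image, kernel)
    flat = [relu(v) for row in feature_map for v in row]
    scores = dense(flat, weights, biases)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical task: class 0 = vertical bar, class 1 = horizontal bar.
VERTICAL_BAR = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
HORIZONTAL_BAR = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
KERNEL = [[1, -1], [1, -1]]               # responds to vertical edges
WEIGHTS = [[1, 1, 1, 1], [0, 0, 0, 0]]    # class 0 sums edge evidence
BIASES = [0, 1]                           # class 1 wins when there is none
```

Here `forward(VERTICAL_BAR, KERNEL, WEIGHTS, BIASES)` returns `0` and `forward(HORIZONTAL_BAR, KERNEL, WEIGHTS, BIASES)` returns `1`. Every step is a multiply-and-add or a simple nonlinearity, which is why these operations are natural candidates for implementation in optical hardware.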
