Google might have invented the transformer models that led to the glut of generative AI we see today, but it wasn’t the first to cash in. The search giant threw its AI work into overdrive in the wake of ChatGPT’s appearance in Microsoft products, adding the Gemini AI to every product it can. A new report claims Gemini is about to come to the Google app on Android, and this may signal the beginning of the end for Assistant.

Gemini is the current brand for all of Google’s commercial AI models—whether it sticks to that is hard to say, but the Bard branding is in the rearview mirror. Gemini has been available on the web, and Google released a mobile app earlier this year. Installing that app on Android prompts you to replace Assistant despite Gemini’s comparative lack of features. Google is just that committed to getting everyone using its AI. Even if you don’t install that app, Google aims to get Gemini in front of your eyes by cramming it into the Google app.

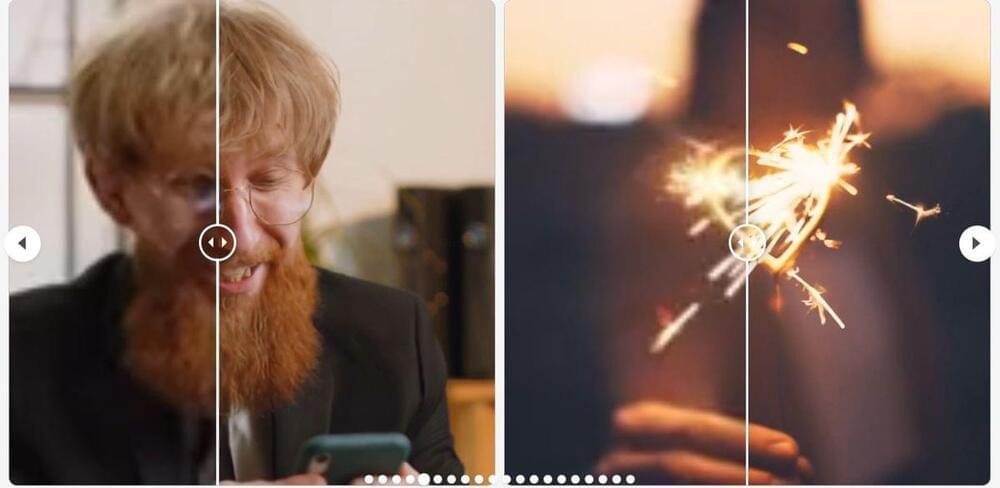

While there’s no official announcement yet, a video of the latest app update is already circulating (see below). The new version has a toggle at the top to switch between search and Gemini. If you’ve seen the iOS Google app recently, it’s essentially the same. There is another wrinkle for Android users, though. That toggle will also switch your phone to use Gemini in place of Assistant everywhere, reports 9to5Google. The Google app will now encourage people to switch from Assistant to Gemini, and unlike the new Gemini app, it’s already installed on virtually every Android phone.