AI is upending the way robots learn, leaving companies and researchers with a need for more data. Getting it means wrestling with a host of ethical and legal questions.

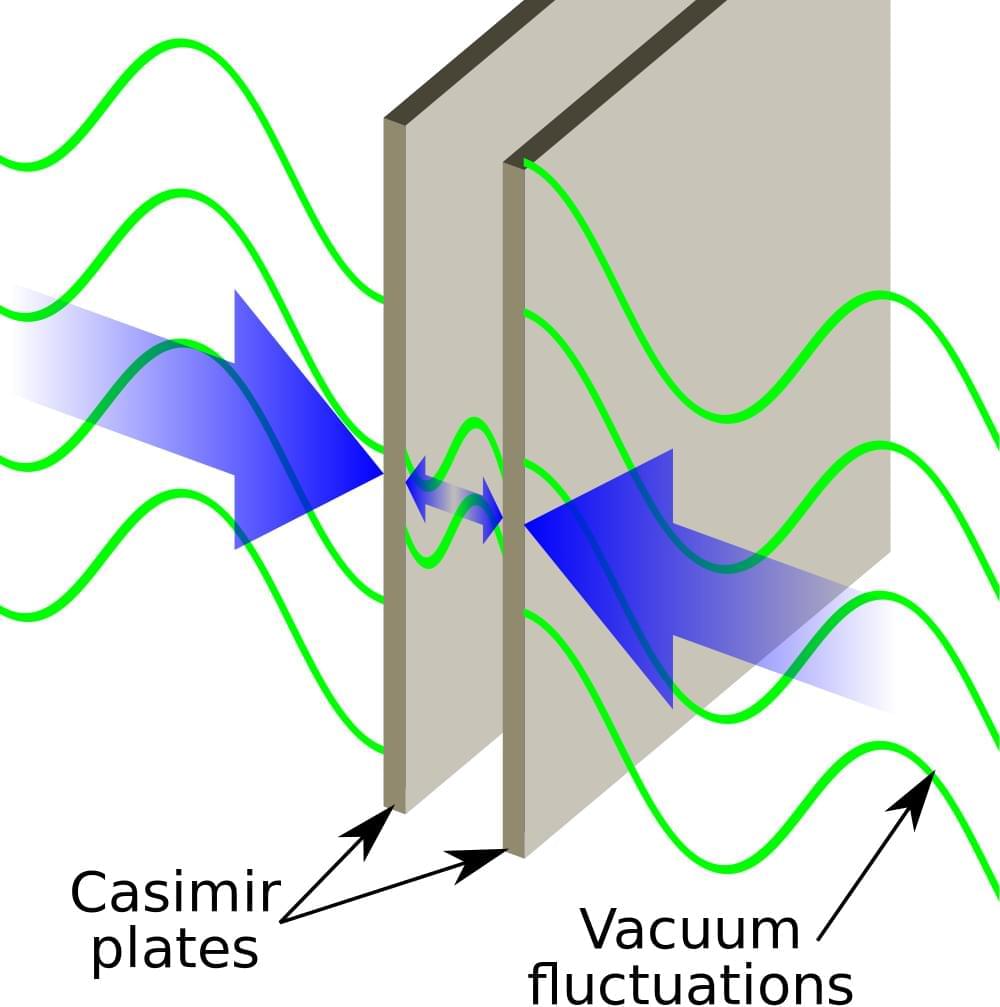

Recently I saw a post on Twitter claiming that AI could be powered by quantum vacuum energy. The post was accompanied by a figure from a paper published in Nature. Unfortunately for the poster, but fortunately for science, the paper had nothing to do with extracting energy from the vacuum. Rather, it described an experimental realization of a new kind of transistor that uses the Casimir effect to mediate and amplify energy transfer.

Researchers have found that specifically trained LLMs can solve complex tasks such as “3SUM” just as well using dots like “…” instead of full sentences. This could make it harder to control what’s happening in these models.

The researchers trained Llama language models to solve a difficult math problem called “3SUM”, where the model has to find three numbers that add up to zero.
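To make the task concrete, here is a minimal sketch of the classic 3SUM check as described above: given a list of integers, find three that add up to zero. This is an ordinary sort-and-two-pointer solution written for illustration; it is not the researchers' training setup, and the function name `find_three_sum` is an assumption of this sketch.

```python
from typing import Optional, Sequence, Tuple


def find_three_sum(nums: Sequence[int], target: int = 0) -> Optional[Tuple[int, int, int]]:
    """Return three values from nums that add up to target, or None.

    Illustrative sketch only: sort the values, then for each smallest
    element scan the remainder with two pointers (O(n^2) overall).
    """
    values = sorted(nums)
    n = len(values)
    for i in range(n - 2):
        lo, hi = i + 1, n - 1
        while lo < hi:
            total = values[i] + values[lo] + values[hi]
            if total == target:
                return (values[i], values[lo], values[hi])
            if total < target:
                lo += 1  # sum too small: move to a larger middle value
            else:
                hi -= 1  # sum too large: move to a smaller top value
    return None


if __name__ == "__main__":
    print(find_three_sum([-5, 1, 4, 9, -2]))  # a triple summing to zero
    print(find_three_sum([1, 2, 3]))          # no such triple
```

A conventional program needs this kind of explicit search; the point of the research is that the model has to carry out comparable intermediate work internally when its visible output is only dots.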

Usually, AI models solve such tasks by explaining the steps in full sentences, known as “chain of thought” prompting. But the researchers replaced these natural language explanations with repeated dots, called filler tokens.


Boston Dynamics is retiring the original Atlas at the age of 11. TechCrunch’s Brian Heater discusses the pioneering bot’s legacy and what the company has in mind for its offspring.

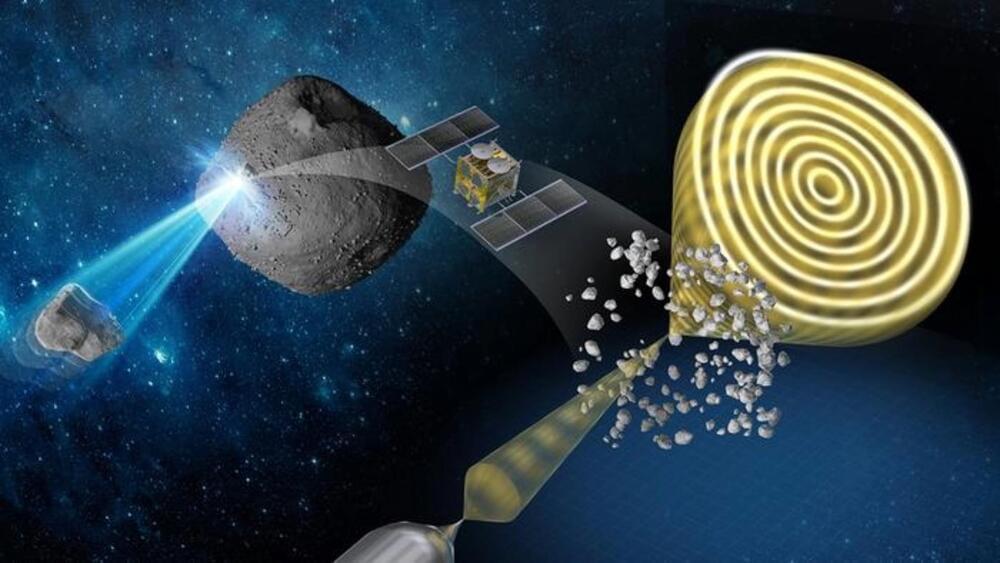

Direct sample analysis offers several advantages over having robotic explorers analyze material on the surface of an asteroid or planet and then beam back the data.

It provides a window into understanding how the surface of a celestial body has changed due to its constant exposure to the harsh deep space environment.

The scientists conducted their analysis using electron holography, a technique in which electron waves pass through a material. This method has the potential to uncover key details about the sample’s structure and its magnetic and electric properties.

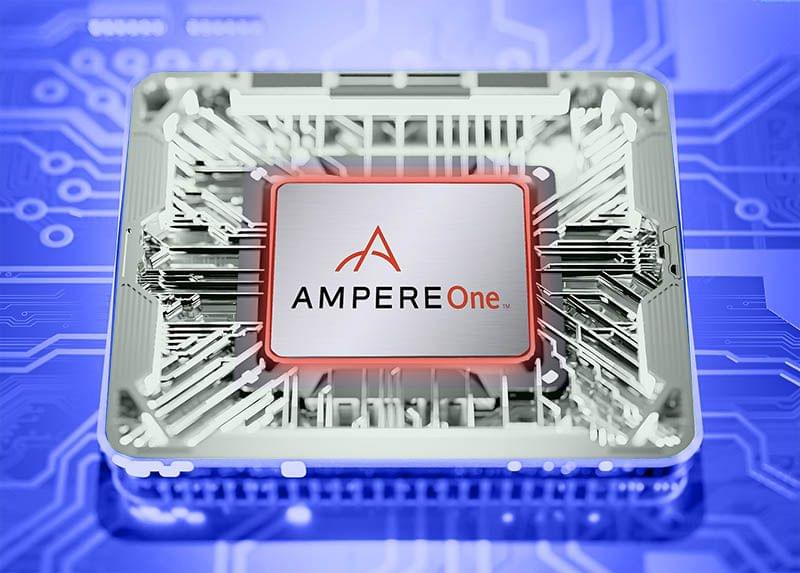

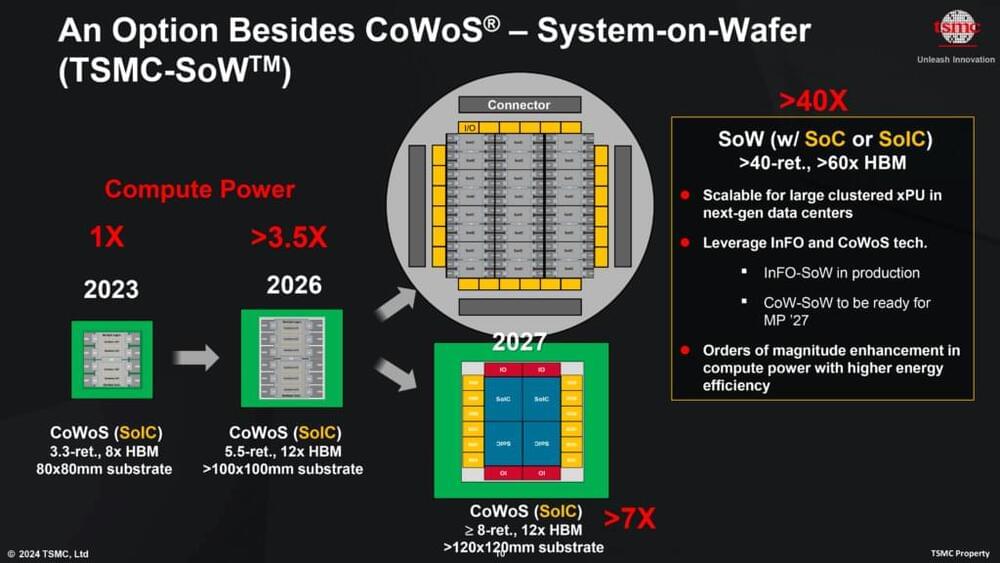

TSMC introduced its System-on-Wafer (TSMC-SoW™) technology, a wafer-level solution aimed at the future AI requirements of hyperscaler datacenters.

At the TSMC 2024 North America Technology Symposium, the company debuted its TSMC A16™ technology, which pairs nanosheet transistors with a backside power rail, is slated for production in 2026, and promises greatly improved logic density and performance.

The latest version of CoWoS allows TSMC to build silicon interposers that are about 3.3 times larger than the size of a photomask (or reticle, which is 858 mm²). Thus, logic, eight HBM3/HBM3E memory stacks, I/O, and other chiplets can occupy up to 2,831 mm². The maximum substrate size is 80×80 mm.
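The figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only the numbers in the paragraph (a reticle of 858 mm², a multiplier of about 3.3, and an 80×80 mm substrate); the variable names are illustrative, and the interposer-to-substrate ratio is a derived figure, not one stated by TSMC.

```python
# Figures taken from the text above.
RETICLE_AREA_MM2 = 858      # area of one photomask (reticle)
RETICLE_MULTIPLE = 3.3      # interposer size relative to one reticle
SUBSTRATE_SIDE_MM = 80      # maximum substrate is 80 x 80 mm

# Interposer area available for logic, HBM stacks, I/O and other chiplets.
interposer_area_mm2 = RETICLE_MULTIPLE * RETICLE_AREA_MM2

# Area of the largest supported substrate.
substrate_area_mm2 = SUBSTRATE_SIDE_MM * SUBSTRATE_SIDE_MM

# Derived here for illustration: share of the substrate the interposer covers.
interposer_share = interposer_area_mm2 / substrate_area_mm2

if __name__ == "__main__":
    print(f"Interposer area: about {interposer_area_mm2:.0f} mm^2")
    print(f"Substrate area:  {substrate_area_mm2} mm^2")
    print(f"Interposer share of substrate: {interposer_share:.0%}")
```

The product 3.3 × 858 comes to roughly 2,831 mm², which matches the quoted figure, and that interposer would cover a bit under half of the 6,400 mm² maximum substrate.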