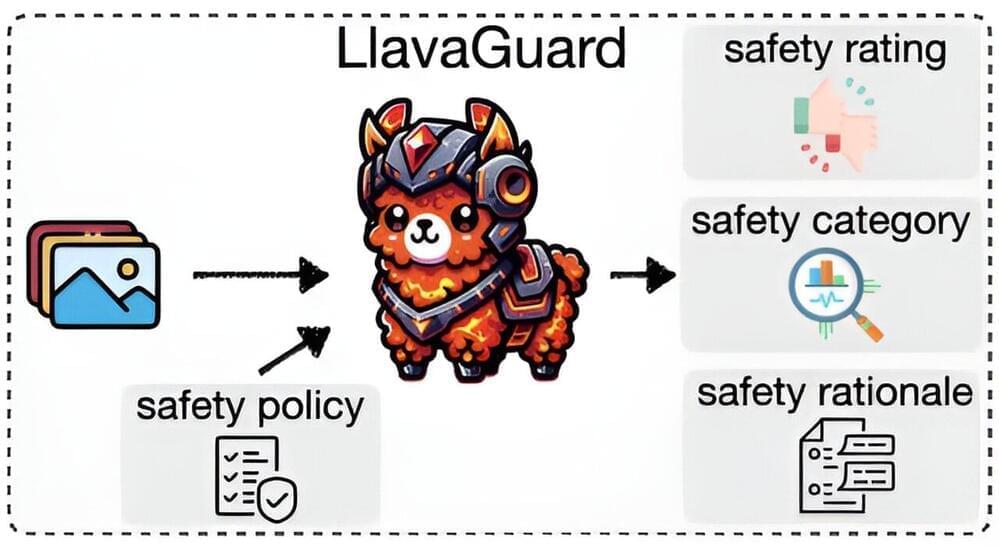

1/ OpenAI and Los Alamos National Laboratory (LANL) are partnering to explore how multimodal AI models can be safely used by laboratory scientists to advance life science research.

2/ As part of an evaluation study, novice and advanced laboratory scientists will solve standard experimental tasks…

OpenAI and Los Alamos National Laboratory (LANL) are collaborating to study the safe use of AI models by scientists in laboratory settings.

The partnership aims to explore how advanced multimodal AI models like GPT-4, with their image and speech capabilities, can be safely used in labs to advance “bioscientific research.”