OpenAI unveils GPT-4o mini, a smaller and cheaper AI model.

On Thursday, OpenAI introduced GPT-4o mini, its latest small AI model.

OpenAI bills it as the most cost-efficient small model on the market.

How do you teach ChatGPT common sense? Train it on questions that adults would never think to ask. In this week’s “The Joy of Why,” computer scientist Yejin Choi talks with co-host Steven Strogatz about how training AI can mimic the “why-this, why-that” curiosity of a toddler.

The Joy of Why, episode “Will AI Ever Have Common Sense?” (July 18, 2024).

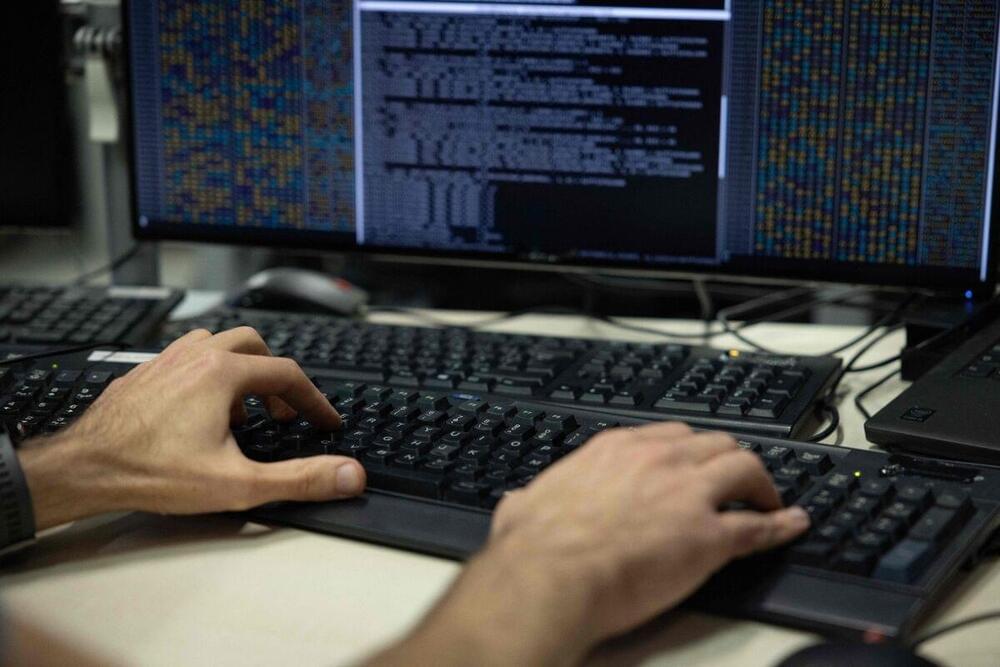

A 64-year-old named Mark has spent the last year learning how to control devices like his laptop and phone using a brain implant. And thanks to OpenAI, it’s gotten a whole lot easier to do.

The neurotech startup Synchron said Thursday it’s using OpenAI’s latest artificial intelligence models to build a new generative chat feature for patients with its brain-computer interface, or BCI.

A BCI system decodes brain signals and translates them into commands for external technologies. Synchron’s model is designed to help people with paralysis communicate and maintain some independence by controlling smartphones, computers and other devices with their thoughts.

A survey of people in the US has revealed the widespread belief that artificial intelligence models are already self-aware, which is very far from the truth.

It takes an incredible amount of energy to both train and operate artificial intelligence software, as we explored last week in The Bleeding Edge – AI’s Thirst for Power.

OpenAI’s GPT-4 generative AI model, which powers its ChatGPT chatbot, drew about 10 megawatts (MW) of power during training. That’s roughly the average power demand of 10,000 homes.

It’s also about 833,000 times the power consumed by the human brain.
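A megawatt measures power (a rate), not energy, so the 10 MW figure is best read as an average draw during training; total energy would also depend on how long training ran, which is not stated here. The two comparisons can be checked with simple division. This sketch assumes the human brain draws about 12 W, which is the value that reproduces the 833,000x ratio; that figure is an assumption, not one given in the article.

```python
# Reported average power draw during GPT-4 training (figure from the article).
TRAINING_POWER_W = 10e6  # 10 MW

# Assumption: ~12 W for the human brain, the value that reproduces the
# article's ~833,000x ratio. This number is not stated in the article.
BRAIN_POWER_W = 12.0

# Number of homes used in the article's comparison.
HOMES = 10_000

# Implied average draw per home if 10 MW is shared across 10,000 homes.
per_home_w = TRAINING_POWER_W / HOMES

# How many "brains" the training draw corresponds to.
brain_ratio = TRAINING_POWER_W / BRAIN_POWER_W

print(f"Implied average draw per home: {per_home_w:,.0f} W")
print(f"Training power / brain power: {brain_ratio:,.0f}x")
```

The home comparison therefore implies an average draw of about 1 kW per household.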

Published in Plant Phenomics.

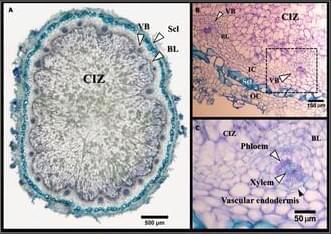

The efficiency of N₂ fixation in legume–rhizobia symbiosis is a function of root nodule activity. Nodules consist of 2 functionally important tissues: (a) a central infected zone (CIZ), colonized by rhizobia bacteria, which serves as the site of N₂ fixation, and (b) vascular bundles (VBs), which serve as conduits for transporting water, nutrients, and fixed nitrogen compounds between the nodules and the plant. A quantitative evaluation of these tissues is essential to unravel their functional importance in N₂ fixation. Using synchrotron-based x-ray microcomputed tomography (SR-μCT) at submicron resolution, we non-invasively obtained high-quality tomograms of fresh soybean root nodules. A semi-automated segmentation algorithm was used to generate 3-dimensional (3D) models of the CIZ and VBs within the nodule, and their volumes were quantified from the reconstructed 3D structures. Furthermore, synchrotron x-ray fluorescence imaging revealed a distinctive localization of Fe within the CIZ and Zn within the VBs, allowing these tissues to be visualized in 2 dimensions. This study represents a pioneering application of SR-μCT for volumetric quantification of CIZ and VB tissues in fresh, intact soybean root nodules. The proposed methods enable the anatomical features of root nodules to be exploited as novel breeding traits, with the aim of enhancing N₂ fixation through improved root nodule activity.
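Once a tomogram has been segmented, volumetric quantification reduces to counting the voxels assigned to each tissue and multiplying by the volume of one voxel. The sketch below illustrates only that final step, assuming the segmentation yields an integer label array with isotropic voxels. The label values, the function name `tissue_volumes_mm3`, the toy array, and the 1 µm voxel size are hypothetical, and this is not the authors' pipeline.

```python
import numpy as np

# Hypothetical label values for a segmented tomogram (not from the paper).
BACKGROUND, CIZ, VB = 0, 1, 2


def tissue_volumes_mm3(labels: np.ndarray, voxel_size_um: float) -> dict:
    """Return the volume of each labeled tissue in cubic millimetres.

    Assumes isotropic voxels with edge length `voxel_size_um` micrometres.
    """
    voxel_volume_mm3 = (voxel_size_um * 1e-3) ** 3  # convert µm to mm, then cube
    return {
        "CIZ": int(np.count_nonzero(labels == CIZ)) * voxel_volume_mm3,
        "VB": int(np.count_nonzero(labels == VB)) * voxel_volume_mm3,
    }


# Toy 100 x 100 x 100 label volume standing in for a real segmentation.
labels = np.zeros((100, 100, 100), dtype=np.uint8)
labels[20:80, 20:80, 20:80] = CIZ  # 60**3 = 216,000 CIZ voxels
labels[0:10, 0:10, :] = VB         # 10 * 10 * 100 = 10,000 VB voxels (no overlap)

volumes = tissue_volumes_mm3(labels, voxel_size_um=1.0)  # illustrative 1 µm voxels
print(volumes)
```

For real submicron data, the same voxel count times voxel volume applies. Only the array size and voxel edge length change.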

Call it the Force’s doing, but the GEDI mission has been full of surprises.

In early 2023, the lidar mission, which maps Earth’s forests in 3D, was slated to burn up in the atmosphere to make way for an unrelated mission on the International Space Station. A last-minute decision by NASA spared it and put it on hiatus until October 2024. Earlier this year came another surprise: the mission that had replaced GEDI finished its work, allowing GEDI to resume operations six months earlier than expected.

That’s how, in April, a robotic arm came to move the GEDI instrument (short for Global Ecosystem Dynamics Investigation and pronounced “Jedi” as in the Star Wars films) from storage on the ISS back to its original location, where it continues to gather crucial data on Earth’s aboveground biomass.

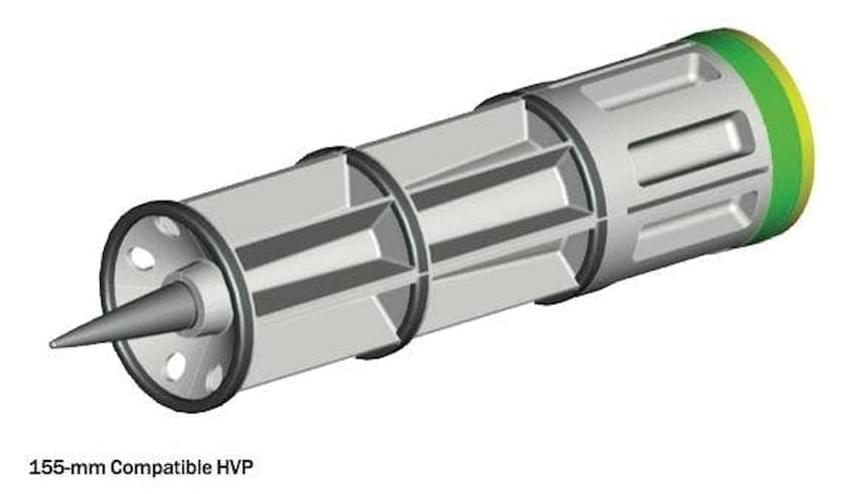

Officials of the Army Rapid Capabilities and Critical Technologies Office (RCCTO) at Fort Belvoir, Va., issued a request for information on Monday for the Hypervelocity Projectile (HVP) project.

The Army wants a company to build and deliver MDAC prototypes using existing fielded and mature technologies. MDAC will be air-, rail-, and sea-transportable per MIL-STD-1366 and able to move rapidly for survivability. It will have automated high rates of fire with HVP, remote weapon firing, deep magazine capacity, rapid ammunition resupply, and high operational availability. Interested companies will also demonstrate supportability, safety, and cybersecurity.

Army officials want a company able to deliver HVP prototypes no later than fall 2027 for operational demonstrations in 2028, with possible deployment to follow. Hypervelocity projectiles fly at eight to nine times the speed of sound.
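To put “eight to nine times the speed of sound” in everyday units, a Mach number can be multiplied by the local speed of sound. This sketch assumes about 343 m/s, the speed of sound in dry air at 20 °C. The real value drops with temperature and varies with altitude, so these are sea-level ballpark figures, not specifications from the Army request. The helper `mach_to_speeds` is a hypothetical name used only for illustration.

```python
# Assumption: speed of sound in dry air at 20 °C (~343 m/s).
# Colder air (e.g. at altitude) has a lower speed of sound, so the same
# Mach number corresponds to a lower absolute speed there.
SPEED_OF_SOUND_M_S = 343.0


def mach_to_speeds(mach: float) -> tuple[float, float]:
    """Convert a Mach number to (m/s, km/h) at the assumed speed of sound."""
    m_s = mach * SPEED_OF_SOUND_M_S
    return m_s, m_s * 3.6  # 1 m/s = 3.6 km/h


for mach in (8, 9):
    m_s, km_h = mach_to_speeds(mach)
    print(f"Mach {mach}: {m_s:,.0f} m/s ({km_h:,.0f} km/h)")
```

Under this assumption, Mach 8 to 9 works out to roughly 2.7 to 3.1 km per second.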