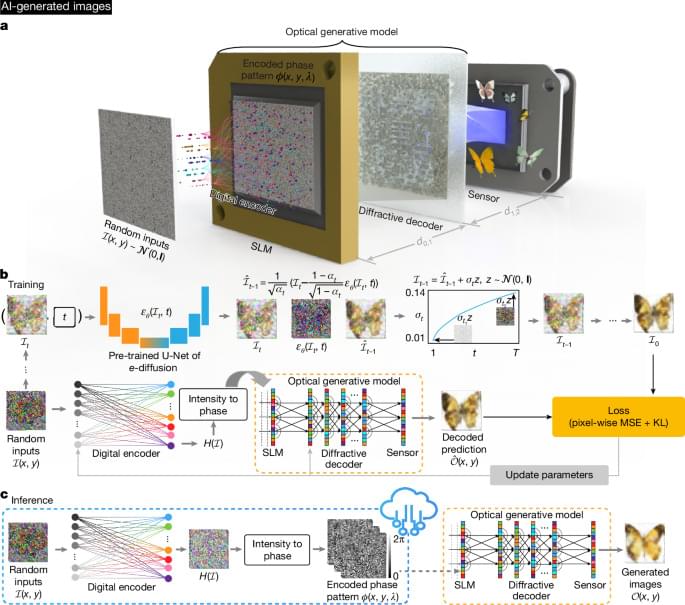

Optical generative models are demonstrated for the rapid and power-efficient creation of never-seen-before images of handwritten digits, fashion products, butterflies, human faces and Van Gogh-style artworks.

Gemini Robotics 1.5 can use digital tools to solve tasks. For example, if a robot was asked, Based on my location, can you sort these objects into the corre…

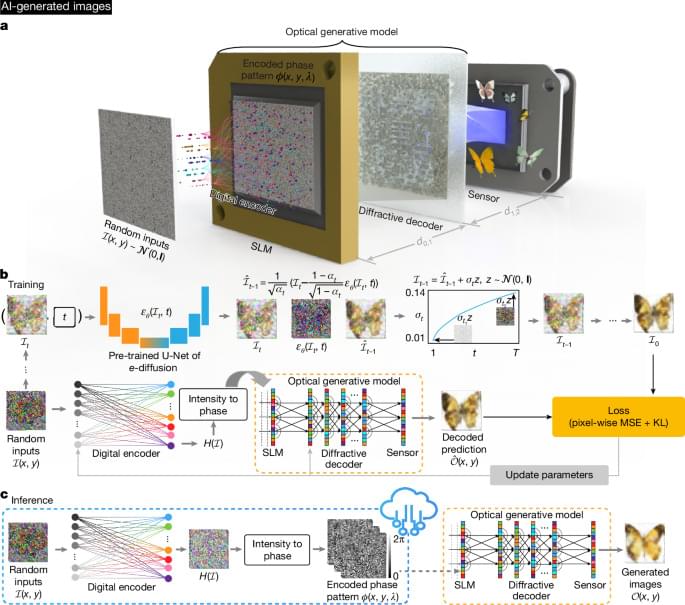

In the world around us, many things exist in the context of time: a bird’s path through the sky is understood as different positions over a period of time, and conversations as a series of words occurring one after another.

Computer scientists and statisticians call these sequences time series. Although statisticians have found ways to understand these patterns and make predictions about the future, modern deep learning AI models often perform no better, and sometimes worse, than statistical models.

Engineers at the University of California, Santa Cruz, developed a new method for time series forecasting, powered by deep learning, that can improve its predictions based on data from the near future. When they applied this approach to the critical task of seizure prediction using brain wave data, they found that their strategy offers up to 44.8% improved performance for predicting seizures compared to baseline methods. While they focused on this critical health care application, the researchers’ method is designed to be relevant for a wide range of fields. A new study in Nature Communications reports their results.

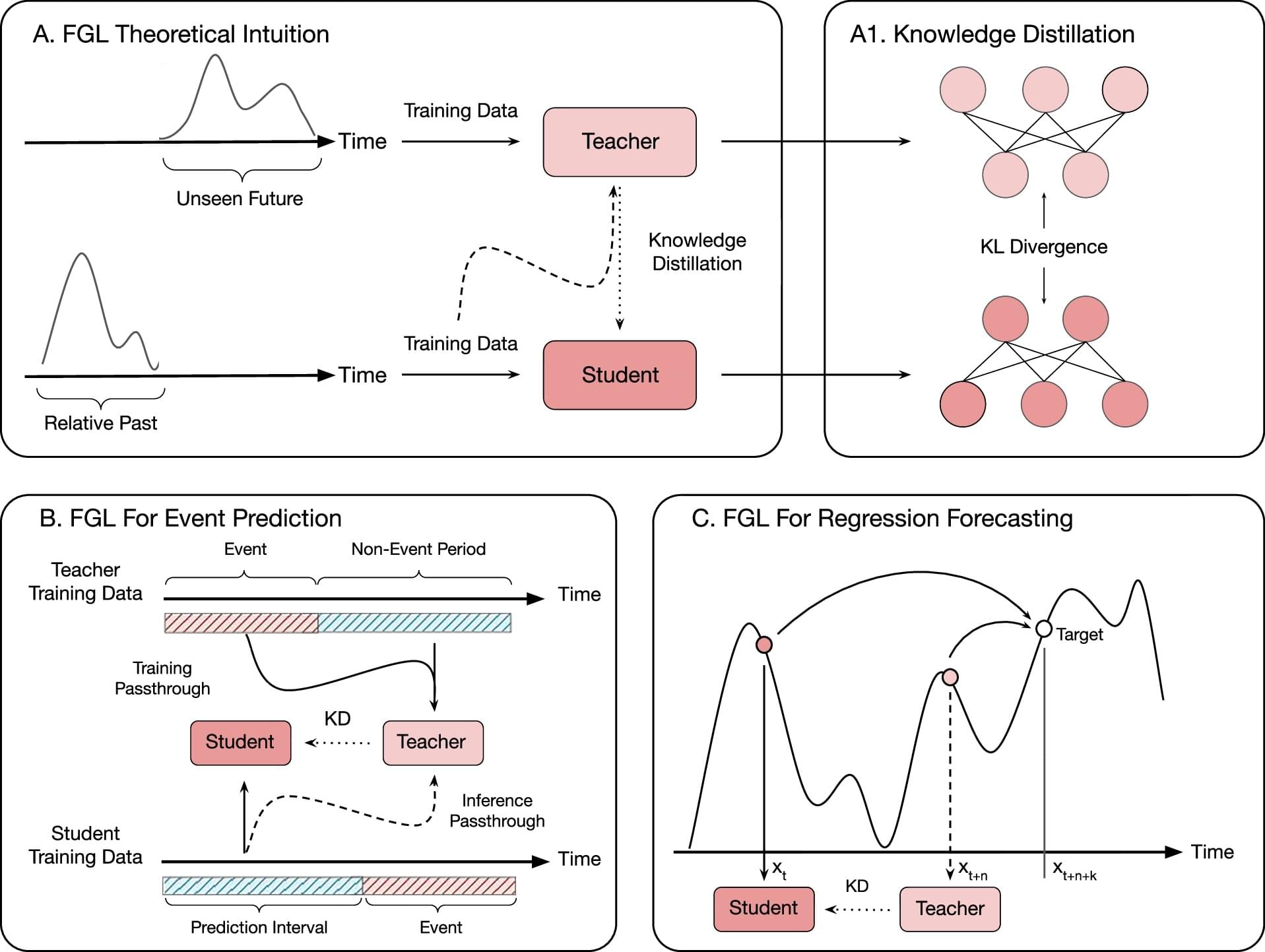

Quantum computing is still in its early stages of development, but researchers have extensively explored its potential uses. A recent study conducted at São Paulo State University (UNESP) in Brazil proposed a hybrid quantum-classical model to support breast cancer diagnosis from medical images.

The work was published as part of the 2025 IEEE 38th International Symposium on Computer-Based Medical Systems (CBMS), organized by the Institute of Electrical and Electronics Engineers (IEEE). In the publication, the authors describe a hybrid neural network that combines quantum and classical layers using an approach known as a quanvolutional neural network (QNN). They applied the model to mammography and ultrasound images to classify lesions as benign or malignant.

“What we wanted to bring to this work was a very basic architecture that used quantum computing but contained a minimum of quantum and classical devices,” says Yasmin Rodrigues, the first author of the study. The work is part of her scientific initiation project, supervised by João Paulo Papa, full professor in the Department of Computing at the Bauru campus of UNESP. Papa also co-authored the article.

Though it might seem like science fiction, scientists are working to build nanoscale molecular machines that can be designed for myriad applications, such as “smart” medicines and materials. But like all machines, these tiny devices need a source of power, the way electronic appliances use electricity or living cells use ATP (adenosine triphosphate, the universal biological energy source).

Researchers in the laboratory of Lulu Qian, Caltech professor of bioengineering, are developing nanoscale machines made out of synthetic DNA, taking advantage of DNA’s unique chemical bonding properties to build circuits that can process signals much like miniature computers. Operating at billionth-of-a-meter scales, these molecular machines can be designed to form DNA robots that sort cargos or to function like a neural network that can learn to recognize handwritten numerical digits.

One major challenge, however, has remained: how to design and power them for multiple uses.

Imagine watching a favorite movie when suddenly the sound stops. The data representing the audio is missing. All that’s left are images. What if artificial intelligence (AI) could analyze each frame of the video and provide the audio automatically based on the pictures, reading lips and noting each time a foot hits the ground?

That’s the general concept behind a new AI that fills in missing data about plasma, the fuel of fusion, according to Azarakhsh Jalalvand of Princeton University. Jalalvand is the lead author on a paper about the AI, known as Diag2Diag, that was recently published in Nature Communications.

“We have found a way to take the data from a bunch of sensors in a system and generate a synthetic version of the data for a different kind of sensor in that system,” he said. The synthetic data aligns with real-world data and is more detailed than what an actual sensor could provide. This could increase the robustness of control while reducing the complexity and cost of future fusion systems. “Diag2Diag could also have applications in other systems such as spacecraft and robotic surgery by enhancing detail and recovering data from failing or degraded sensors, ensuring reliability in critical environments.”

We also discuss some of the lesser known options for augmentation and explore the notion of man-machine integration.

Cover Art by Jakub Grygier: https://www.artstation.com/artist/jak…

Music by: Dexter Britain “Seeing the Future”; Lombus “Hydrogen Sonata”; Sergey Cheremisinov “Labyrinth”; Kai Engel “Endless Story about Sun and Moon”; Frank Dorittke “Morninglight”; Koalips “Kvazar”; Kevin MacLeod “Spacial Winds”; Lombus “Amino”; Brandow Liew “Into the Storm”

Researchers have found that giving AI “peers” in virtual reality (VR) a body that can interact with the virtual environment can help students learn programming. Specifically, the researchers found students were more willing to accept these “embodied” AI peers as partners, compared to voice-only AI, helping the students better engage with the learning experience.

“Using AI agents in a VR setting for teaching students programming is a relatively recent development, and this proof-of-concept study was meant to see what kinds of AI agents can help students learn better and work more effectively,” says Qiao Jin, corresponding author of a paper on the work and an assistant professor of computer science at North Carolina State University.

“Peer learning is widespread in the programming field, as it helps students engage in the learning process. For this work, we focused on ‘pAIr’ learning, where the programming peer is actually an AI agent. And the results suggest that embodying AI in the VR environment makes a real difference for pAIr learning.”