Amazon is betting big on Anthropic even as it plans to compete with it.

According to insiders, Microsoft and OpenAI are planning to build a $100 billion supercomputer called “Stargate” to massively accelerate the development of OpenAI’s AI models, The Information reports.

Microsoft and OpenAI executives are forging plans for a data center with a supercomputer made up of millions of specialized server processors to accelerate OpenAI’s AI development, according to three people who took part in confidential talks.

The project, code-named “Stargate,” could cost as much as $100 billion, according to one person who has spoken with OpenAI CEO Sam Altman about it and another who has seen some of Microsoft’s initial cost estimates.

In the future we will rely ever more on artificial intelligence to run our civilization, but what role will AI and computers play in governing?


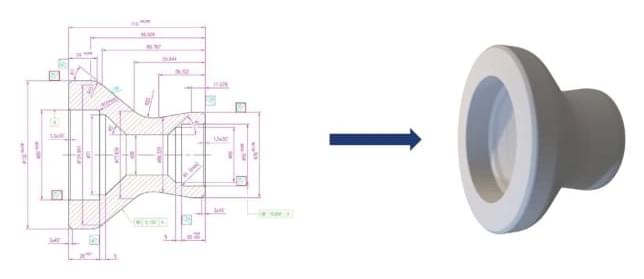

French software start-up Spare Parts 3D (SP3D) has launched the beta program of Théia, its new digital tool that can automatically create 3D models from 2D technical drawings.

As global geopolitical and economic factors pose challenges to supply chains, more companies are looking to digitize their inventories, allowing spare parts to be 3D printed locally and on demand. This digitization process, however, can be time-consuming and costly.

Integrated into the company’s AI-driven DigiPart software, SP3D’s new offering uses deep learning to convert existing 2D drawings of spare parts into 3D-printable models, reducing conversion times from days to minutes.

Here’s a new Forbes review of the book “Transhuman Citizen” by world-leading futurist Tracey Follows:

What do transhumanism, Ayn Rand, and the U.S. presidential election have in common? They are the connecting themes of a new book by Ben Murnane entitled “Transhuman Citizen.”

The book tells the story of Zoltan Istvan, a one-time U.S. presidential candidate who drove a coffin-shaped bus around the U.S. attempting to persuade the public that death is not inevitable and that transhumanism is as much a political solution as a scientific one to the troubles of the 21st century.

The book deals with what led up to that presidential campaign, the campaign itself, and what has happened since.

It starts with an explanation of how the author came to settle on his subject, Zoltan Istvan Gyurko, and the radical changes Istvan wants to see in society. It links the author’s interest to his own personal circumstances. Murnane has a rare genetic disease, Fanconi anaemia, and became the first person in Ireland to receive a novel form of bone marrow transplant. Having benefited from advanced medical technologies, he went on to write a book about living with the illness. Murnane also has an interest in Ayn Rand, having completed a PhD on Rand and posthumanism.

In this episode, recorded during the 2024 Abundance360 Summit, Peter and Elon discuss super-intelligence, the future of AI, Neuralink, and more.

Elon Musk is a businessman, founder, investor, and CEO. He co-founded PayPal, Neuralink and OpenAI; founded SpaceX, and is the CEO of Tesla and the Chairman of X.

Learn more about Abundance360: https://www.abundance360.com/summit.

———-

This episode is supported by exceptional companies:

Use my code PETER25 for 25% off your first month’s supply of Seed’s DS-01® Daily Synbiotic: https://seed.com/moonshots.

Get started with Fountain Life and become the CEO of your health: https://fountainlife.com/peter/