Inspired by microscopic worms, Liquid AI’s founders developed a more adaptive, less energy-hungry kind of neural network. Now the MIT spin-off is revealing several new ultra-efficient models.

ABOUT THE LECTURE

Animals and humans understand the physical world, have common sense, possess a persistent memory, can reason, and can plan complex sequences of subgoals and actions. These essential characteristics of intelligent behavior are still beyond the capabilities of today’s most powerful AI architectures, such as Auto-Regressive LLMs.

I will present a cognitive architecture that may constitute a path towards human-level AI. The centerpiece of the architecture is a predictive world model that allows the system to predict the consequences of its actions and to plan sequences of actions that fulfill a set of objectives. The objectives may include guardrails that guarantee the system’s controllability and safety. The world model employs a Joint Embedding Predictive Architecture (JEPA) trained with self-supervised learning, largely by observation.

The JEPA simultaneously learns an encoder that extracts maximally informative representations of the percepts, and a predictor that predicts the representation of the next percept from the representation of the current percept and an optional action variable.
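As a rough illustration of the encoder and predictor pairing described above, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the class name `TinyJEPA`, the linear maps, the dimensions, and the squared-error loss are not taken from the lecture. It omits training and any mechanism for keeping the representations informative. The one point it is meant to show is that the prediction error is measured between representations, not between raw percepts.

```python
import random


def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def random_matrix(rows, cols, rng):
    """Small random weights; a stand-in for learned parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class TinyJEPA:
    """Toy JEPA: linear encoder plus linear predictor (hypothetical, untrained)."""

    def __init__(self, obs_dim, latent_dim, action_dim, seed=0):
        rng = random.Random(seed)
        self.enc = random_matrix(latent_dim, obs_dim, rng)
        # The predictor sees the current representation and the action.
        self.pred = random_matrix(latent_dim, latent_dim + action_dim, rng)

    def encode(self, percept):
        """Map a percept to its representation."""
        return matvec(self.enc, percept)

    def predict(self, representation, action):
        """Predict the next representation from the current one and an action."""
        return matvec(self.pred, representation + action)

    def loss(self, percept, action, next_percept):
        """Squared error between predicted and actual next representation."""
        predicted = self.predict(self.encode(percept), action)
        target = self.encode(next_percept)
        return sum((p - t) ** 2 for p, t in zip(predicted, target))


model = TinyJEPA(obs_dim=4, latent_dim=2, action_dim=1)
error = model.loss([1.0, 0.0, 0.0, 0.0], [1.0], [0.0, 1.0, 0.0, 0.0])
```

In a real system both components would be trained jointly to reduce this error while some additional constraint prevents the encoder from mapping every percept to the same representation.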

We show that JEPAs trained on images and videos produce good representations for image and video understanding. We show that they can detect unphysical events in videos. Finally, we show that planning can be performed by searching for action sequences that produce a predicted end state that matches a given target state.
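Planning by search over action sequences can be sketched as follows. This is a toy under stated assumptions, not the method used in the lecture: the state is a single number, `predict_next` is a hypothetical stand-in for a learned predictor, the action set and horizon are arbitrary, and the search is exhaustive, which only works for very small problems.

```python
from itertools import product


def predict_next(state, action):
    """Hypothetical world model: the action shifts a 1-D state."""
    return state + action


def rollout(state, actions):
    """Apply the world model step by step and return the predicted end state."""
    for action in actions:
        state = predict_next(state, action)
    return state


def plan(start, target, actions=(-1, 0, 1), horizon=3):
    """Return the action sequence whose predicted end state is closest to target."""
    return min(
        product(actions, repeat=horizon),
        key=lambda seq: abs(rollout(start, seq) - target),
    )


best = plan(start=0, target=3)
print(best)  # (1, 1, 1)
```

With a learned predictor operating on representations, the same loop would compare the predicted end representation with the representation of the target, and the exhaustive search would typically be replaced by a more scalable optimizer.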

ABOUT THE SPEAKER

Yann LeCun, VP & Chief AI Scientist, Meta; Professor, NYU; ACM Turing Award Laureate

Yann LeCun is VP & Chief AI Scientist at Meta and a Professor at NYU. He was the founding Director of Meta-FAIR and of the NYU Center for Data Science. After a PhD from Sorbonne Université and research positions at AT&T and NEC, he joined NYU in 2003 and Meta in 2013. He received the 2018 ACM Turing Award for his work on AI. He is a member of the US National Academies and the French Académie des Sciences.

Inspired by the external skeleton of a spider, the robot leg is more flexible than conventional robots.

A small robot that resembles a spider’s leg has been developed by engineers at the University of Tartu. Inspired by nature, the fingernail-long leg is more flexible than conventional robots.

Its dexterous movements are expected to help rescue people from rubble and other danger zones in the future.

The robot leg, modeled after the leg of a cucumber spider, was created by researchers from the Institute of Technology of the University of Tartu and the Italian Institute of Technology. In the near future, it’s expected to move where humans cannot.

The AI system is dubbed a “quantum-tunneling deep neural network” and combines neural networks with quantum tunneling. A deep neural network is a collection of machine learning algorithms inspired by the structure and function of the brain, with multiple layers of nodes between the input and output. It can model complex non-linear relationships. Unlike conventional neural networks, which include a single layer between input and output, deep neural networks include many hidden layers.

Quantum tunneling, meanwhile, occurs when a subatomic particle, such as an electron or photon (particle of light), effectively passes through a seemingly impenetrable barrier. Because a subatomic particle can also behave as a wave (when it is not directly observed, it is not in any fixed location), it has a small but finite probability of being on the other side of the barrier. When enough subatomic particles are present, some will “tunnel” through the barrier.
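To make the “small but finite probability” concrete, the standard textbook estimate for a massive particle such as an electron meeting a rectangular barrier can serve as a reference point. The symbols are assumptions introduced here, not taken from the article: $m$ is the particle mass, $E$ its energy, $V_0 > E$ the barrier height, $L$ the barrier width, and $\hbar$ the reduced Planck constant. The approximation holds when the barrier is wide or high enough that $\kappa L \gg 1$.

$$
T \approx e^{-2\kappa L}, \qquad \kappa = \frac{\sqrt{2m\,(V_0 - E)}}{\hbar}
$$

The transmission probability $T$ falls off exponentially with the barrier width, which is why tunneling is rare for any single particle yet observable when many particles are present.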

After the data representing the optical illusion passes through the quantum tunneling stage, the slightly altered image is processed by a deep neural network.

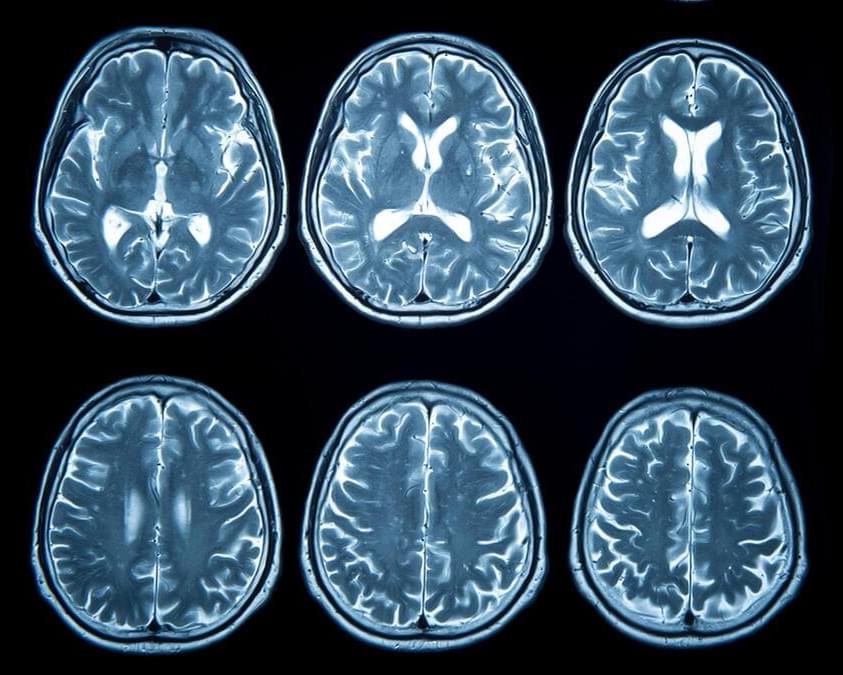

One prevailing hypothesis is that physical fitness mitigates structural brain changes that contribute to cognitive decline. Recent evidence points to a potential role involving myelin, the insulating sheath surrounding neurons that is crucial for efficient neural signaling and overall cognitive health. Myelination facilitates rapid signal transmission and supports neural network integrity.

The degeneration of myelin in the brain is increasingly recognized as a critical factor contributing to disruptions in neural communication, which may play a significant role in the cognitive decline observed in Alzheimer’s disease and other neurodegenerative disorders. Emerging research suggests that myelin breakdown may even precede the formation of amyloid-beta plaques and neurofibrillary tangles—the hallmark pathological features of Alzheimer’s disease. Advanced imaging studies have detected early myelin degeneration in individuals who later develop Alzheimer’s, indicating that myelin damage could be an initial event in the disease’s progression.

Age-related deterioration of myelin is closely associated with cognitive decline. Reduced white matter integrity—often resulting from myelin damage—is correlated with declines in memory, executive function, and processing speed in older adults. As myelin degradation leads to the slowing of cognitive processes and disrupts the synchronization of neural networks, preserving myelin integrity is essential for sustaining cognitive health across the lifespan.