PRESS RELEASE — Physicists have discovered a simpler way to create quantum entanglement between two distant photons — without starting with entanglement, without resorting to Bell-state measurements, and even without detecting all ancillary photons — an advance that challenges long-held assumptions in quantum networking.

And all it took was a friendly nudge from an artificial intelligence tool.

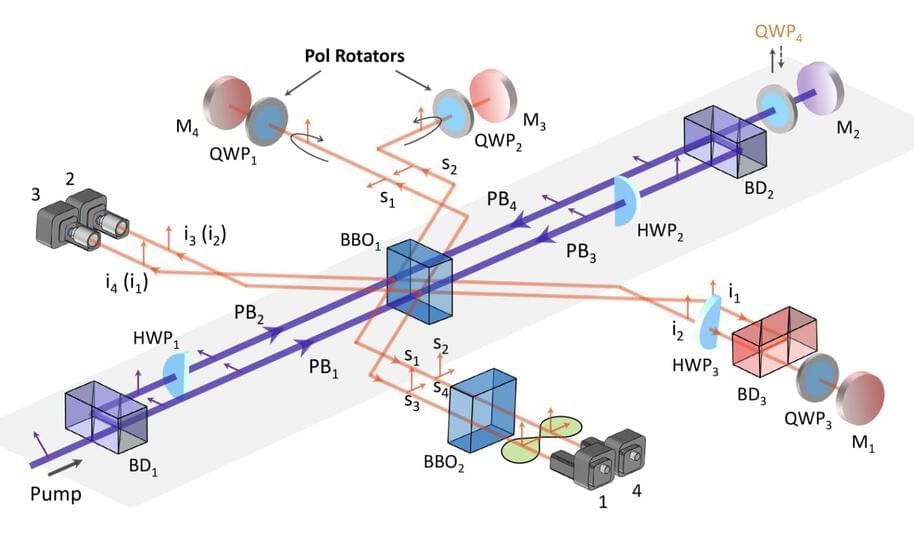

An international team of scientists led by researchers from Nanjing University and the Max Planck Institute for the Science of Light described the method in Physical Review Letters (accessed for this article through arXiv), demonstrating that entanglement can emerge from the indistinguishability of photon paths alone. Instead of relying on standard procedures that start from prepared entangled pairs and complex joint measurements, their technique leverages a basic quantum principle: when multiple photons could have come from several possible sources, erasing the clues to their origins can produce entanglement where none existed before.
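
For readers who want to see that principle in numbers, here is a minimal toy sketch. It is not the team's actual scheme, which involves more photons and sources. It assumes two hypothetical emission processes: one yields a photon pair in the unentangled state |HH>, the other a pair in the unentangled state |VV>. If a record of which process fired survives, the pair is a classical mixture. If that record is erased, the two possibilities add coherently. The function name `negativity` and the variable names are illustrative choices, and negativity is used here only as one convenient entanglement measure (0 means no entanglement for two qubits).

```python
import numpy as np

# Single-photon polarization basis states (toy model, not the paper's setup).
H = np.array([1.0, 0.0])
V = np.array([0.0, 1.0])

# Each hypothetical process alone emits an unentangled (product) pair.
HH = np.kron(H, H)
VV = np.kron(V, V)

def negativity(rho):
    """Entanglement negativity of a two-qubit density matrix."""
    # View rho as a 4-index tensor [a, b, a', b'] and swap b <-> b'
    # to take the partial transpose on the second photon.
    pt = rho.reshape(2, 2, 2, 2).transpose(0, 3, 2, 1).reshape(4, 4)
    eig = np.linalg.eigvalsh(pt)
    # Sum the magnitudes of negative eigenvalues (with a small tolerance).
    return float(np.abs(eig[eig < -1e-12]).sum())

# Which-process record kept: a 50/50 classical mixture of the two outcomes.
rho_marked = 0.5 * np.outer(HH, HH) + 0.5 * np.outer(VV, VV)

# Which-process record erased: the two amplitudes add coherently.
psi = (HH + VV) / np.sqrt(2)
rho_erased = np.outer(psi, psi)

print("record kept:  ", negativity(rho_marked))
print("record erased:", negativity(rho_erased))
```

In this toy case the mixture has zero negativity, while the coherent superposition is a maximally entangled Bell state. The only difference between the two is whether the origin of the photons can, even in principle, be told apart.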