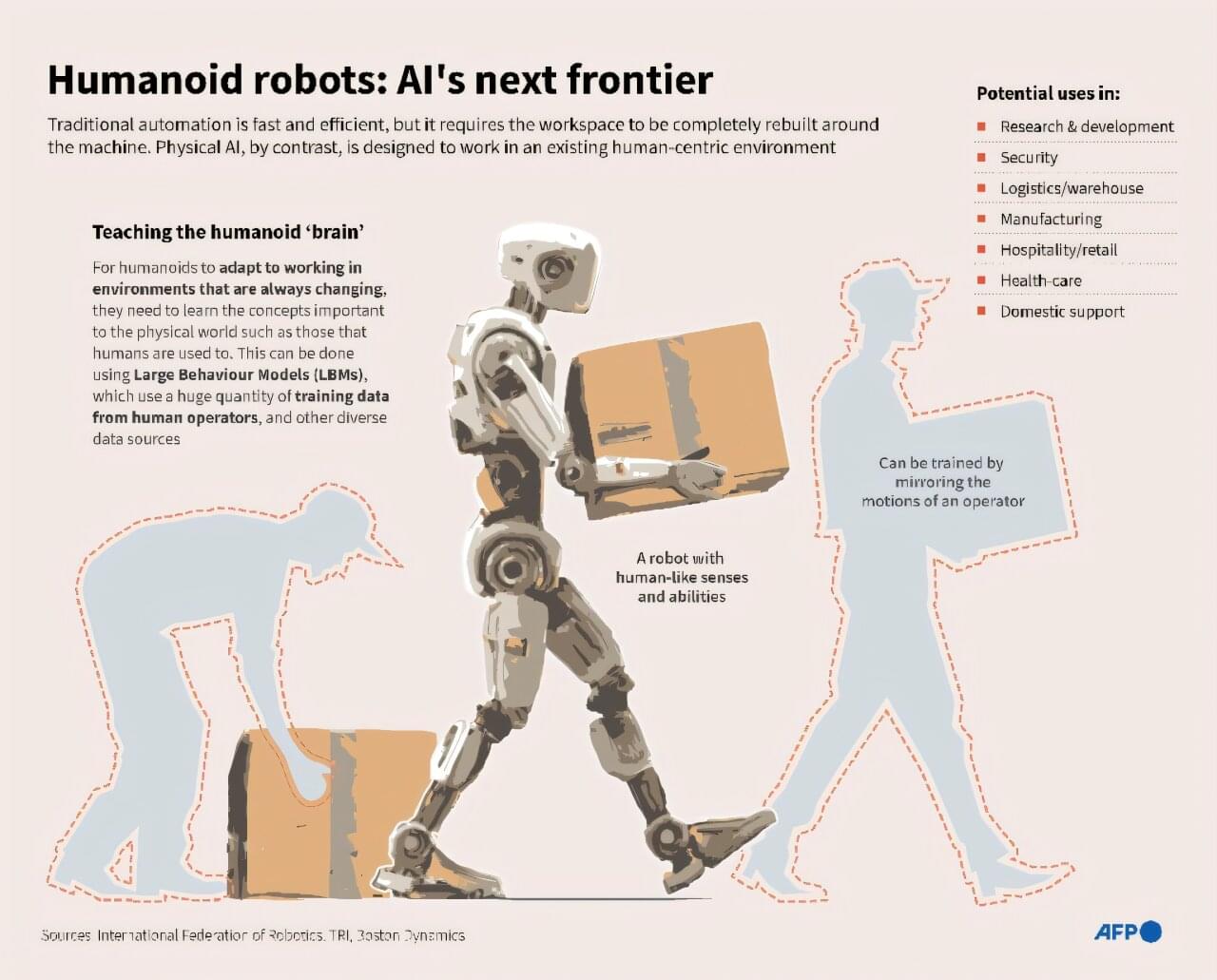

Elon Musk just made a bold announcement that could completely redefine Tesla’s future — but almost no one noticed. At the 2025 Tesla Shareholder Meeting, Elon revealed a deeper vision that goes far beyond cars. From AI and humanoid robots to clean energy and automation, Tesla is positioning itself as the driving force behind humanity’s next great leap.

In this video, Chris Smedley and the Ideal Wealth Grower team break down the hidden message behind Elon’s words, why the singularity may already be unfolding, and how Tesla’s shift toward artificial intelligence could reshape the global economy — and your investment strategy. Stay tuned till the end to discover why this could be the most important turning point in Tesla’s history.

First, find out which type of business suits you at https://quiz.franchisewithbob.com/rg, and COPY THE RIGHT BUSINESS FOR YOU!

Thanks, Franchise with Bob, for sponsoring this episode!

Watch on social media:

LinkedIn: https://www.linkedin.com/posts/ameier…

https://twitter.com/IdealGrower/status/1986

Welcome to Ideal Wealth Grower, the channel that helps you build real wealth, create passive income, and achieve true time freedom. I'm Axel Meierhoefer, a former US Air Force officer, real estate investor, and lifelong learner inspired by visionaries like Elon Musk. After more than 20 years of successful real estate investing, I reached financial freedom, and now I'm here to help you do the same. If you're ready to stop trading time for money, take control of your financial future, and live life on your terms, you're in the right place.

Stay connected with us!
✅ X: twitter.com/idealgrower
✅ Our community: cutt.ly/0rDZ1fNI
✅ LinkedIn: /ameierhoefer
✨ ✨

@idealwealthgrower

Top Data Scientist Exposes Quantum AI Disruption, Digital AI Twins | Anthony Scriffignano

Is INFINITE BANKING the Future of Sustainable Wealth? with Chris Naugle | Age of Abundance

Elon Musk Just Changed Everything at Tesla — And No One's Talking About It

#elonmusk #tesla #chrissmedley #idealwealthgrower #teslanews #teslastock #teslainvestors #teslashareholdermeeting #teslaupdate #teslafuture #teslabot #teslaai #artificialintelligence #teslainnovation #futuretech #robotics #teslainvesting #wealthbuilding #financialfreedom #stockmarket #investing #technologynews #innovation #elonmusknews #teslamasterplan #ai

what elon musk said at tesla 2025 meeting, tesla AI day 2025 full presentation, tesla master plan 4 explained, tesla robot update 2025, elon musk latest tesla news, elon musk tesla 2025 updates, tesla shareholder meeting highlights
