A team of math and AI researchers at Microsoft Asia has designed and developed a small language model (SLM) for solving math problems. The group has posted a paper on the arXiv preprint server that describes the technology and mathematics behind the new tool and reports how well it performed on standard benchmarks.

Over the past several years, multiple tech giants have worked steadily to improve their large language models (LLMs), producing AI products that became mainstream in a very short time. Unfortunately, such tools require massive amounts of computing power, which means they consume a lot of electricity and are expensive to run.

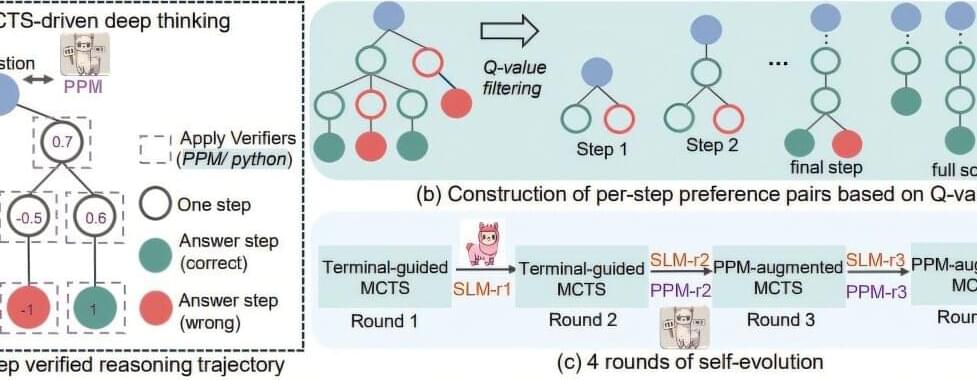

Because of that, some in the field have been turning to SLMs, which, as their name implies, are smaller and thus far less resource-intensive. Some are small enough to run on a local device. One of the main ways AI researchers get the most out of SLMs is by narrowing their focus: instead of trying to answer any question about anything, the models are designed to answer questions about something much more specific, such as math. In this new effort, Microsoft has focused not just on solving math problems but also on teaching an SLM how to reason its way through a problem.