*This video was recorded at Foresight’s Vision Weekend 2025 in Puerto Rico*

https://foresight.org/vw2025pr/

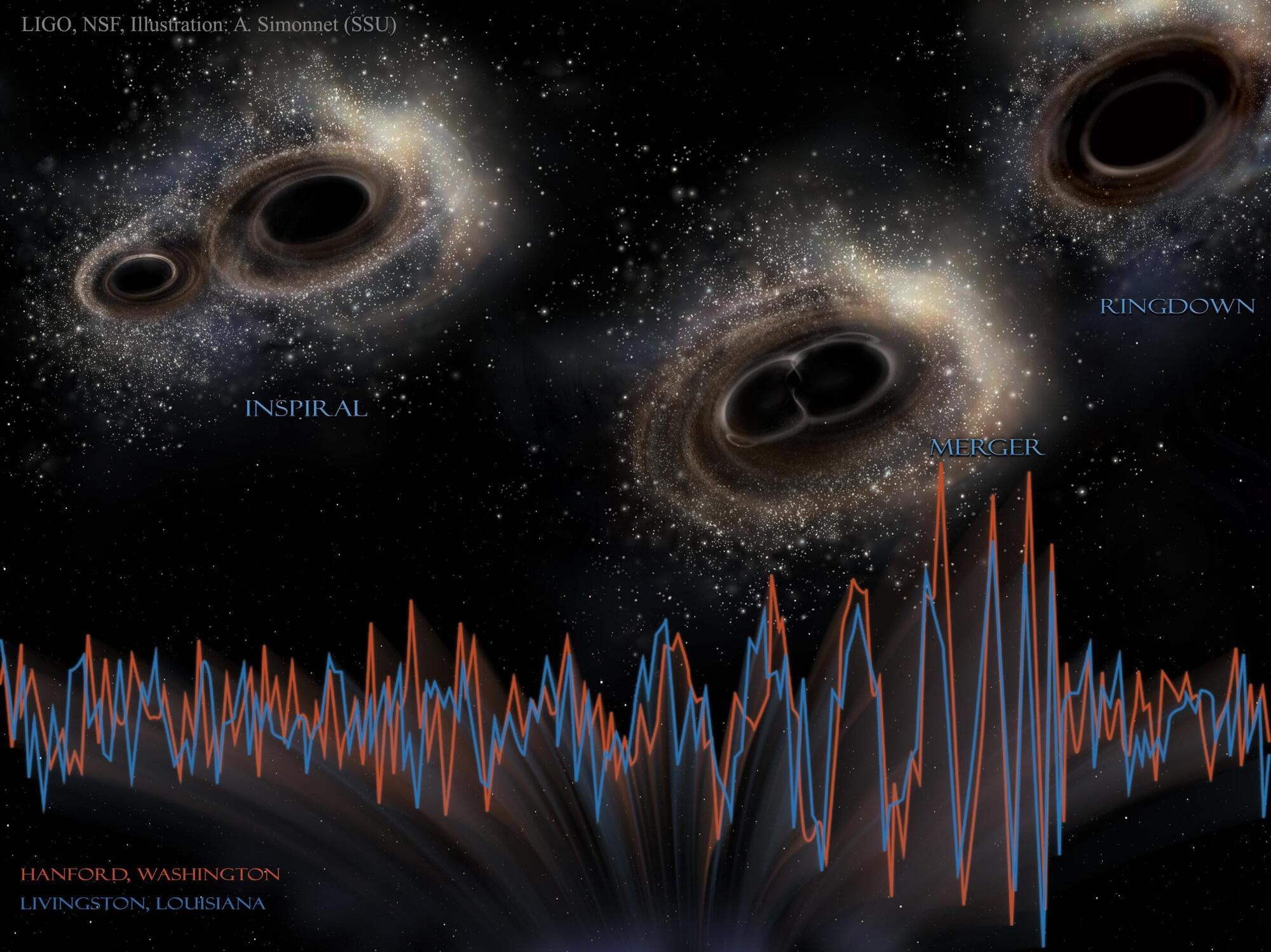

Our Vision Weekends are the annual festivals of Foresight Institute. Held in two countries over two weekends, they invite you to burst your tech silos and plan for flourishing long-term futures. This playlist captures the magic of our Puerto Rico edition, held February 21–23, 2025, in the heart of Old San Juan. Come for the ideas: join the conference, unconference, mentorship hours, curated 1-1s, tech demos, biohacking sessions, prize awards, and much more. Stay for fun with new friends: join the satellite gatherings, solarpunk future salsa night, beach picnic, and surprise island adventures. This year’s main conference track is dedicated to “Paths to Progress”, meaning you will hear 20+ invited presentations from Foresight’s core community highlighting paths to progress in the following areas: Existential Hope Futures; Longevity, Rejuvenation, Cryonics; Neurotech, BCIs & WBEs; Cryptography, Security & AI; Fusion, Energy, Space; and Funding, Innovation, Progress.

══════════════════════════════════════

*About The Foresight Institute*

The Foresight Institute is a research organization and non-profit that supports the beneficial development of high-impact technologies. Since our founding in 1986 on a vision of guiding powerful technologies, we have evolved into a many-armed organization focused on several fields of science and technology that are too ambitious for legacy institutions to support. From molecular nanotechnology to brain-computer interfaces, space exploration, cryptocommerce, and AI, Foresight gathers leading minds to advance research and accelerate progress toward flourishing futures.

*We are entirely funded by your donations. If you enjoy what we do, please consider donating through our donation page:* https://foresight.org/donate/

*Visit* https://foresight.org, *subscribe to our channel for more videos or join us here:*