AI may appear human, but it is an illusion we must tackle.

All manner of companies and tech leaders have been predicting when AGI will be achieved, but we might have one of the surest signs that it’s already here — or is just around the corner.

Google DeepMind is hiring a post-AGI researcher for its London office. According to a job listing posted online, the company is looking for a “Research Scientist, Post-AGI Research”. “We are seeking a Research Scientist to explore the profound impact of what comes after AGI,” the job listing says.

“At Google DeepMind, we’ve built a unique culture and work environment where long-term ambitious research can flourish. We are seeking a highly motivated Research Scientist to join our team and contribute to groundbreaking research that will focus on what comes after Artificial General Intelligence (AGI). Key questions include the trajectory of AGI to artificial superintelligence (ASI), machine consciousness, the impact of AGI on the foundations of human society,” says the job listing.

Elon Musk’s Tesla is on the verge of launching a self-driving platform that could revolutionize transportation with millions of affordable robotaxis, positioning the company to outpace competitors like Uber.

## Questions to inspire discussion

## Tesla’s Autonomous Driving Revolution

🚗 Q: How is Tesla’s unsupervised FSD already at scale? A: Tesla’s unsupervised FSD is currently deployed in 7 million vehicles, with millions of Hardware 4 units dormant in older vehicles, available at a price point of $30,000 or less.

🏭 Q: What makes Tesla’s autonomous driving solution unique? A: Tesla’s solution is vertically integrated with end-to-end ownership of the entire system, including silicon design, software platform, and OEM, allowing them to keep costs low and push down utilization on ride-sharing networks.

## Impact on Ride-Sharing Industry

💼 Q: How will Tesla’s autonomous vehicles affect Uber drivers? A: Tesla’s unsupervised self-driving cars will likely replace Uber’s 1.2 million US drivers, being 4x more useful due to no breaks and no human presence, operating at a per-mile cost below 50% of current Uber rates.

💰 Q: What economic pressure will Tesla’s solution put on Uber? A: Tesla’s autonomous driving solution will create tremendous pressure on Uber, with its cost structure acting as a magnet for high utilization, maintaining low pre-pressure costs for Tesla due to their fundamentally different design.

## Future Implications

🤝 Q: What potential strategy might Uber adopt to compete with Tesla? A: Uber may need to approach Tesla to pre-buy their first 2 million Cybercabs upfront, including production costs, as potentially the only path to compete with Tesla’s autonomous driving solution.

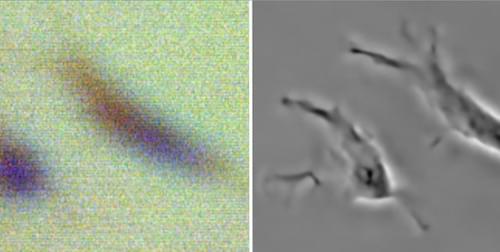

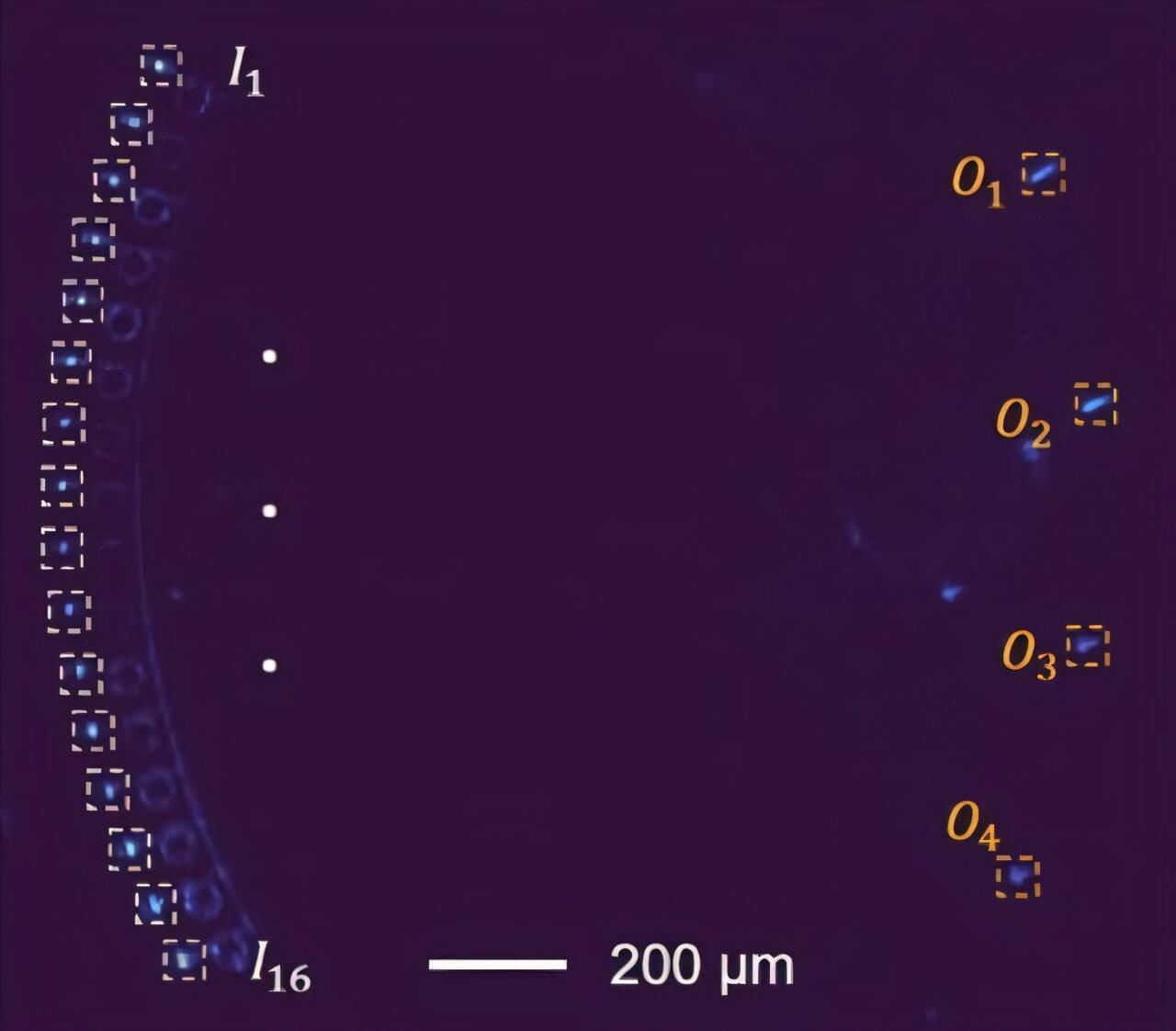

Penn Engineers have developed the first programmable chip that can train nonlinear neural networks using light—a breakthrough that could dramatically speed up AI training, reduce energy use and even pave the way for fully light-powered computers.

While today’s AI chips are electronic and rely on electricity to perform calculations, the new chip is photonic, meaning it uses beams of light instead. Described in Nature Photonics, the chip reshapes how light behaves to carry out the nonlinear mathematics at the heart of modern AI.

“Nonlinear functions are critical for training deep neural networks,” says Liang Feng, Professor in Materials Science and Engineering (MSE) and in Electrical and Systems Engineering (ESE), and the paper’s senior author. “Our aim was to make this happen in photonics for the first time.”

In this episode of Bloomberg Primer, we explore the world of biocomputing–where scientists are laying the foundation for a field that may blur the lines between the biological and synthetic.

Bloomberg Primer cuts through the complex jargon to reveal the business behind technologies poised to transform global markets. This six-part, planet-spanning series offers a comprehensive look at the …

*This video was recorded at Foresight’s Vision Weekend 2025 in Puerto Rico*

https://foresight.org/vw2025pr/

Our Vision Weekends are the annual festivals of Foresight Institute. Held in two countries, over two weekends, you are invited to burst your tech silos and plan for flourishing long-term futures. This playlist captures the magic of our Puerto Rico edition, held February 21–23, 2025, in the heart of Old San Juan. Come for the ideas: join the conference, unconference, mentorship hours, curated 1-1s, tech demos, biohacking sessions, prize awards, and much more. Stay for fun with new friends: join the satellite gatherings, solarpunk future salsa night, beach picnic, and surprise island adventures. This year’s main conference track is dedicated to “Paths to Progress”; meaning you will hear 20+ invited presentations from Foresight’s core community highlighting paths to progress in the following areas: Existential Hope Futures, Longevity, Rejuvenation, Cryonics, Neurotech, BCIs & WBEs, Cryptography, Security & AI, Fusion, Energy, Space, and Funding, Innovation, Progress.

══════════════════════════════════════

*About The Foresight Institute*

The Foresight Institute is a research organization and non-profit that supports the beneficial development of high-impact technologies. Since our founding in 1986 on a vision of guiding powerful technologies, we have continued to evolve into a many-armed organization that focuses on several fields of science and technology that are too ambitious for legacy institutions to support. From molecular nanotechnology, to brain-computer interfaces, space exploration, cryptocommerce, and AI, Foresight gathers leading minds to advance research and accelerate progress toward flourishing futures.

*We are entirely funded by your donations. If you enjoy what we do please consider donating through our donation page:* https://foresight.org/donate/

*Visit* https://foresight.org, *subscribe to our channel for more videos or join us here:*
