Twelve‑year‑old Kai Pollnitz from Georgetown received a life‑changing surprise when YouTube creator MrBeast gifted him a custom Open Bionics Hero PRO robotic hand. Kai, who was born with a congenit…

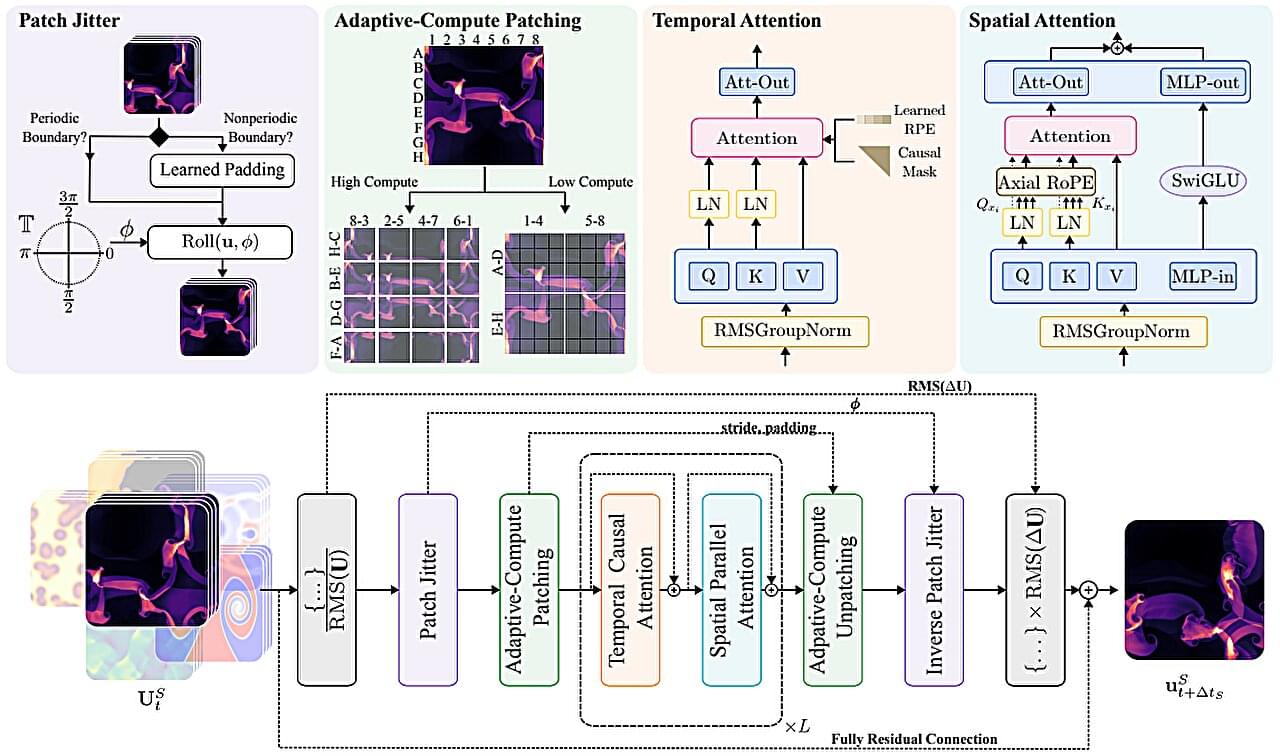

While popular AI models such as ChatGPT are trained on language or photographs, new models created by researchers from the Polymathic AI collaboration are trained on real scientific datasets. The models are already applying knowledge from one field to seemingly unrelated problems in another.

While most AI models—including ChatGPT—are trained on text and images, a multidisciplinary team, including researchers from the University of Cambridge, has something different in mind: AI trained on physics.

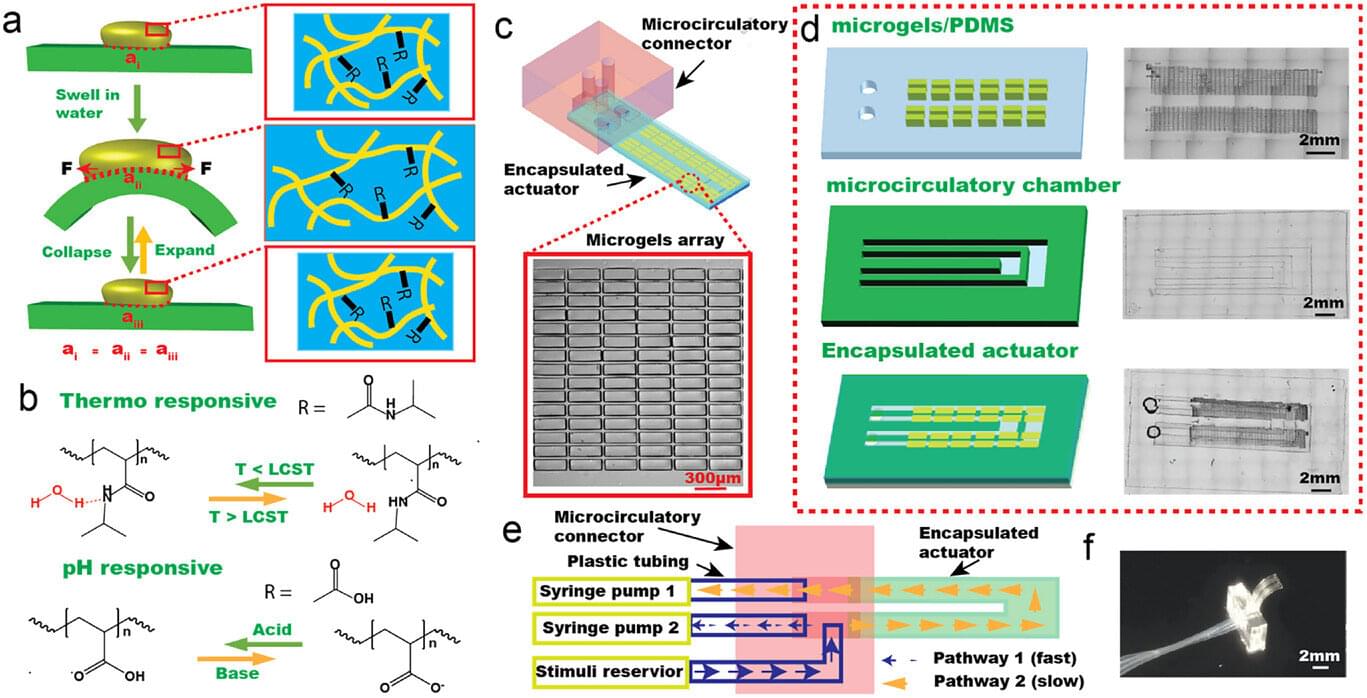

Researchers are making progress on a new synthetic material that behaves like biological muscle, an advance that could open a path to soft robotics, prosthetic devices and advanced human-machine interfaces. Their research, recently published in Advanced Functional Materials, demonstrates a hydrogel-based actuator system that integrates movement, control and fuel delivery in a single platform.

Biological muscle is one of nature’s marvels, said Stephen Morin, associate professor of chemistry at the University of Nebraska–Lincoln. It can generate impressive force, move quickly and adapt to many different tasks. It is also remarkably flexible in its energy use: it can draw on sugars, fats and other chemical stores, converting them into usable energy exactly when and where muscles need it to move.

A synthetic version of muscle is one of the Holy Grails of materials science.

Generative deep learning models are artificial intelligence (AI) systems that can create texts, images, audio files, and videos for specific purposes, following instructions provided by human users. Over the past few years, the content generated by these models has become increasingly realistic and is often difficult to distinguish from real content.

Many of the videos and images circulating on social media platforms today are created by generative deep learning models, yet their effects on the people who view them remain poorly understood. At the same time, some computer scientists have proposed strategies to mitigate the possible harms of spreading fake content, such as clearly labeling these videos as AI-generated.

Researchers at the University of Bristol recently carried out a study to better understand the influence of deepfake videos on viewers, while also assessing how users perceive AI-generated videos that are labeled as “fake.” Their findings, published in Communications Psychology, suggest that knowing a video was created with AI does not always make it less “persuasive” for viewers.

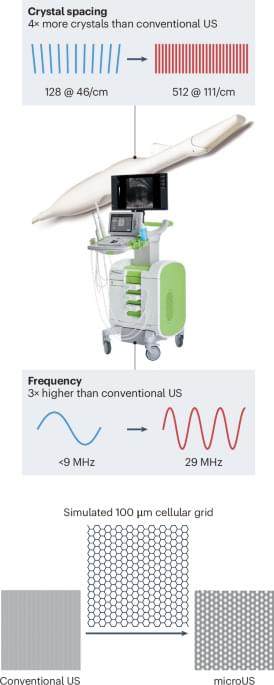

This Review focuses on micro-ultrasound as a new imaging technology for prostate cancer detection, comparing its performance with that of the current standard, MRI. The potential of micro-ultrasound in other applications, including tumour staging and active surveillance, and the use of artificial intelligence to support biopsy decision-making are also discussed, based on completed and ongoing trials.

“Artificial intelligence is a so-called general-purpose technology that will fundamentally change our economic and social system,” said Andreas Raff.

How can fears about AI replacing jobs affect trust in democracy? A recent study published in the Proceedings of the National Academy of Sciences addresses this question: a team of researchers from Germany and Austria investigated how the perception that AI replaces jobs could erode trust in political establishments. The study could help scientists, legislators, and the public better understand how the impact of AI extends beyond professional and personal life into politics.

For the study, the researchers conducted two separate surveys to gauge public perceptions of AI’s impact on the job market and how those perceptions might influence political attitudes. The first survey, conducted from April to May 2021, comprised 37,079 respondents from 38 European countries with an average age of 48 years (48 percent men, 52 percent women). Its goal was to determine whether respondents viewed AI as job-replacing or job-creating and how that view relates to their trust in political establishments. The second survey comprised 1,202 respondents from the United Kingdom with an average age of 47 years; its goal was to identify the causes of this relationship.

In the end, the researchers found that respondents who viewed AI as more job-replacing than job-creating also reported lower trust in political establishments. They further found that informing respondents that AI will replace jobs caused them to distrust political establishments.

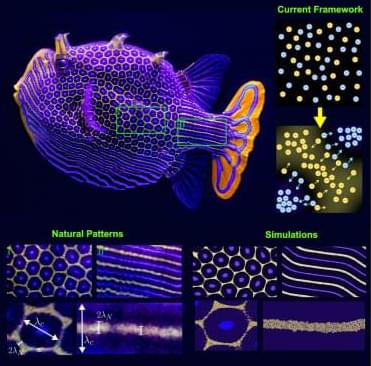

Natural patterns are rarely perfect. We couple classical Turing patterns in chemical gradients to cell motion via diffusiophoresis, showing that this interplay naturally yields textured and multiscale patterns. The patterns depend on parameters such as cell size distribution, Péclet number, volume fraction, and cell-cell interactions. These insights bridge idealized theory with real systems and point to routes for programmable materials, surfaces, and soft robotics.
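To make the coupling described above more concrete, here is a minimal 1D sketch. It is not the authors' model. Several choices are assumptions made only for illustration: Schnakenberg kinetics stand in for the Turing chemistry; cells drift with a log-sensing diffusiophoretic velocity Γ ∂ₓ ln u; coupling is one-way, so cells do not feed back on the chemistry; the Péclet number is defined as Pe = Γ / D_n; and every parameter value is hypothetical. Cell size distribution, volume fraction and cell-cell interactions are left out entirely.

```python
import numpy as np

# Hypothetical 1D sketch: Schnakenberg Turing chemistry (u, v) with one-way
# diffusiophoretic coupling to a cell density n. Not the authors' model;
# all parameter values below are illustrative assumptions.

def simulate(n_grid=200, dx=0.5, dt=0.005, t_end=50.0,
             a=0.1, b=0.9, d_u=0.5, d_v=20.0,
             d_n=0.5, gamma=1.0, seed=0):
    """Integrate to t_end with explicit Euler on a periodic grid; return (u, n).

    Cells drift with velocity gamma * d(ln u)/dx, so the (assumed)
    Peclet number is gamma / d_n.
    """
    rng = np.random.default_rng(seed)
    u_star, v_star = a + b, b / (a + b) ** 2  # homogeneous steady state
    u = u_star + 0.01 * rng.standard_normal(n_grid)  # small random noise
    v = v_star + 0.01 * rng.standard_normal(n_grid)
    n = np.ones(n_grid)  # uniform initial cell density

    def laplacian(f):
        # Second-order central difference with periodic wrap-around.
        return (np.roll(f, 1) - 2 * f + np.roll(f, -1)) / dx**2

    for _ in range(int(round(t_end / dt))):
        # Reaction-diffusion update with Schnakenberg kinetics.
        uuv = u * u * v
        u_new = u + dt * (d_u * laplacian(u) + a - u + uuv)
        v_new = v + dt * (d_v * laplacian(v) + b - uuv)

        # Cell flux on faces i+1/2: Fickian diffusion plus phoretic drift
        # up the gradient of ln u (clamped to avoid log of non-positive u).
        log_u = np.log(np.maximum(u, 1e-12))
        drift = gamma * (np.roll(log_u, -1) - log_u) / dx
        n_face = 0.5 * (n + np.roll(n, -1))
        flux = -d_n * (np.roll(n, -1) - n) / dx + drift * n_face

        # Conservative divergence, so the total cell number is preserved.
        n = n - dt * (flux - np.roll(flux, 1)) / dx
        u, v = u_new, v_new
    return u, n


if __name__ == "__main__":
    u, n = simulate()
    print(f"chemical contrast (std of u): {u.std():.2f}")
    print(f"correlation between u and n: {np.corrcoef(u, n)[0, 1]:.2f}")
```

For this assumed drift law, setting the cell flux to zero gives n ∝ u^Pe, so a larger Péclet number concentrates cells more sharply at the chemical peaks. Raising `gamma` shows this effect, as long as the grid still resolves the drift (roughly, |drift|·dx / d_n below 2).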

Facebook admitted something that should have been front-page news.

In an FTC antitrust filing, Meta revealed that only 7% of time on Instagram and 17% on Facebook is spent actually socializing with friends and family.

The rest?

Algorithmically selected content. Short-form video. Engagement optimized by AI.

This wasn’t a philosophical confession. It was a legal one. But it quietly confirms what many of us have felt for years:

What we still call “social networks” are not social.

They are attention machines.