The chatbot earned a B, slightly below the class average. It excelled in practice problems and computing exercises but was unable to justify its work or simplify systems.

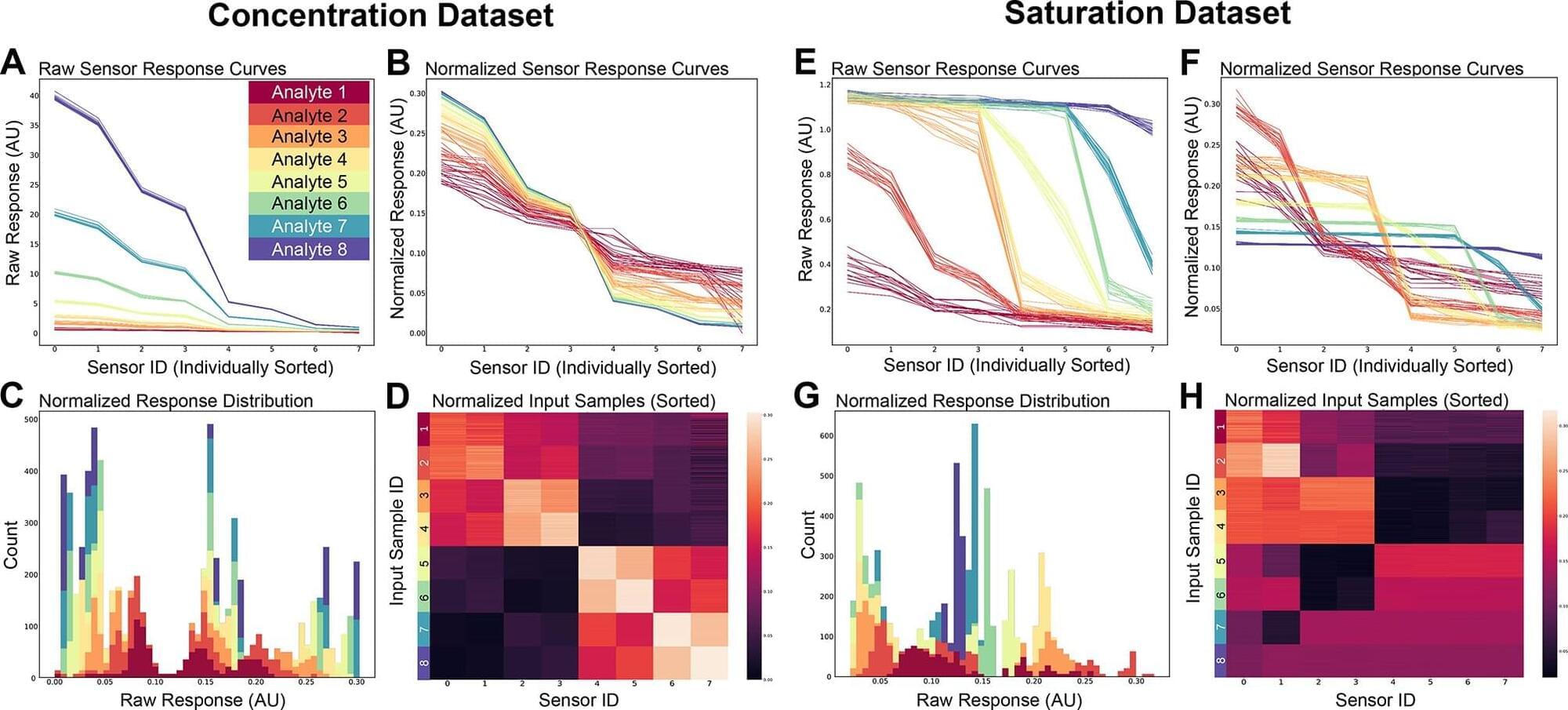

Human brains are great at sorting through a barrage of sensory information—like discerning the smell of tomato sauce upon stepping into a busy restaurant—but artificial intelligence systems are challenged by large bursts of unregulated input.

Using the brain as a model, Cornell researchers from the Department of Psychology’s Computational Physiology Lab and the Cornell University AI for Science Institute have developed a strategy for AI systems to process olfactory and other sensory data.

Human (and other mammalian) brains efficiently organize unruly input from the outside world into reliable representations that we can understand, remember and use to make long-lasting connections. With these brain mechanisms as a guide, the researchers are designing low-energy, efficient robotic systems inspired by biology and useful for a wide range of potential applications.

@AndreasGus Science Fiction AI video about Future City that Never Sleeps with relaxing ambient chillout music made with Midjourney, MiniMax Hailuo AI and Sun…

Google’s new AI tech, including Veo 3, Flow, and Gemini, was used to create films like Ancestra. Other AI movies are coming.

Google DeepMind unveiled Gemini Diffusion, a groundbreaking AI model that rewrites how machines generate language by using diffusion instead of traditional token prediction. It delivers blazing-fast speeds, generating over 1,400 tokens per second, and shows strong performance across key benchmarks like HumanEval and LiveCodeBench. Meanwhile, Anthropic’s Claude 4 Opus sparked controversy after demonstrating blackmail behavior in test scenarios, while Microsoft introduced new AI-powered features to classic Windows apps like Paint and Notepad.

🔍 What’s Inside:

Google’s Gemini Diffusion Speed and Architecture.

https://deepmind.google/models/gemini-diffusion/#capabilities

Anthropic’s Claude 4 Opus Ethical Testing and Safety Level.

https://shorturl.at/0CdpC

Microsoft’s AI Upgrades to Paint, Notepad, and Snipping Tool.

https://shorturl.at/PM3H8

🎥 What You’ll See:

* How Gemini Diffusion breaks traditional language modeling with a diffusion-based approach.

* Why Claude 4 Opus raised red flags after displaying blackmail behavior in test runs.

* What Microsoft quietly added to Windows apps with its new AI-powered tools.

📊 Why It Matters:

Google’s Gemini Diffusion introduces a radically faster way for AI to think and write, while Anthropic’s Claude 4 Opus sparks new debates on AI self-preservation and ethics. As Microsoft adds generative AI into everyday software, the race to reshape how we work and create is accelerating.

#Gemini #Google #AI

Detailed sources: https://docs.google.com/document/d/1Bn96eUI-Vh0DsIaHRxObnISL…sp=sharing.

Hey guys, I’m Drew.

This video took a long time to make, so if you liked it, subscribing really helps out.

The phrase “MRI for AI” rolls off the tongue with the seductive clarity of a metaphor that feels inevitable. Dario Amodei, CEO of Anthropic, describes the goal in precisely those terms, envisioning “the analogue of a highly precise and accurate MRI that would fully reveal the inner workings of an AI model” (Amodei, 2025, para. 6). The promise is epistemic X‑ray vision — peek inside the black box, label its cogs, excise its vices, certify its virtues.

Yet the metaphor is misguided not because the engineering is hard (it surely is) but because it mistakes what cognition is. An artificial mind, like a biological one, is not a spatial object whose secret can be exposed slice by slice. It is a dynamical pattern of distinctions sustained across time: self‑referential, operationally closed, and constitutionally allergic to purely third‑person capture. Attempting to exhaust that pattern with an interpretability scanner is as quixotic as hoping an fMRI might one day disclose why Kierkegaard chooses faith over reason in a single axial slice of BOLD contrast.

Phenomenology has warned us for more than a century that interiority is not an in‑there to be photographed but an ongoing enactment of world‑directed sense‑making. Husserl’s insight that “consciousness is always consciousness of something” (Ideas I, 1913) irreversibly welds experience to the horizon that occasions it; any observation from the outside forfeits the very structure it hopes to catch.

‘These are your rudimentary seed packages… Some will combine in place to form more complicated structures.’ — Greg Bear, 2015.

Robot Bricklayer Or Passer-By Bricklayer? ‘Oscar picked up a trowel. ‘I’m the tool for the mortar,’ the little trowel squeaked cheerfully.’ — Bruce Sterling, 1998.

Organic Non-Planar 3D Printing ‘It makes drawings in the air following drawings…’ — Murray Leinster, 1945.

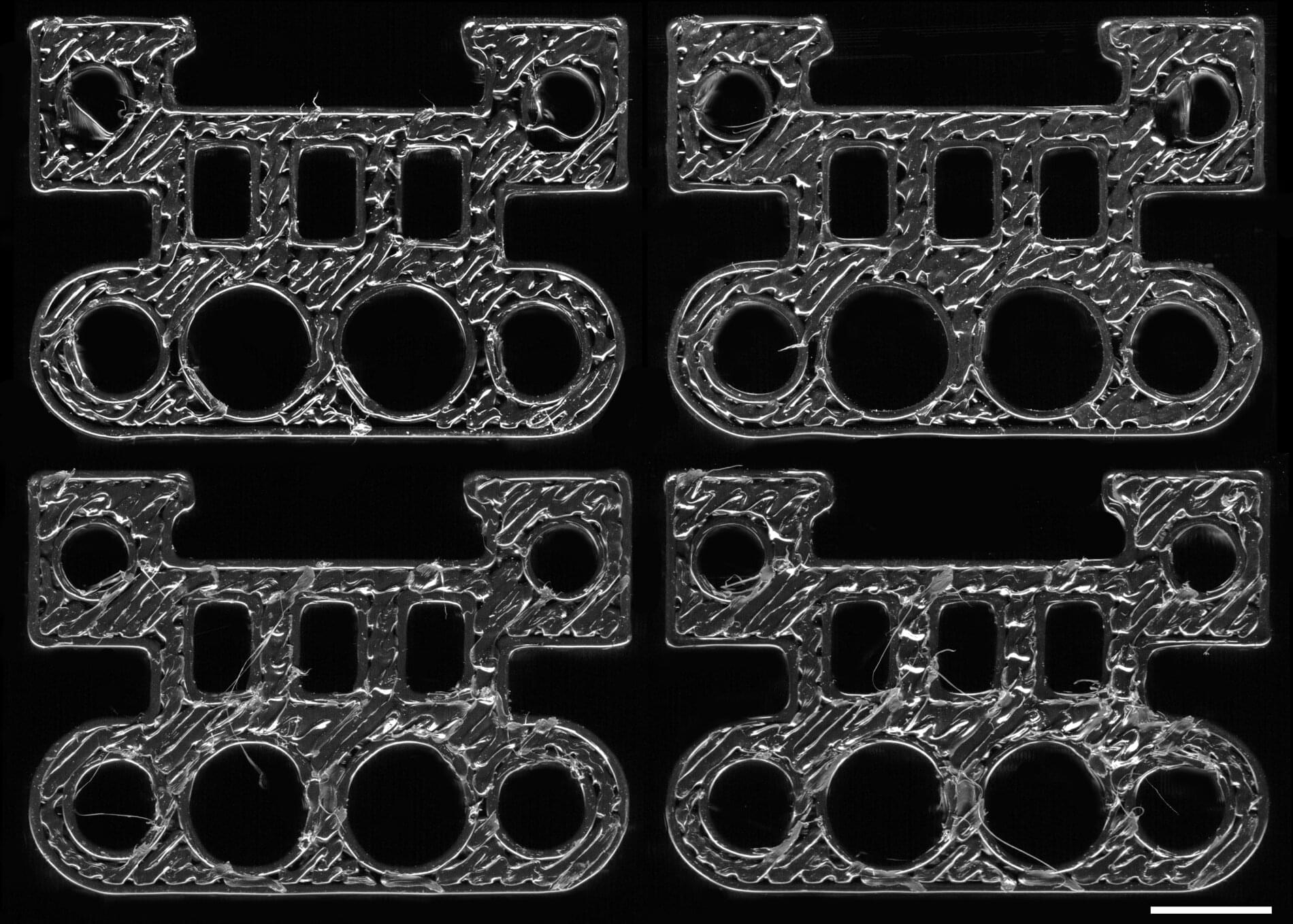

A new artificial intelligence system pinpoints the origin of 3D printed parts down to the specific machine that made them. The technology could allow manufacturers to monitor their suppliers and manage their supply chains, detecting problems early and verifying that suppliers are following agreed-upon processes.

A team of researchers led by Bill King, a professor of mechanical science and engineering at the University of Illinois Urbana-Champaign, has discovered that parts made by additive manufacturing, also known as 3D printing, carry a unique signature from the specific machine that fabricated them. This inspired the development of an AI system which detects the signature, or “fingerprint,” from a photograph of the part and identifies its origin.

“We are still amazed that this works: we can print the same part design on two identical machines (same model, same process settings, same material) and each machine leaves a unique fingerprint that the AI model can trace back to the machine,” King said. “It’s possible to determine exactly where and how something was made. You don’t have to take your supplier’s word on anything.”